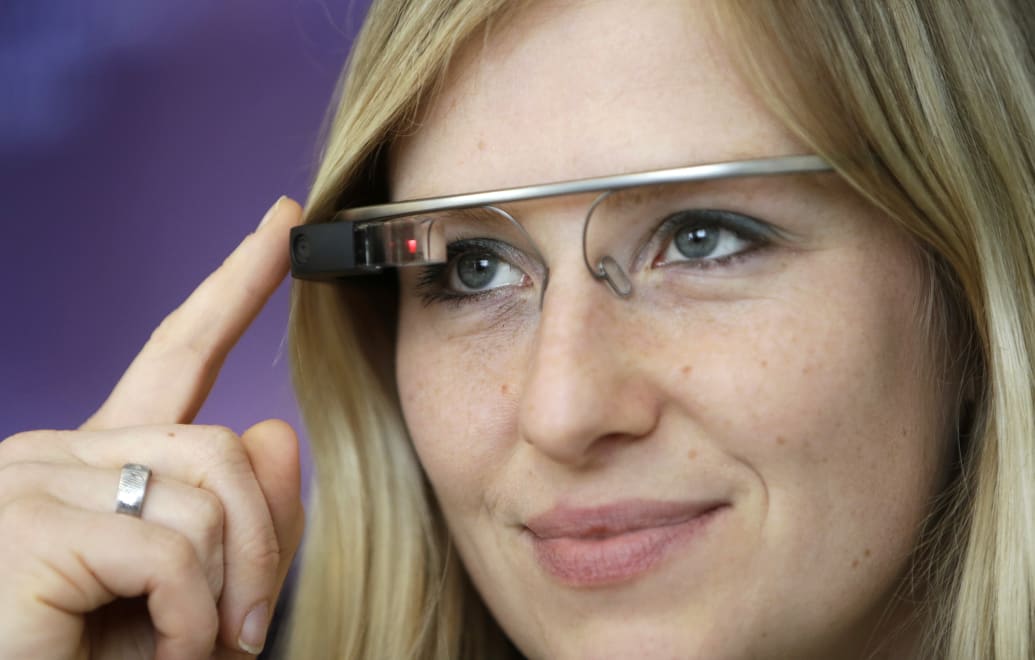

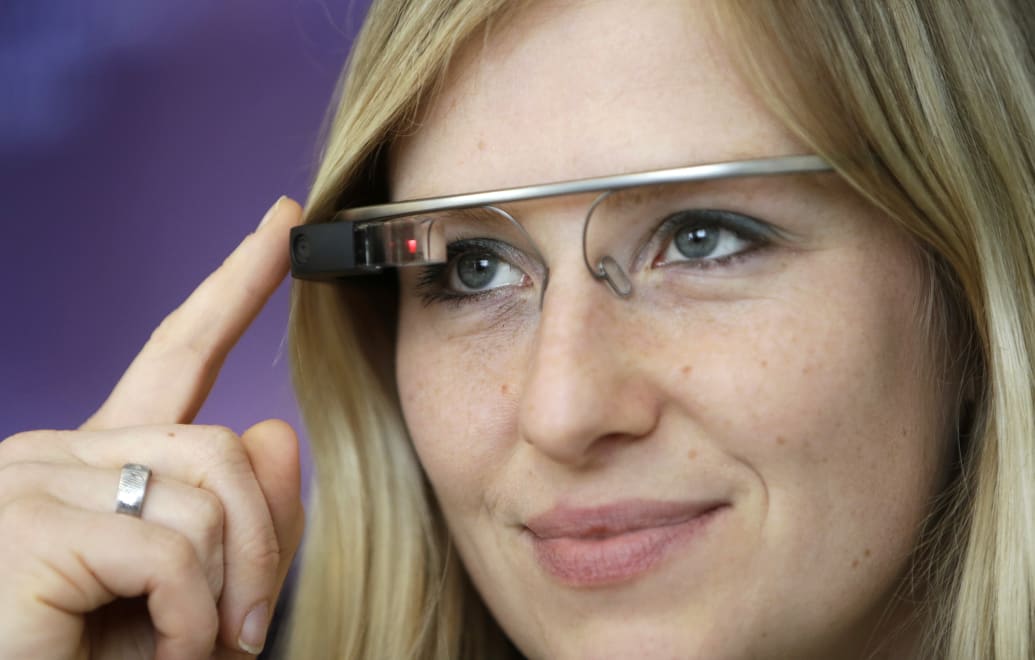

Ten years after the initial release of Google Glass, one of the first commercially available smart glasses with a camera, a second revolution in wearable technology is underway, and it's often ignoring the lessons of the first.

Last month, Meta CEO Mark Zuckerberg appeared at Meta Connect, the company's annual conference for virtual reality developers, and unveiled something even more compelling than real legs: Meta Smart Glasses. The device, made with sunglasses manufacturer Ray-Ban, is actually the second generation of internet-connected wearables to come out of this partnership. The first, Ray-Ban Stories, dropped in 2021 and was largely forgotten. Those glasses had dual cameras that could take photos or 30-second videos.

Meta Smart Glasses, which hit store shelves Oct. 17, go a lot further. First, the glasses come with Meta AI, a conversational assistant in the style of ChatGPT that is built on Meta's large language models (LLMs), which have already praised Genghis Khan and pushed conspiracy theories. They will also expand the camera's capabilities from short bursts of photo and video recording to full livestreaming.

Meta Smart Glasses include a conversational assistant in the style of ChatGPT, built on Meta's large language models. (Carlos Barria)

Meta isn't the only company turning to wearable technology to boost AI. In fact, its announcement seemed to set off a slew of other devices rushing to cash in on the AI-wearable hype. Humane, a technology startup created by ex-Apple employees, debuted its Ai Pin, which is like a version of Siri or Alexa with a laser display, a camera, and a microphone, on the lapel of Naomi Campbell's jacket when she walked the runway at Paris Fashion Week 2023.
A few days later, Rewind AI announced that it will create a pendant that records and transcribes everything the wearer says and hears. But these companies would do well to remember that Google Glass, released in 2013, didn't fail because the technology wasn't good enough. It failed because it creeped people out.

Wearable Red Flags

The rush to get these wearables out into the wild poses two privacy problems. First, how much of our lives will be captured by these tools without our knowledge and consent? And second, will our conversations, movements, and expressions be used to train AI models? For the most part, these questions don't have clear answers.

Meta, which didn't return multiple requests for comment from The Daily Beast, has said that taking photos in public with smart glasses can raise concerns. To that end, the company has included an indicator light on the frames that, in theory, lets others know when the wearer is filming. Setting aside that early reviews suggest the light is not always visible, and that Meta drew warnings from EU privacy regulators over the indicator on Ray-Ban Stories, this method of notifying others of recording isn't enough, according to Calli Schroeder, senior counsel and global privacy counsel at the consumer privacy watchdog Electronic Privacy Information Center.

"[Lights,] beeps, or similar signals are often so small and imperceptible that it would be surprising for a bystander to notice, let alone know what the signal is indicating," she told The Daily Beast. "We hear beeps and see small lights on people's devices all the time when we walk around in the world. I can't think of those things as a proper disclosure that I am being filmed."

According to Schroeder, the best way to alert others that you are wearing a camera or microphone is to make it visible at all times, for example by wearing "a big sign or a T-shirt that says 'I am taking photos of you' every time you use it." She added that she was only joking. [...] it is not possible to change it.

Considering the amount of data the company has, there may be even more to say about how it will use the data captured by the onboard cameras. After all, the company has publicly weighed including facial recognition on its smart glasses, though it does not include (or sell) this technology on its wearables. Google Glass didn't include Google's facial recognition technology either, but users were able to hack their own software to use it on the device.

And its AI depends on lots of data. "If you think about it, smart glasses are the ideal form factor for you to let an AI assistant see what you're seeing and hear what you're hearing," said Zuckerberg when he announced Meta Smart Glasses. Meta has admitted to using public posts from Facebook and Instagram, including text and photos, to train its own AI model. The company insists that photos and videos are kept private and that it only collects "essential" data, but it's not clear whether the company can or will use any information from the smart glasses to boost its AI.

AI wearable companies would do well to remember that Google Glass didn't fail because the technology wasn't good. It failed because it creeped people out. (Reuters)

It isn't uncommon for companies to use "anonymized data" (information that has been stripped of any identifying details) to train their systems. However, it's also not uncommon for a poorly thought-out anonymization scheme to be incomplete. Researchers once bought the web browsing history of German citizens and combined it with publicly available data to reveal people's online habits, including a judge's porn viewing habits. It's also very difficult to anonymize the data recorded by cameras and microphones.

"Face recognition systems can identify people who come into view, effectively providing an actual map of where they are, based on the number of wearable devices around them," Schroeder warned. "Motion tracking or voice recognition can identify the person even if their face is covered."

Furthermore, there is no feasible way for people to opt out of having their likenesses recorded and used to train AI. "A company profiting from personal data that a person has not consented to provide, and has taken no action to consent to its collection, seems unethical," Schroeder said. "Merely existing in the world should not make us targets for data collection."

Return of the Glassholes

It's clear that some of the companies jumping into the wearable AI space have thought about this, even if their answers are often vague. Ella Geraerdts, Humane's communications manager, told The Daily Beast that the company's Ai Pin will be a "privacy-first device" with no "wake word" and no always-on listening. This, Geraerdts said, reflects "Humane's vision of building products that put trust at the center."

Dan Siroker, co-founder and CEO of Rewind, told The Daily Beast that his company rushed to announce the recording and transcribing Pendant while it was still early in development because "I wanted to put the importance of privacy into the ether."

Of all the AI wearables announced in recent weeks, the Pendant has probably garnered the most vitriol online. Although the company emphasizes that it's taking a "privacy-first approach" and plans to "offer features to ensure that no one is recorded without their consent," it has only offered two ideas for how to achieve this: either keeping only the recordings of people who have verbally consented to being recorded, or generating a summary of the recorded speech, with AI picking out the important facts, instead of a word-for-word transcript. Nothing has been finalized. "If we have good ideas that come in between now and [launch], we will include them," said Siroker.

To its credit, Rewind is transparent about how it handles data: Siroker has made it clear that it does not use recordings to train AI models, and that all recordings and transcripts are stored locally on the user's device.
Siroker agreed that the smart wearables that came before, and some that are coming onto the market now, played fast and loose with the concept of photography in public. When Google Glass hit the wild, businesses banned people from wearing it. Wearers were mocked and called Glassholes. Few of the new devices go far in overcoming the issues that held people back ten years ago; instead, they assume that things will be different this time around.

"Google Glass was very hard on privacy and I think it hurt the whole industry," said Siroker, "and we're still digging ourselves out of the hole that Google Glass made."
