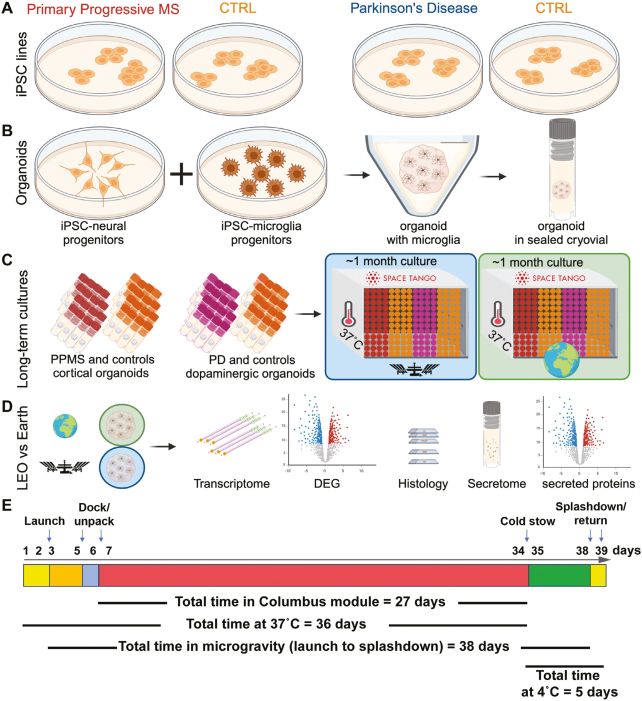

Summary: Researchers have developed MovieNet, an AI model inspired by the human brain, to understand and analyze moving images with unprecedented accuracy. Mimicking how neurons process visual sequences, MovieNet can identify subtle changes in dynamic scenes while using significantly less data and energy than traditional AI.

In testing, MovieNet outperformed current AI models and even human observers in recognizing behavioral patterns, such as tadpole swimming under different conditions. Its eco-friendly design and potential to revolutionize fields like medicine and drug screening highlight the transformative power of this breakthrough.

Key Facts:

- Brain-Like Processing: MovieNet mimics neurons to process video sequences with high precision, distinguishing dynamic scenes better than traditional AI models.
- High Efficiency: MovieNet achieves superior accuracy while using less energy and data, making it more sustainable and scalable for various applications.
- Medical Potential: The AI could aid in early detection of diseases like Parkinson’s by identifying subtle changes in movement, as well as enhancing drug screening methods.

Source: Scripps Research Institute

Imagine an artificial intelligence (AI) model that can watch and understand moving images with the subtlety of a human brain. Now, scientists at Scripps Research have made this a reality by creating MovieNet: an innovative AI that processes videos much like how our brains interpret real-life scenes as they unfold over time.

This brain-inspired AI model, detailed in a study published in the Proceedings of the National Academy of Sciences on November 19, 2024, can perceive moving scenes by simulating how neurons, or brain cells, make real-time sense of the world.

MovieNet’s reduced data requirements offer a greener alternative that conserves energy while performing at a high standard. Credit: Neuroscience News

Conventional AI excels at recognizing still images, but MovieNet introduces a method for machine-learning models to recognize complex, changing scenes, a breakthrough that could transform fields from medical diagnostics to autonomous driving, where discerning subtle changes over time is crucial. MovieNet is also more accurate and environmentally sustainable than conventional AI.

“The brain doesn’t just see still frames; it creates an ongoing visual narrative,” says senior author Hollis Cline, PhD, the director of the Dorris Neuroscience Center and the Hahn Professor of Neuroscience at Scripps Research.

“Static image recognition has come a long way, but the brain’s capacity to process flowing scenes, like watching a movie, requires a much more sophisticated form of pattern recognition. By studying how neurons capture these sequences, we’ve been able to apply similar principles to AI.”

To create MovieNet, Cline and first author Masaki Hiramoto, a staff scientist at Scripps Research, examined how the brain processes real-world scenes as short sequences, similar to movie clips. Specifically, the researchers studied how tadpole neurons responded to visual stimuli.

“Tadpoles have a very good visual system, plus we know that they can detect and respond to moving stimuli efficiently,” explains Hiramoto.

He and Cline identified neurons that respond to movie-like features, such as shifts in brightness and image rotation, and can recognize objects as they move and change.
Located in the brain’s visual processing region known as the optic tectum, these neurons assemble parts of a moving image into a coherent sequence.

Think of this process as similar to a lenticular puzzle: each piece alone may not make sense, but together they form a complete image in motion. Different neurons process various “puzzle pieces” of a real-life moving image, which the brain then integrates into a continuous scene.

The researchers also found that the tadpoles’ optic tectum neurons distinguished subtle changes in visual stimuli over time, capturing information in roughly 100 to 600 millisecond dynamic clips rather than still frames.

These neurons are highly sensitive to patterns of light and shadow, and each neuron’s response to a specific part of the visual field helps construct a detailed map of a scene to form a “movie clip.”

Cline and Hiramoto trained MovieNet to emulate this brain-like processing and encode video clips as a series of small, recognizable visual cues. This permitted the AI model to distinguish subtle differences among dynamic scenes.

To test MovieNet, the researchers showed it video clips of tadpoles swimming under different conditions.

Not only did MovieNet achieve 82.3 percent accuracy in distinguishing normal versus abnormal swimming behaviors, but it exceeded the abilities of trained human observers by about 18 percent.
It even outperformed existing AI models such as Google’s GoogLeNet, which achieved just 72 percent accuracy despite its extensive training and processing resources.

“This is where we saw real potential,” points out Cline.

The team determined that MovieNet was not only better than current AI models at understanding changing scenes, but it also used less data and processing time.

MovieNet’s ability to simplify data without sacrificing accuracy also sets it apart from conventional AI. By breaking down visual information into essential sequences, MovieNet effectively compresses data like a zipped file that retains critical details.

Beyond its high accuracy, MovieNet is an eco-friendly AI model. Conventional AI processing demands immense energy, leaving a heavy environmental footprint. MovieNet’s reduced data requirements offer a greener alternative that conserves energy while performing at a high standard.

“By mimicking the brain, we’ve managed to make our AI far less demanding, paving the way for models that aren’t just powerful but sustainable,” says Cline. “This efficiency also opens the door to scaling up AI in fields where conventional methods are costly.”

In addition, MovieNet has potential to reshape medicine.
As the technology advances, it could become a valuable tool for identifying subtle changes in early-stage conditions, such as detecting irregular heart rhythms or spotting the first signs of neurodegenerative diseases like Parkinson’s.

For instance, small motor changes related to Parkinson’s that are often hard for human eyes to discern could be flagged by the AI early on, providing clinicians valuable time to intervene.

Furthermore, MovieNet’s ability to perceive changes in tadpole swimming patterns when tadpoles were exposed to chemicals could lead to more precise drug screening techniques, as scientists could study dynamic cellular responses rather than relying on static snapshots.

“Current methods miss critical changes because they can only analyze images captured at intervals,” remarks Hiramoto.

“Observing cells over time means that MovieNet can track the subtlest changes during drug testing.”

Looking ahead, Cline and Hiramoto plan to continue refining MovieNet’s ability to adapt to different environments, enhancing its versatility and potential applications.

“Taking inspiration from biology will continue to be a fertile area for advancing AI,” says Cline. “By designing models that think like living organisms, we can achieve levels of efficiency that simply aren’t possible with conventional approaches.”

Funding: This work for the study “Identification of movie encoding neurons enables movie recognition AI” was supported by funding from the National Institutes of Health (RO1EY011261, RO1EY027437 and RO1EY031597), the Hahn Family Foundation and the Harold L. Dorris Neurosciences Center Endowment Fund.

About this AI research news

Author: Press Office


Source: Scripps Research Institute

Contact: Press Office – Scripps Research Institute

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Identification of movie encoding neurons enables movie recognition AI” by Hollis Cline et al. PNAS

Abstract

Identification of movie encoding neurons enables movie recognition AI

Natural visual scenes are dominated by spatiotemporal image dynamics, but how the visual system integrates “movie” information over time is unclear.

We characterized optic tectal neuronal receptive fields using sparse noise stimuli and reverse correlation analysis.

Neurons recognized movies of ~200-600 ms durations with defined start and stop stimuli. Movie durations from start to stop responses were tuned by sensory experience through a hierarchical algorithm.

Neurons encoded families of image sequences following trigonometric functions. Spike sequence and information flow suggest that repetitive circuit motifs underlie movie detection.

Principles of frog topographic retinotectal plasticity and cortical simple cells are employed in machine learning networks for static image recognition, suggesting that discoveries of principles of movie encoding in the brain, such as how image sequences and duration are encoded, may benefit movie recognition technology.

We built and trained a machine learning network that mimicked neural principles of visual system movie encoders.

The network, named MovieNet, outperformed current machine learning image recognition networks in classifying natural movie scenes, while reducing data size and steps to complete the classification task.

This study reveals how movie sequences and time are encoded in the brain and demonstrates that brain-based movie processing principles enable efficient machine learning.

Brain-Inspired AI Learns to Watch Videos Like a Human – Neuroscience News