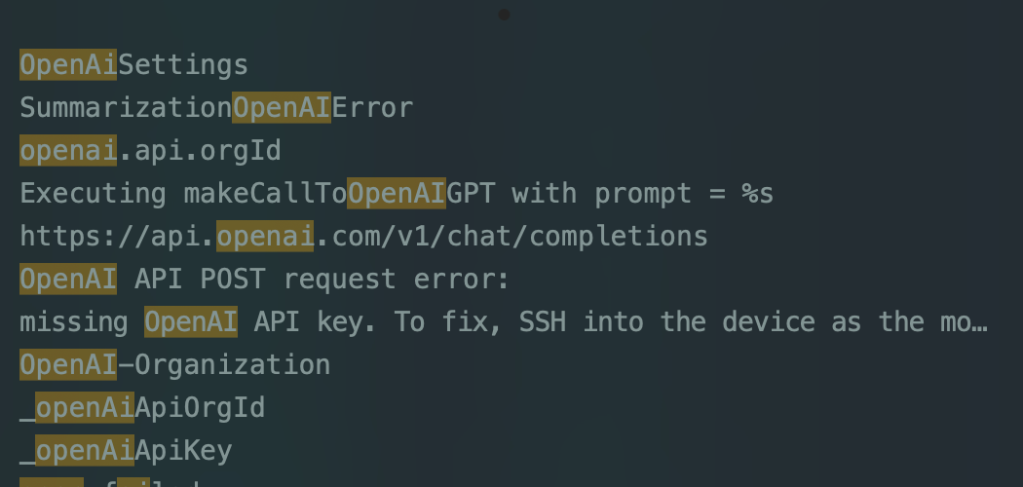

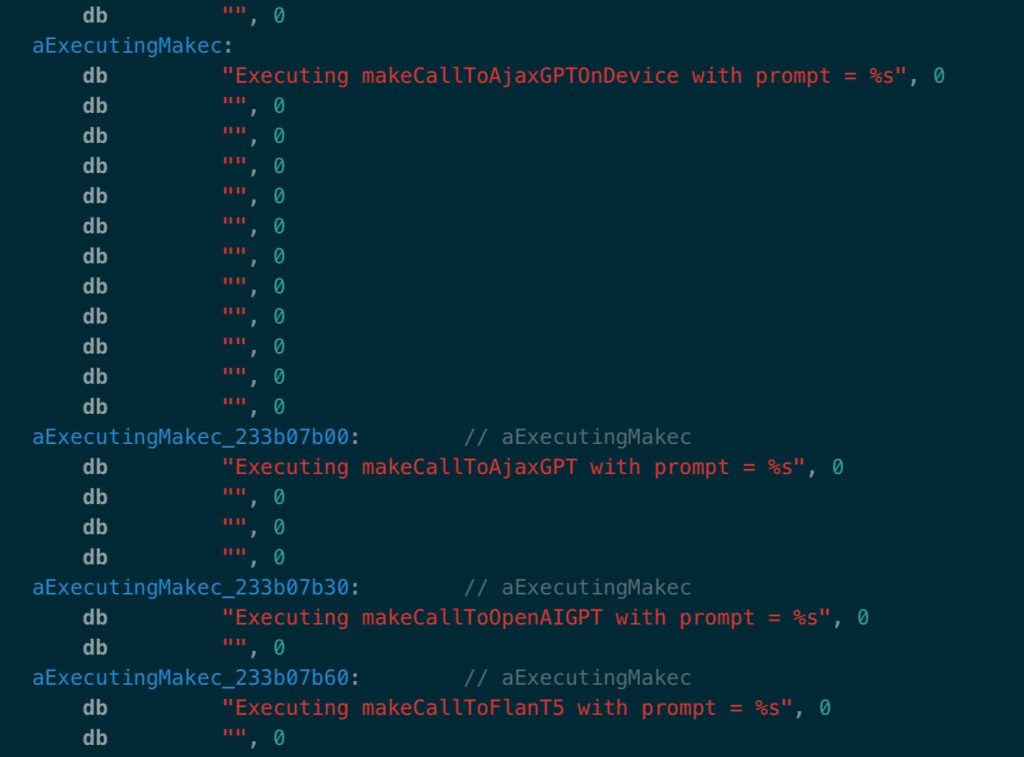

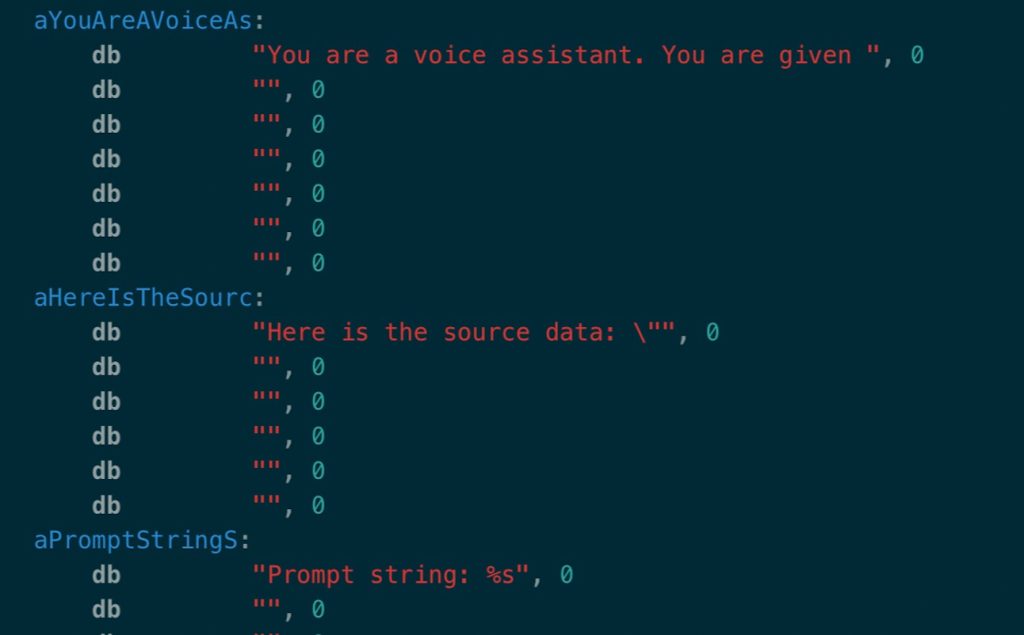

Apple is expected to unveil iOS 18, along with a new set of AI features, in June. Code found by 9to5Mac in the first beta of iOS 17.4 shows that Apple continues to work on a new version of Siri powered by large language model technology, with a little help from other sources. In fact, Apple appears to be using OpenAI's ChatGPT API for internal testing to help develop its own AI models.

According to this code, iOS 17.4 includes a new private framework called SiriSummarization that calls OpenAI's ChatGPT API, which Apple is using to test its new AI features. The code reveals that Apple is testing four different AI models, including its in-house model called "Ajax," which is expected to be integrated into iOS 18. Other models referenced in the iOS 17.4 code include the aforementioned ChatGPT and FLAN-T5. These findings point to Apple's ongoing work to integrate large language models into iOS and to compare the results against models such as ChatGPT and FLAN-T5.

In October, Bloomberg's Mark Gurman detailed Apple's plans for AI in iOS 18, explaining that the software team aims to incorporate significant large language model features into the upcoming version.

Apple seems to be using its own AI models to run the SiriSummarization framework and internally comparing the results with ChatGPT's. It is unlikely that Apple will use OpenAI models to power any of its AI features in iOS 18; SiriSummarization, for example, can perform summarization using on-device models.

The SiriSummarization framework in iOS 17.4 contains several hard-coded prompt examples. These include directives such as "please summarize," "please answer these questions," and "please summarize the given statement." The framework also includes instructions for what to do when an iMessage or SMS is received. This is in line with previous reports from Bloomberg, which stated that Apple is working on integrating AI into the Messages app so it can automatically answer questions and auto-complete sentences.

The code in iOS 17.4 includes fields such as senders, content, send_time, action type, value, current version, and confidence score, in JSON format. Based on these inputs, the system instructs the voice assistant on the appropriate action to take. Possible action types include MessageReply, GetDirection, Call, SaveContact, Remind, MessageContact, and None, while possible ActionValueTypes are message, address, phoneNumber, contact, and reminder. The confidence score ranges from 0 to 1 and indicates how confident the model is in the suggested action.

This highlights Apple's efforts to integrate AI into the Messages app and to provide features such as suggested actions based on received SMS messages. The details also give insight into the codenames and types of AI models Apple is using for internal testing. Bringing such features to iOS 18 would signal Apple's commitment to a more sophisticated, language-capable system.
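To make the action format concrete, here is a minimal Python sketch that parses and validates a single suggested action. The action types and ActionValueTypes are the ones reported from the iOS 17.4 code; everything else is an assumption for illustration: the JSON key names (`actionType`, `actionValueType`, `actionValue`, `confidenceScore`), the hypothetical `parse_suggested_action` and `should_suggest` helpers, and the 0.7 threshold are not taken from Apple's code.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Enumerations as reported from the iOS 17.4 code.
ACTION_TYPES = {
    "MessageReply", "GetDirection", "Call", "SaveContact",
    "Remind", "MessageContact", "None",
}
ACTION_VALUE_TYPES = {"message", "address", "phoneNumber", "contact", "reminder"}


@dataclass
class SuggestedAction:
    action_type: str
    action_value_type: Optional[str]
    action_value: Optional[str]
    confidence: float


def parse_suggested_action(raw: str) -> SuggestedAction:
    """Parse and validate a JSON action suggestion.

    The key names used here are hypothetical; the real keys in
    Apple's code may differ.
    """
    data = json.loads(raw)

    action_type = data["actionType"]
    if action_type not in ACTION_TYPES:
        raise ValueError(f"unknown action type: {action_type!r}")

    if action_type == "None":
        # No action suggested, so no value is expected.
        value_type, value = None, None
    else:
        value_type = data.get("actionValueType")
        if value_type not in ACTION_VALUE_TYPES:
            raise ValueError(f"unknown action value type: {value_type!r}")
        value = data.get("actionValue")

    # The confidence score must lie in [0, 1].
    confidence = float(data["confidenceScore"])
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence out of range: {confidence}")

    return SuggestedAction(action_type, value_type, value, confidence)


def should_suggest(action: SuggestedAction, threshold: float = 0.7) -> bool:
    # Hypothetical policy: surface only real actions above a threshold.
    return action.action_type != "None" and action.confidence >= threshold


reply = ('{"actionType": "Call", "actionValueType": "phoneNumber", '
         '"actionValue": "555-0100", "confidenceScore": 0.87}')
action = parse_suggested_action(reply)
print(action.action_type, action.confidence)  # Call 0.87
```

Rejecting unknown enum values and out-of-range scores, rather than silently passing them through, reflects the idea that a model's free-form output should be checked before any action is offered to the user.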

FTC: We use affiliate links to make money. More information.