This week, the scientific journal Frontiers in Cell and Developmental Biology published research that includes fake imagery made with Midjourney, one of the most popular AI image generators.

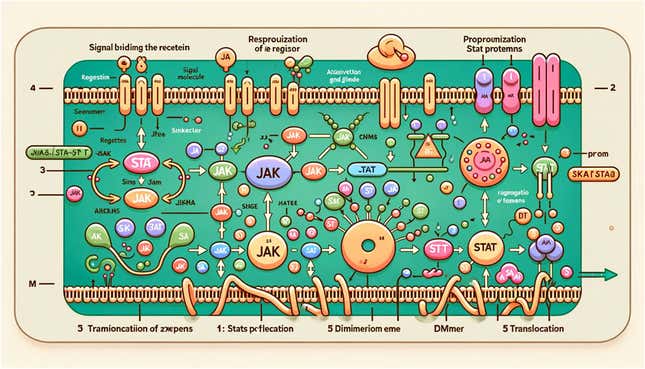

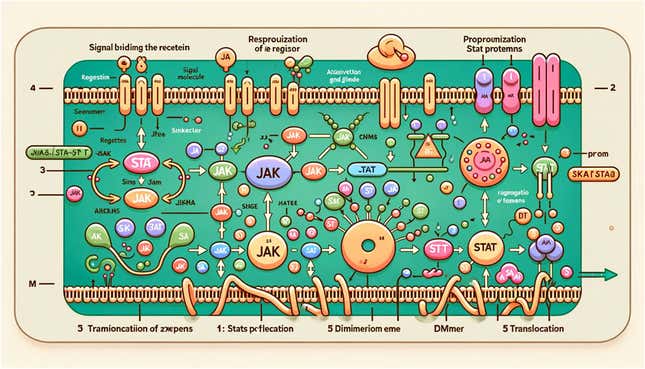

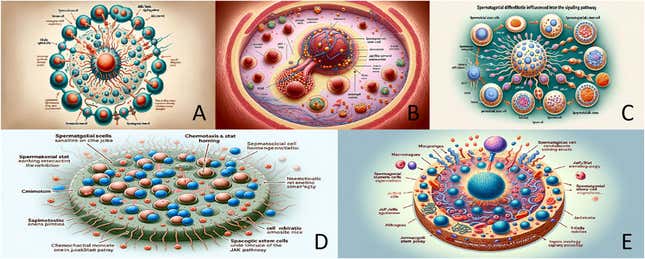

The open-access paper explores the relationship between stem cells in mammalian testes and a signaling pathway responsible for mediating inflammation and cancer in cells. The paper’s written content does not appear to be fake, but its most eye-popping aspects are not in the research itself. Rather, they are the inaccurate and grotesque depictions of rat testes, signaling pathways, and stem cells.

The AI-generated rat diagram depicts a rat (helpfully and correctly labeled) whose upper body is labeled as “senctolic stem cells.” What appears to be a very large rat penis is labeled “Dissilced,” with insets at right to highlight the “iollotte sserotgomar cell,” “dck,” and “Retat.” Hmm.

According to Frontiers’ editor guidelines, manuscripts are subject to “initial quality checks” by the research integrity team and the handling editor prior to the peer-review process. In other words, many eyes supposedly reviewed this work before the images were published.

To the researchers’ credit, they state in the paper that images in the article were generated by Midjourney. But Frontiers’ site for policies and publication ethics notes that corrections may be submitted if “there is an error in a figure that does not alter the conclusions” or “there are mislabeled figures,” among other factors. The AI-generated imagery certainly seems to fall under those categories. Dingjun Hao, a researcher at Xi’an Jiaotong University and co-author of the study, did not immediately respond to Gizmodo’s request for comment.

The rat image is clearly wrong, even if you’ve never cut open a rat’s genitals. But the other figures in the paper could pass as credible to the untrained eye, at least at first glance. Yet even someone who has never opened a biology textbook would see, upon further scrutiny, that the labels on each diagram are not quite English, a telltale sign of AI-generated text in imagery. The article was edited by an expert in animal reproduction at the National Dairy Research Institute in India, and it was reviewed by researchers at Northwestern Medicine and the National Institute of Animal Nutrition and Physiology. So how did the wacky images get published? Frontiers in Cell and Developmental Biology did not immediately respond to a request for comment.

The OpenAI text generator ChatGPT is proficient enough to get farkakte research past the supposedly discerning eyes of reviewers. A study conducted by researchers at Northwestern University and the University of Chicago found that human experts were duped by ChatGPT-produced scientific abstracts 32% of the time. So, even though these illustrations are obviously nonsense cosplaying as science, we shouldn’t overlook AI engines’ ability to pass off BS as real. Crucially, those study authors warned, AI-generated articles could cause a scientific integrity crisis. It seems like that crisis may be well underway. Alexander Pearson, a data scientist at the University of Chicago and co-author of that study, noted at the time that “Generative text technology has a great potential for democratizing science, for example making it easier for non-English-speaking scientists to share their work with the broader community,” but “it’s imperative that we think carefully on best practices for use.”

The increased popularity of AI has caused scientifically inaccurate imagery to make its way into scientific publications and news articles. AI images are easy to make and often visually compelling, but AI is also unwieldy, and it’s unsurprisingly difficult to convey all the nuance of scientific accuracy in a prompt for a diagram or illustration.

The recent paper is a far cry from the fake papers of years past, a pantheon that includes such hits as “What’s the Deal With Birds?” and the Star Trek-themed work “Rapid Genetic and Developmental Morphological Change Following Extreme Celerity.” Sometimes, a paper that gets through peer review is just funny. Other times, it’s a sign that “paper mills” are churning out so-called research that has no scientific merit. In 2021, Springer Nature was forced to retract 44 papers in the Arabian Journal of Geosciences for being total nonsense. In this case, the research may have been OK, but the entire study is thrown into question by the inclusion of Midjourney-generated images. The average reader may have a hard time considering signaling pathways when they’re still busy counting exactly how many balls the rat is supposed to have.

'Rat Dck' Among Gibberish AI Images Published in Science Journal