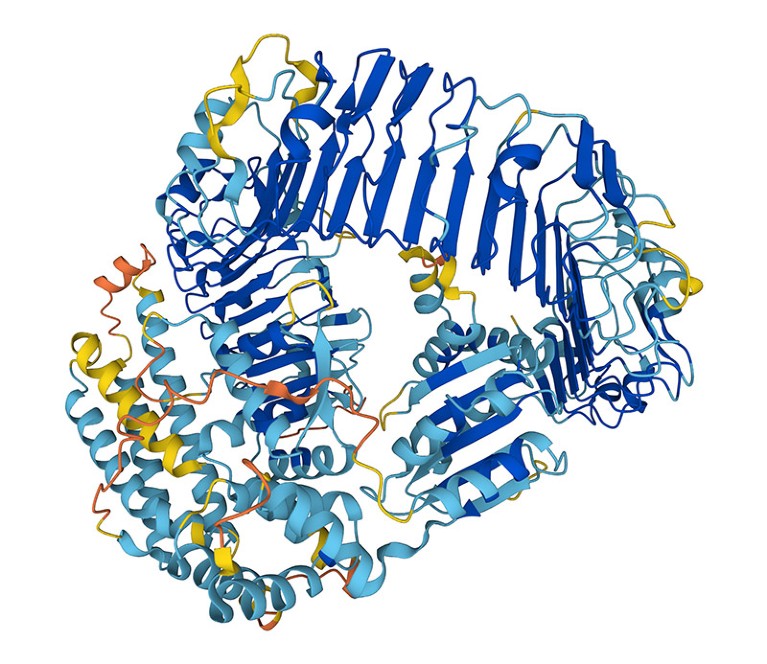

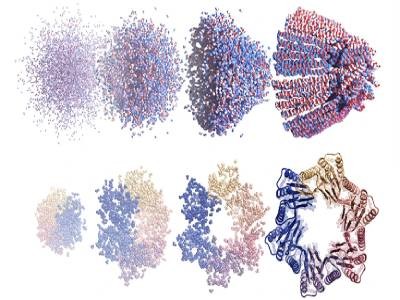

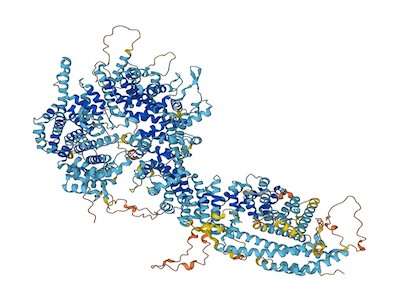

AlphaFold's AI tool can design proteins for specific purposes. Credit: Google DeepMind/EMBL-EBI (CC-BY-4.0)

Could proteins engineered by artificial intelligence (AI) be used as weapons? Hoping to head off this possibility, as well as the prospect of burdensome government regulation, researchers today launched an initiative calling for the safe and ethical use of protein design.

"…[AI] than the risks at present," says David Baker, a computational biophysicist at the University of Washington in Seattle, who is part of the voluntary initiative. Many other scientists who use AI in biological design have signed its list of commitments.

"It's a good start. I'll sign it," says Mark Dybul, a global health expert at Georgetown University in Washington DC, who led a 2023 report on AI and biosecurity for the think tank Helena in Los Angeles, California. But he also thinks that "we need government action and rules, and not just voluntary guidance".

The initiative follows reports from the US Congress, think tanks and other organizations exploring the possibility that AI tools, ranging from protein-structure prediction networks such as AlphaFold to large language models such as the one that powers ChatGPT, could make it easier to create biological weapons, including new toxins or highly infectious viruses.

The risks of designer proteins

Researchers, including Baker and his colleagues, have been trying to design and engineer new proteins for years. But their ability to do so has grown exponentially recently, thanks to advances in AI. Tasks that once took years or were impossible, such as designing a protein that binds to a specific molecule, can now be done in minutes. Most of the AI tools that scientists have developed to make this possible are freely available.

To assess how designer proteins might be misused, Baker's Institute for Protein Design at the University of Washington held an AI safety summit in October 2023. "The question was: how, if in any way, should protein design be regulated, and what, if any, are the dangers?" says Baker.

The initiative that he and many other scientists in the United States, Europe and Asia are launching today calls on the biodesign community to police itself. This includes regularly reviewing the capabilities of AI tools and monitoring research practices. Baker would like to see his field establish a committee of experts to review software before it becomes widely available, and to recommend 'guardrails' if necessary.

The initiative also calls for better screening of DNA synthesis, the step that turns AI-designed proteins into actual molecules. Currently, many companies that provide this service belong to an industry group, the International Gene Synthesis Consortium (IGSC), which requires them to screen orders to identify harmful molecules such as toxins or pathogens. "The best way to protect against AI-generated threats is to have AI models that can detect those threats," says James Diggans, head of biosecurity at Twist Bioscience, a DNA-synthesis company in South San Francisco, California, and chair of the IGSC.

Governments are also weighing the risks posed by AI. In October 2023, US President Joe Biden signed an executive order calling for an assessment of such risks and raising the possibility of requiring DNA-synthesis screening for federally funded research. Such regulation could, however, restrict the development of the drugs, vaccines and materials that AI-designed proteins could yield. Diggans adds that it is unclear how protein-design tools could be regulated, given the rapid pace of development. "It's hard to imagine regulation that would be appropriate one week and still be appropriate the next."

But David Relman, a microbiologist at Stanford University in California, says that scientist-led efforts are not enough to ensure that AI is used safely. "Natural scientists alone cannot represent the interests of the wider public."