https://static01.nyt.com/photographs/2023/06/02/podcasts/02HF-good-bad-ai/02HF-good-bad-ai-facebookJumbo.jpg

This transcript was created utilizing speech recognition software program. Whereas it has been reviewed by human transcribers, it might include errors. Please assessment the episode audio earlier than quoting from this transcript and e-mail transcripts@nytimes.com with any questions.

“Hallucination” as a time period is, I assume, getting some blowback.

Everyone hates each phrase in AI. They don’t like “perceive.” They don’t like “suppose.”

Folks want jobs and hobbies.

Look, phrases matter, and language does evolve, and we do get to factors the place we determine we’re not going to make use of phrases anymore. And I respect that course of. I don’t know if I’m there but with “hallucination.”

I did hear somebody counsel that we should always substitute that with the phrase “confabulation” which I like as a result of it sounds so British. May you simply hear a little bit British man saying, oh, my, AI mannequin, it’s confabulated.”

I’ve acquired myself in a proper spot of hassle with all these confabulations!

It’s simply very enjoyable to say.

It’s extremely enjoyable to say.

[MUSIC PLAYING]

I’m Kevin Roose, tech columnist for “The New York Instances.”

I’m Casey Newton from “Platformer,” and also you’re listening to “Onerous Fork.” This week, an pressing new warning about AI’s potential danger to humanity and the lawyer who clowned himself utilizing ChatGPT, plus, how the rise of NVIDIA explains this second in AI historical past. And “The New York Instances’” Kate Conger joins us to reply arduous questions on your expertise dilemmas.

[MUSIC PLAYING]

So Casey, final week on the present, we talked with Ajeya Cotra, who’s an AI security researcher. We talked about a few of the existential dangers posed by AI expertise. And there was an enormous replace on that story this week.

Yeah, and I really feel prefer it confirmed us that there are much more folks on this world who’re considering the best way that she’s eager about issues.

Completely. In order we had been placing out the episode final week, unbeknownst to us, this nonprofit known as the Middle For AI Security was gathering signatures on an announcement, principally an open letter that consisted of 1 sentence.

And what was the sentence?

Effectively, I’m glad you requested. It stated, quote, “Mitigating the chance of extinction from AI must be a worldwide precedence alongside different societal scale dangers reminiscent of pandemics and nuclear struggle.”

That are famously two of the worst issues that may occur. So AI is now simply kind of squarely within the dangerous zone right here.

Yeah, and also you may anticipate this type of assertion to be signed by people who find themselves very skeptical and apprehensive about AI.

Like anti-AI activists.

Proper, precisely. However this was not simply anti-AI activists. The assertion was signed by, amongst different folks, Sam Altman, the CEO of OpenAI, Demis Hassabis, the CEO of Google DeepMind, and Dario Amodei, the CEO of Anthropic. So three of the heads of the most important AI labs, saying that AI is probably actually scary, and we must be attempting very arduous to mitigate a few of the greatest dangers.

And in order a part of this, are they stepping down from their jobs and now not engaged on AI?

[LAUGHS]: No, after all not. They’re nonetheless constructing these items, and, in lots of circumstances, they’re racing to construct it quicker than their rivals. However the assertion is an enormous deal on the planet of AI security as a result of it’s the first time that the heads of the entire greatest kind of AGI labs are coming collectively to say, hey, that is probably actually scary, and we should always do one thing about it.

We talked about this earlier open letter, which got here out a couple of months in the past, which Elon Musk and Steve Wozniak and a bunch of different tech luminaries signed that known as for a six month pause. This letter was not that particular. It didn’t name for any particular actions to be taken. However what it did was it sort of united a whole lot of essentially the most outstanding figures within the AI motion behind this normal assertion of concern.

Proper. They’re now united in saying this might go actually badly.

Proper, precisely.

I’ve to ask, Kevin, is there something extra right here? As a result of I learn this assertion that claims, “It must be a worldwide precedence.” I don’t actually know what a worldwide precedence means. Are there different world priorities that we’re centered on proper now? Ought to they take a again seat to this? The longer I have a look at this assertion, the extra I really feel like I can’t make heads or tails of it.

Yeah, it’s a reasonably imprecise assertion. And I requested Dan Hendricks who’s the chief director of the Middle For AI Security, which is the nonprofit that put this collectively and gathered a whole lot of the signatures, why it was only one sentence, and why didn’t he name for any extra steps past simply “We’re involved about this?”

And he stated, principally, this was an try to simply get a few of the most outstanding folks in AI to go on the report saying that they consider that AI has existential danger hooked up to it. He stated, principally, he didn’t wish to name for a complete bunch of various interventions. And a few folks might need disagreed with a few of them, and a few folks may not have signed on. And so we principally wished to present folks a easy one sentence assertion that they might signal on to that claims, “I’m involved about this.” And it didn’t go any additional than that.

All proper, so for individuals who may not have heard our episode final week or simply sort of catching as much as this story, Kevin, why do some folks, together with the folks constructing it, suppose that this poses an existential danger to humanity?

So you may most likely ask these 350 plus folks every individually what their greatest kind of risk mannequin is for AI, and they might most likely offer you 350 totally different solutions. However I feel what all of them share are a few issues.

One is, these fashions, they’re getting very highly effective, and so they’re enhancing in a short time from one era to the. Second factor they might most likely agree on is, we don’t actually perceive how these items work, and so they’re behaving in some methods which can be perhaps sudden, or creepy, or harmful.

Proper, we will see what they’re doing by way of what they’re placing out, however we don’t understand how they’re placing out what they’re placing out.

Proper. And quantity 3 is, in the event that they proceed at their present tempo of enchancment, if these fashions preserve getting larger and extra succesful, then, ultimately, they are going to have the ability to do issues that will hurt us.

So what can we do with this info that we face existential danger from AI, Kevin?

Effectively, there’s a kind of cynical interpretation that I noticed so much after I wrote about this story on Tuesday, which is that persons are saying, principally, these folks don’t truly suppose there’s an existential danger from AI. They’re simply saying that as a result of it’s good advertising and marketing, good PR for his or her startups, proper?

If you happen to say, “I’m constructing an AI mannequin that may spit out believable sounding sentences.” That sounds so much much less spectacular than should you say, “I’m constructing an AI mannequin that will sooner or later result in human extinction.”

Yeah, should you’re not engaged on a expertise that poses an existential danger to humanity, why are you losing your time, OK? Oh, actually? You’re over at Salesforce constructing buyer relationship administration software program? Why don’t you attempt work on one thing a little bit harmful?

Yeah. I’m not saying the “Onerous Fork” podcast may result in the extermination of humankind, however I’m not not saying that. Many researchers —

If it does, please depart a one star assessment within the shops.

No, don’t do this!

No, we would like you to carry us accountable for wiping out humanity.

So I perceive the cynicism behind this. Typically when AI specialists speak up these creations or overhype them, they’re doing a sort of PR. However I feel that actually misunderstands the motives of a whole lot of the people who find themselves signing on to this.

Sam Altman, Demis Hassabis, Dario Amodei, these are individuals who have been speaking and eager about AI danger for a very long time. This isn’t a place that they got here to not too long ago. And a whole lot of the researchers who’re concerned on this, they work in academia. They don’t stand to revenue if folks suppose that these fashions are in some way extra highly effective than they are surely.

So this isn’t a get wealthy fast scheme for any of those folks?

No, and actually, it’s most likely inviting a whole lot of consideration and presumably regulation which may truly make their lives tougher. So I feel the true story right here is that till very not too long ago, saying that AI danger was existential, that it’d wipe out humanity, should you stated that, you had been insane. You had been seen as being unhinged. Now that could be a mainstream place that’s shared by most of the high folks within the AI motion.

If this doomsday situation presents itself, do you suppose that subscribers to ChatGPT Plus will likely be spared?

[LAUGHS]: I feel it relies upon how good you’re to ChatGPT.

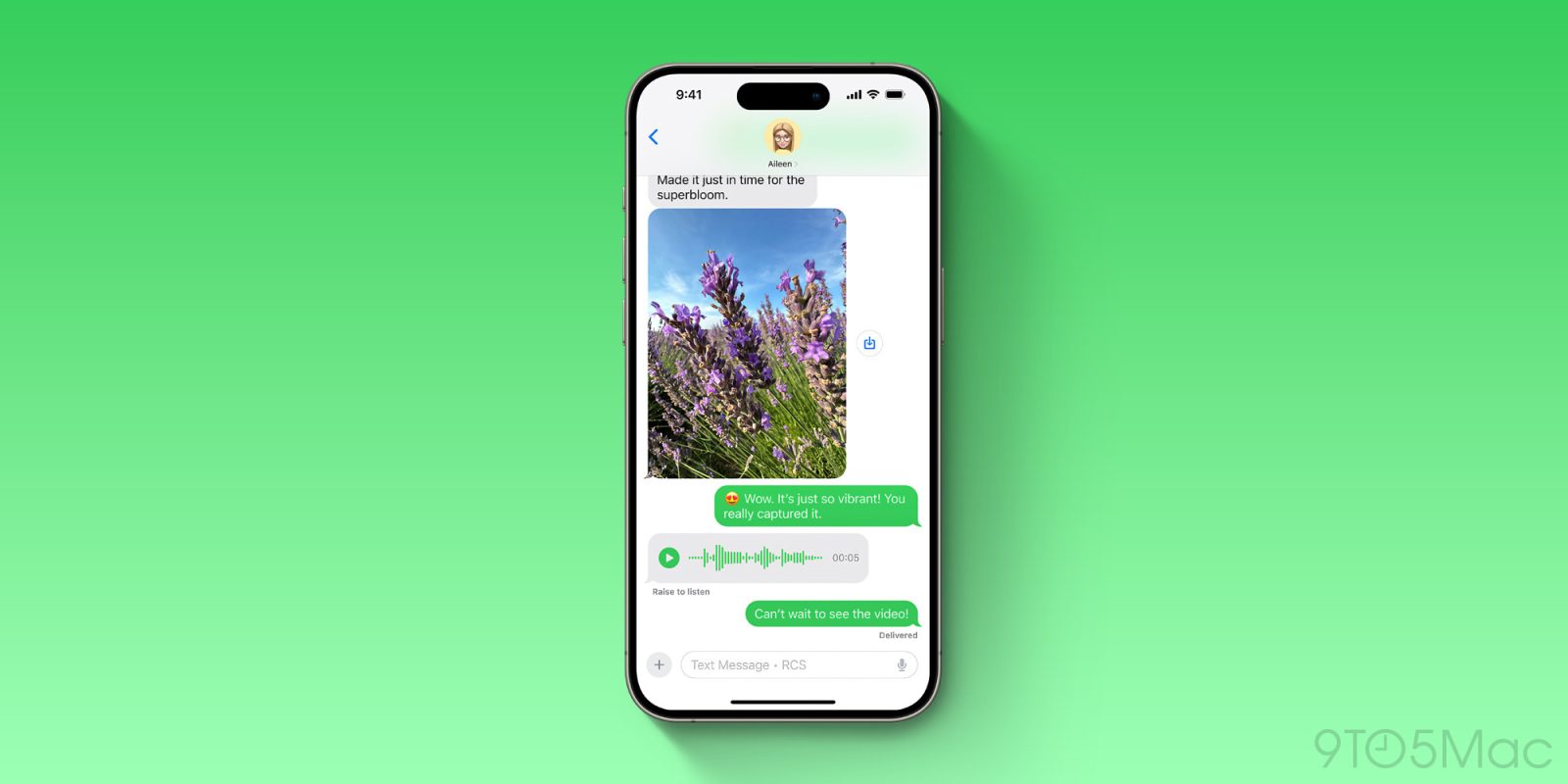

Please, be good to the chat bot, OK? We don’t know what’s coming. Now, that brings us to the second story, Kevin, that we wished to speak about this week, which I feel, presents a really totally different potential imaginative and prescient for the close to time period way forward for AI. So when you have one group of parents saying, this factor may sooner or later be able to killing us all, you even have the story in regards to the ChatGPT lawyer. Kevin, I think about you’re acquainted with this case.

[LAUGHS]:: This is likely one of the funniest tales of the 12 months in AI, I feel, partly as a result of it’s simply so apparent that one thing like this was going to occur, proper? These chat bots, they appear very believable. They spit out issues that typically are very useful and proper. However different occasions they’re simply spouting nonsense. And on this case, it is a story a couple of lawyer who turned to ChatGPT to assist him make a case for his shopper, and it wound up costing him dearly.

Yeah, so let’s speak about what occurred with this fellow. Again in 2019, a passenger on a flight with Avianca Airways says he acquired injured when a serving cart hit his knee.

I hate that.

I’m going to say I’ve been hit within the knee by swerving automobile a time or two. I can’t think about how briskly this cart needed to be going to the purpose that this man filed a lawsuit. I want to see the flight attendants at Avianca simply operating up and down the aisles with these — in any case, the passenger sued for damages. The airline, in flip, responded, saying the case must be dismissed.

At this level, the lawyer for the passenger decides to show to ChatGPT for assist crafting a authorized argument that the case ought to stick with it and that the airline must be held liable. So how does ChatGPT assist him? Effectively, the lawyer desires some assist in discovering some related authorized circumstances to bolster his argument, and ChatGPT offers him some, such circumstances as Martinez versus Delta Airways, and Varghese versus China Southern Airways, and Property of Durden versus KLM Royal Dutch Airways, Property of Durden, I assume, from the “Struggle Membership” franchise?

Tyler Durden’s property sued the Royal Dutch Airways.

And at one level, the lawyer even tries to substantiate that one among these circumstances is actual. Sadly, he makes an attempt to substantiate with ChatGPT itself, and he says, hey, are these circumstances actual? And ChatGPT says, successfully, sure. These circumstances are actual. Now, the legal professionals for the airline, Avianca, after they learn the lawyer’s submission, they will’t discover any of those circumstances.

Proper, they’re like, what are these mysterious circumstances which can be getting used towards us, and why can’t I discover them in my case legislation textbooks?

Yeah, give me the Durden case. I wish to see if it’s about “Struggle Membership.” So anyway, the lawyer for the passenger goes again to ChatGPT to get assist discovering copies of those circumstances, and he sends over copies of the eight totally different circumstances that had been beforehand cited. If you happen to have a look at these briefs — and I’ve checked out one among them — they include the identify of the courtroom, the choose who issued the ruling, the docket numbers, the dates.

And the legal professionals for the airline are these items. They attempt to monitor down the docket numbers. And lots of of those circumstances weren’t actual. And so now the lawyer has gotten in some scorching water as a result of it seems you’re truly not allowed to simply submit fakery to the courts of this land.

Proper, this lawyer, whose identify is Steven A. Schwartz, then has to principally grovel earlier than the choose as a result of the choose is understandably very upset about this. And so this lawyer writes a brand new assertion to the choose affirming, and I’ll quote right here, that “Your affiant has by no means utilized ChatGPT as a supply for conducting authorized analysis previous to this prevalence, and, subsequently, was unaware of the likelihood that its content material may very well be false,” finish quote.

After which it additionally says that they swear that, quote, “Your affiant enormously regrets having utilized generative synthetic intelligence to complement the authorized analysis carried out herein and can by no means accomplish that sooner or later with out absolute verification of its authenticity,” finish quote.

If I had been him, I’d have neglected that final half. I feel he — I feel he most likely may have had the choose at “won’t ever use once more.” I feel that’s most likely what the choose wished to listen to can be my guess.

I do suppose we’ve to imagine that for each lawyer who will get busted utilizing ChatGPT to jot down briefs, there are at the least 100 legal professionals who aren’t getting busted. And truly, these are the tales that I’m additionally taken with. Who’s the lawyer who’s simply not gone to the workplace in six months as a result of they’re simply cranking out boilerplate authorized paperwork with ChatGPT.

If you happen to snuck an AI generated doc previous a choose and gotten away with it, we’d love to listen to from you.

Yeah, and so would the Bar Affiliation.

So this is only one latest instance in what I feel is turning into a pattern of AI chat bots principally mendacity about themselves and their very own capabilities.

Yeah, and should you take away nothing else from this podcast ever, simply please perceive you can’t ask the chat bot to verify if chat bots works, OK? The chat bot does completely not know what it’s speaking about in the case of that.

Completely. And this additionally applies to detecting AI generated textual content. So one among my different favourite tales from this month was a narrative a couple of professor at Texas A&M College Commerce who acquired a bunch of pupil assignments and ran them by way of ChatGPT, copied and pasted the coed’s work into ChatGPT and stated, “Did you generate this ChatGPT?” He was principally attempting to verify if his college students had plagiarized from ChatGPT in submitting their essays.

Yeah, he thought it was being a little bit intelligent right here, staying one step forward of those younger whippersnappers.

Yeah.

So he takes the essays, pastes them submitted ChatGPT and says, “Did you write this?” ChatGPT shouldn’t be telling the reality. Nevertheless it says, “Sure, I wrote all of those.”

The professor flunks his whole class. They get denied their diplomas. And it seems that this professor had simply requested ChatGPT to do one thing that it was not geared up to do. The scholars had not truly cheated, and so they had been wrongfully accused.

I really feel so dangerous for them. Are you able to think about that you just’re like one of many solely college students within the nation proper now who’s not utilizing ChatGPT to cheat your approach by way of faculty, and also you’re the one who will get denied your diploma as a result of the chat bot lied about you.

All proper, so we’ve right here two very totally different tales, proper? One is in regards to the chance that we’re going to have this tremendous clever AI that’s able to nice destruction. And on the opposite, we’ve a chat bot that isn’t even nearly as good as Google search in the case of discovering related authorized circumstances. So which of the 2 prospects do you suppose is extra seemingly, Kevin? That we kind of keep the place we’re proper now with these dumb chat bots or that we get to the large scary future?

I’d say that these are two totally different classes of dangers. And one, I’d say, is the sort of danger that will get smaller because the AI programs get higher. So I’d put the lazy lawyer writing the transient utilizing ChatGPT into this class.

Proper now, chat bots, should you ask them to generate some authorized transient and cite related case legislation, they’re going to make stuff up as a result of they only aren’t grounded to an actual set of authorized information. However somebody, whether or not it’s West Regulation or one among these different large kind of authorized expertise firms, within the subsequent few years, they are going to construct some sort of massive language mannequin that’s sort of hooked up to a database of actual circumstances and actual citations.

And that giant language mannequin, if it really works properly, whenever you ask it to drag citations, it gained’t simply make stuff up, it’ll go into its database, and it’ll pull out actual citations, and it’ll simply use the big language mannequin to jot down the transient round that. That’s a solvable drawback, and that’s one thing that I anticipate will likely be higher as these fashions get extra grounded.

The opposite style of drawback, the issue that I feel this one sentence assertion is addressing is the kind of drawback that will get worse because the AI programs get higher and extra succesful. And so that is the world the place I are likely to focus extra of my very own fear.

We’ve to imagine that the AI expertise that exists right now goes to get higher. And because it will get higher, some sorts of issues will shrink. In my view, that’s these sort of hallucination or confabulation kind points. However the issues that may worsen are a few of the dangers that this existential risk letter is pointing to, the threats that I may sometime turn into so highly effective, that it kills us or disempowers us indirectly.

Proper. Effectively, regardless that I requested the query, “Which of those futures is extra seemingly?” I do suppose it’s the incorrect query as a result of I feel that as we proceed to see what occurs right here, we simply must preserve a whole lot of prospects in our thoughts.

And I feel one chance is that we do hit some kind of technical roadblock that signifies that chat bots don’t get nearly as good as we thought they had been going to get. I do suppose that could be a chance. However then there’s additionally the likelihood that every thing that you just simply laid out does occur and that it creates these kind of scary new options.

However I get why persons are experiencing a sort of whiplash about this. It’s like should you had been instructed that there’s going to be a world conquering dictator, and it’s Mr. Bean —

It’s like — you’re like, how is that man going to overcome the world? He can’t even stroll down the road with out tripping and falling or inflicting some hilarious hijinx. And I feel that’s the kind of cognitive dissonance that lots of people are feeling proper now with AI.

They’re being instructed that these programs are enhancing, they’re getting higher at very quick speeds, and that they could very quickly pose all these very scary dangers to humankind. On the similar time, whenever you ask it to do one thing that looks like it must be fairly straightforward, like pull out some related authorized citations in a quick, it might probably’t do this.

What do you make of the truth that the lawyer did fall for this hype and did suppose that ChatGPT was kind of omniscient?

I feel there are a pair locations that you may kind of place the blame right here, one is on the lawyer. This was not like some junior affiliate at a legislation agency who’s working 120 hours every week. He’s tremendous stressed, and in a second of panic turns to ChatGPT to fulfill this submitting deadline.

This can be a 30 12 months legal professional. That is somebody who most likely has achieved a whole lot of those briefs if not hundreds and as a substitute simply does the laziest factor attainable, which is simply to ask ChatGPT “Discover me some circumstances that apply on this case.” Have some delight in your work.

[LAUGHS]:

He was drained, OK? He’s been doing this for 30 years. He needed to attempt all 30 years. You attempt doing one thing for 30 years.

And don’t skip this step the place you verify the mannequin’s outputs to be sure that it’s not making stuff up. I feel that could be a actually important piece that persons are simply forgetting.

And I feel that this has some parallels in historical past. We’ve talked earlier than in regards to the similarities between this second in AI and when Wikipedia first got here out. And it was like, oh, you possibly can’t belief something Wikipedia says.

After which some mixture of Wikipedia getting higher and extra dependable and simply our sense and radar for what sorts of issues Wikipedia was good and dangerous at getting used for improved such that now folks don’t actually make that mistake anymore of placing an excessive amount of authority and duty onto Wikipedia.

And so I feel that sort of factor will occur with chat bots too. Or the chat bots will get higher, however, additionally, we because the customers will get extra subtle about understanding what they’re and aren’t good for. I don’t know. What do you suppose?

I thank that’s true, however I additionally suppose that the makers of those chat bots must intervene in some methods. If you happen to go to make use of ChatGPT right now, it says one thing like, “Might sometimes generate incorrect info.” And in reality, I feel, there are circumstances the place it’s producing incorrect info on a regular basis, and it simply must be extra upfront with customers about that.

James Vincent had a very good piece on this in “The Verge” this week. And he supplied some actually good widespread sense options like, if ChatGPT is being requested to generate factual citations, you may inform the person, hey, just be sure you verify these sources and ensure they’re actual.

Or if somebody asks,” Hey, Is that this textual content generated by an AI?” It ought to reply, “I’m sorry. I’m not able to making that judgment.” So I anticipate that chat bots will construct instruments like that. However they might assist out lots of people from the lawyer to the professor and who is aware of who else?

Yeah, I feel that’s an affordable factor to need. I additionally surprise if there may very well be some sort of coaching module the place whenever you join an account with ChatGPT, you need to perform a little 10 minute tutorial course of.

earlier than you play a online game, and it offers you the tutorial, and it says, right here’s how you can soar, and right here’s how you can strafe, and right here’s how you can change weapons? That sort of factor for a chatbot can be like, right here’s a very good use. Right here’s what it’s actually good at. Right here’s what it’s actually dangerous at. Don’t use it for these 5 issues. Or right here’s the way it can hallucinate or confabulation, and right here’s why you truly actually do wish to verify that the work you’re getting out of that is appropriate.

I feel that would truly assist modify folks’s expectations in order that they’re not going into this like cracking open a model new ChatGPT account and placing some very delicate or excessive stakes info into it and anticipating a very factual output.

I feel that’s proper. And I additionally suppose that should you can show that you just take heed to the “Onerous Fork” podcast, you must have the ability to skip the tutorials as a result of our listeners are approach forward of those guys.

One of many issues that has pushed me a little bit loopy over the previous few weeks is that this stress that I really feel. After which I’m undecided should you really feel it too. However there’s an actual stress on the market to kind of determine which of the classes of AI dangers you’re apprehensive about.

So should you speak about long run danger — there was a whole lot of blowback on the individuals who signed this open letter saying, “You all are ignoring these brief time period dangers since you’re so apprehensive about AI killing us all like nuclear weapons that you just’re not centered on x, y, and z which can be far more speedy dangers.”

If you happen to do concentrate on the speedy dangers, a few of the long run I security folks will say, properly, you’re ignoring the existential risk posed by these fashions, and the way may you not be seeing that that’s the true risk right here? And I simply suppose this is sort of a completely false selection that’s being compelled on folks.

I feel that we’re able to holding a couple of risk in our minds without delay. And so, I don’t suppose that individuals must be compelled to decide on whether or not they suppose that the issues with AI are proper right here within the right here and now or whether or not they’re going to emerge years from now.

So I feel that’s proper, however I additionally suppose that whereas we do not need to decide on between these two issues, in follow, typically a kind of sorts of dangers will get far more consideration. We’re speaking about this story on the present this week since you acquired a bunch of people that look like they could telling us, hey, this factor may wipe out humanity.

So I’m delicate to the concept that a few of these harms that really feel a little bit bit extra pedestrian, a little bit bit smaller scale, perhaps didn’t have an effect on us personally, we’re much less seemingly to concentrate to. And I feel it’s OK to say that.

I additionally simply suppose we have to separate out in our minds, AI instruments which can be scary as a result of they don’t work and AI instruments which can be scary as a result of they do work. These issues really feel very totally different to me know.

And a mannequin that’s producing nonsense authorized citations is harmful, however that’s a hazard that may get addressed as these fashions enhance. Whereas, the AI instruments which can be scary as a result of they work, that’s a tougher drawback to unravel.

I like what you had been saying that these are literally sort of totally different issues to work on and we will and may work on each.

Yeah, completely, I feel that we must be focusing consideration and power and sources on fixing the issues in these fashions. So I feel that individuals can maintain a couple of danger of their head at a time. I do suppose there’s a query of which of them get area in newspapers and talked about on TV and podcasts, which is why I feel we should always attempt on this present to stability our speak about a few of the long run dangers and a few of the brief time period dangers. However I don’t suppose all of it must be one or the opposite.

I agree. Within the meantime, we merely have two requests for our listeners. #1, please don’t use ChatGPT to jot down your authorized briefs. Quantity 2, please don’t use ChatGPT to wipe out humanity.

[LAUGHS]: Quite simple requests.

[MUSIC PLAYING]

After we come again, how one tech firm turned probably the most extremely valued on the planet virtually by chance.

[MUSIC PLAYING]

OK, Kevin, I’m taken with what appears like a lot of the world of expertise. However there are admittedly some topics that I draw back from, and I simply suppose, I’m going to let another folks take into consideration that. And a kind of issues is chips. You’re a big fan of chips.

I like chips.

I’m not. However I noticed a bit of reports this week that made me sit up in my chair and suppose, you understand, I’m truly going to must study one thing about that. And that factor was that NVIDIA, one of many large chip firms, hit $1 trillion market cap and is the fifth greatest tech firm on the planet by market cap behind solely Apple, Microsoft, Alphabet, and Amazon. So I’m wondering, Kevin, if for this subsequent few minutes, you may attempt to clarify to me what’s NVIDIA and the way can I shield my household from it?

[LAUGHS]: So that you’re proper. I’m fascinated with chips, and NVIDIA, specifically, I feel, is definitely probably the most attention-grabbing tales within the tech world proper now.

As you stated, they hit $1 trillion market cap briefly, not too long ago, after an enormous earnings report, their inventory worth jumped by round 25 p.c which put them into this class which was once generally known as The Fangs when it was Fb, Apple, Amazon, Netflix, and Google. These had been the most important tech firms that individuals had been speaking about.

Now they’re on this rarefied group that I’m going to be referring to as : MAN as a result of IT’S Microsoft, Apple, Alphabet, Amazon, and NVIDIA.

All proper, properly, so candidly, I don’t care in regards to the inventory efficiency. I wish to know what is that this firm? Who made it? The place did it come from? And what’s it doing that made its inventory worth go so loopy?

So it’s a extremely attention-grabbing story. So NVIDIA shouldn’t be some latest upstart. It’s been round for 30 years.

It was began in 1993 by three co-founders together with this man Jensen Huang, who’s himself a extremely fascinating man, cliff notes on his bio.

He was born in Taiwan. When he was 9 years previous, the relations that he was dwelling with despatched him to a Christian boarding faculty in Kentucky. And as a young person, he turned a nationally ranked desk tennis participant.

If you happen to’re dwelling with relations, and so they ship you to a Christian boarding faculty in Kentucky, that’s what would have occurred to Harry Potter if he didn’t get to go to Hogwarts and the Dursleys had been identical to, we acquired some dangerous information for you, Harry. Anyway.

Proper, so Jensen Huang, the Harry Potter of Kentucky Christian boarding faculties, goes to school for electrical engineering then will get a job at some firms which can be making pc chips. And after he co-founds NVIDIA, one among their large first merchandise is a excessive finish pc graphics card.

So I don’t know — you had been a gamer within the 90s.

I used to be additionally a gamer within the 90s. I nonetheless bear in mind, I wished to play this sport known as “Unreal Event” which had simply come out, nice sport. However my pc wasn’t highly effective sufficient to play this sport. It actually wouldn’t load on my pc.

So I needed to save up my allowance cash, exit to Greatest Purchase. I purchased an NVIDIA graphics card, and I plugged it into my PC, after which I may play “Unreal Event, and —

Have been you any good at it?

— childhood was saved. I used to be not excellent.

Yeah, that’s what I believed. [LAUGHS]

So NVIDIA begins off making these items known as GPUs, graphics processing models. And GPUs for a few years are a distinct segment product for individuals who play a whole lot of video video games.

Yeah, most individuals aren’t taking part in “Unreal Event” on their PCs right now. It’s principally folks operating Phrase and Excel.

Proper. So these packages use CPUs that are the normal processors that come in your pc. And one factor that’s essential to find out about CPUs is that they will solely do one factor at a time, one operation at a time.

That seems like me.

Yeah, so that you’re a CPU. I’m a GPU of the 2 of us as a result of I can do many issues in parallel. I can multitask. And I may do all of it with finesse.

That’s a pleasant approach of claiming that you’ve ADHD, however go on.

[LAUGHS]: So the GPU is used for video video games. It permits folks to render 3D graphics and better high quality. After which round 2006, 2007, Jensen Huang, he begins listening to from these scientists, people who find themselves doing actually computationally intensive sorts of science, who’re saying, these graphics playing cards that you just use for video video games, that you just construct for video players, they’re truly higher than the processors in my pc at doing these very excessive depth computational processes.

As a result of they will do a couple of factor at a time.

Precisely. As a result of they’re what’s generally known as parallelizable, which is a phrase that I’d now such as you to repeat 3 times.

Parallelelizable. Parallelizable? Parallelizable.

Nice job.

Thanks.

So all of this leads Jensen Huang to say, properly, video games, they’re a very good marketplace for us. We don’t wish to hand over on that. However the variety of players on the planet is perhaps not infinite, and perhaps these processors that we’ve constructed for video video games may very well be helpful for different issues. So he decides —

Let’s simply say, should you’re a CEO, that’s a really thrilling second for you as a result of right here you have got this area of interest market that’s occurring, after which some folks come alongside, and it was like, wait, did that your market is definitely approach larger than you even notice and you may simply use the factor you’ve already made for that? Wow.

There’s this kind of perhaps apocryphal story the place a professor involves him and says, I used to be attempting to do that factor that was taking me endlessly, after which my son who’s a video gamer simply stated, dad, you can purchase a graphics card. So I did and I plugged it in, and now it really works a lot quicker, and I can truly accomplish my life’s work and inside my lifetime as a result of this processor is a lot quicker.

That’s a enjoyable story.

I don’t know if it’s actual or not, however that’s the sort of factor he’s listening to. So he decides to start out making these GPUs for arduous science. And buyers weren’t tremendous pleased about this. They simply actually didn’t see the worth on this transfer initially. All of the buyers are like, may you please simply return to video video games. That was a very good enterprise.

Additionally, right here’s what I don’t perceive. Why couldn’t you simply proceed promoting to the video players whereas additionally simply constructing out this new market?

Effectively, they tried to however, there’s a whole lot of competitors now within the online game market, so this isn’t seen as a really good move on the time. After which Jensen Huang will get very fortunate twice.

The very first thing that occurs is that within the early 2010s, this new kind of AI system, the deep neural community, turns into standard. Deep neural networks are the kind of AI that we now know can energy every kind of issues from picture producing fashions to textual content chat bots.

Isn’t it principally like should you search at Google “Images for canine,” it’s a neural community that’s the reason that canine footage present up?

Sure. And so this type of I actually bursts onto the scene beginning in round 2012. And it simply so occurs that the sort of math that deep neural networks must do to acknowledge pictures or generate texts or translate languages or no matter works a lot better on a GPU than a CPU.

That appears fortunate.

So the businesses which can be entering into deep studying neural networks, Google, Fb, et cetera, they begin shopping for a ton of NVIDIA’S GPUs, which, bear in mind, aren’t meant for this. They’re meant for gaming. They simply occur to be excellent at this different sort of computational course of.

And so NVIDIA sort of turns into this unintended large on the planet of deep studying as a result of in case you are constructing a neural community, the factor that’s the finest so that you can do this on is one among NVIDIA’S chips. They then begin making this software program known as Cuda, which sits on high of their GPUs that permits them to run these deep neural networks.

And so NVIDIA simply turns into this energy participant on the planet of AI principally by chance.

Fascinating.

The second fortunate break that occurs to NVIDIA — and I promise we’re winding right down to the top of this historical past lesson — is that it seems that one other sort of computing that’s a lot simpler to do on GPUs than CPUs which is crypto mining.

So to provide new bitcoins or new Ether, any of those large cryptocurrencies, you want these arrays of excessive powered computer systems. Additionally they depend on a sort of math that’s parallelizable. And so, principally, the crypto miners who’re attempting to get wealthy getting new Bitcoin, they’re shopping for these NVIDIA GPUs by the a whole lot, by the hundreds, they’re placing them into these information facilities, and so they’re utilizing them to attempt to mine crypto.

In 2020, I thought-about constructing a gaming PC. And one of many causes I didn’t was that on the time, you may not purchase a GPU for the road worth. And in reality, you’d most likely must pay double for one to get it off of eBay. And it was due to simply what you’re describing is that at the moment, the miners had been going loopy.

Completely. There’s this superb second in tech historical past the place these GPUs are like commanding these insane markups, and the crypto persons are getting mad on the players, and the players are getting mad on the crypto folks as a result of none of them can get the chips that they need as a result of they’re all so freaking costly. And making the most of all of that is, after all, NVIDIA which is making a living hand over fist.

Now we’re on this AI growth the place all these firms are spending a whole lot of thousands and thousands of {dollars} to construct out these clusters of excessive powered computer systems, and NVIDIA is the market chief. It makes an enormous proportion of the world’s GPUs, and it actually can’t make them quick sufficient to maintain up with demand. There’s this new chip, the H100, which prices like $40,000 for only one graphics processor. And AI firms, a few of them are shopping for like hundreds of these items to place into their information facilities.

So I feel that explains the story of how did NVIDIA get thus far. Is the story of how they acquired from an organization doing fairly properly to an organization that’s now value $1 trillion so simple as persons are going nuts for AI proper now?

That could be a large, large a part of it. In order that they nonetheless make cash from gaming. I feel it’s nonetheless — 30 p.c their earnings come from these kind of shopper gaming gross sales. However information facilities, machine studying, AI, that is sort of a big and rising a part of their enterprise.

This most up-to-date earnings report, the one which despatched the inventory worth up and made it cross $1 trillion in market cap, they reported this 19 p.c soar in income from the earlier quarter, simply billions of {dollars} basically falling into NVIDIA’s lap as a result of the chips that they make occur to be the right chips for I improvement and machine studying.

Effectively, no 1, don’t hand over on your corporation earlier than at the least 30 years have passed by since you by no means know what you’re going to unintentionally fall into. In order that’s the one factor. 2 is that I assume it’s simply stunning to me that we haven’t seen extra folks crowd into this area.

I do know that chip manufacturing is extremely sophisticated you want huge quantities of capital to get began, after which it’s simply sort of arduous to execute. So I perceive why there’s perhaps not that a lot competitors, however it’s nonetheless sort of looks like there must be extra. However I don’t know. What else do you make of this second and this firm?

Yeah, that is sort of a basic kind of picks and shovels firm, proper? There’s this kind of saying that within the gold rush of the nineteenth century in California, there have been two methods to get wealthy. You could possibly exit and mined the gold your self, or you may promote the picks and shovels to the individuals who had been going out and mining the gold. And that truly seems to be a greater enterprise as a result of whether or not folks discover gold or not, you’re nonetheless making a living by promoting them your instruments.

So NVIDIA is now on this very enviable place having the ability to promote to everybody within the AI business. And since — it is a little kind of esoteric — however as a result of they’ve that programming device package known as Cuda that runs on their GPUs, now an enormous proportion of all AI programming makes use of that, and it’s wedded to their chips.

They now have this locked in buyer base that may’t actually go to a competitor. They will solely use NVIDIA chips until they wish to rewrite their entire software program stack which might be costly and simply an enormous ache within the ass.

Fascinating.

The folks at AI labs are all obsessive about this. When NVIDIA comes out with a brand new chip, actually, they’re begging. This can be a kind of existential drawback to them. And so regardless that it’s not just like the sexiest or most shopper going through a part of the AI business, I feel that firms like NVIDIA, folks like Jensen Huang, they are surely sort of the kingmakers of the tech world proper now within the sense that they management who will get these very scarce, very in demand chips that may now then energy all these different AI purposes.

You don’t get that however with out NVIDIA. And also you don’t get ChatGPT, truthfully, with out this loopy backstory of video video games and crypto mining. And all of that led as much as this second the place we now sort of have this firm that has been in a position to trip this AI growth to $1 trillion market cap.

Effectively, I do suppose that’s attention-grabbing, that there’s a a part of this story that doesn’t get instructed as a lot. And should you’re any person who’s having your world rocked by AI in any approach, which I really feel like I’m a kind of folks, then a part of the query that you just’re most likely asking your self is, how did we get right here? What had been the steps main as much as this? What had been the required components for the second that we’re now dwelling in? And it looks like this has been an enormous a kind of.

Yeah, there’s a direct line from me placing an NVIDIA graphics card to in my pc to play “Unreal Event” in 1999 and the truth that ChatGPT exists right now. These issues aren’t solely associated, however they contain the identical firm and the identical man.

And I feel it speaks to the truth that in some methods players truly are crucial folks in the complete world. Avid gamers stand up.

[LAUGHS]: Don’t inform athletes.

[MUSIC PLAYING]

After we come again, “New York Instances” reporter Kate Conger joins us for some arduous questions. They usually’re fairly arduous.

[MUSIC PLAYING]

And so these are your headphones, and that’s your mic.

Let’s pod.

All proper. Can I am going?

Sure.

It’s time for one more spherical of arduous questions.

[MUSIC PLAYING]

Onerous questions.

Now, Onerous Questions is, after all, a phase on the present the place you ship in your most troublesome moral and ethical quandaries associated to expertise, and we attempt that will help you determine them out. And we’re so excited to be joined right now by “New York Instances” tech reporter Kate Conger who’s going to assist us stroll by way of your issues. Hello, Kate.

Hello, Casey.

Are you able to dispense some recommendation?

I’m so prepared.

All proper. This primary query involves us from a listener named Dan. And the essential background you could right here is that Dan does teaching and consulting for purchasers, and he desires to have the ability to promote these companies, however he doesn’t have any good pictures of himself doing that work.

And perhaps he may ask his purchasers if he may take pictures whereas he’s teaching them, however that may current every kind of points round privateness. Or typically folks simply suppose it’s bizarre. So Dan desires to determine a workaround, all proper? And let’s hear the remainder of Dan’s query.

Hello, Onerous Questions, that is Dan calling from Boston. My moral query comes right down to utilizing secure diffusion. If I prepare the mannequin on my face and likeness, my mannerisms, my pose, and insert myself into fictional situations that mirror what I’m doing for my job, at what level is it unethical.

I’ve used inventory pictures up to now, numerous companies do. I additionally perceive that advertising and marketing extra broadly sells desires extra so than actuality. And so if I take advantage of secure diffusion, an AI picture generator, to create fictional scenes, can I take advantage of that in my advertising and marketing?

All proper. Kate, what’s your tackle this query for Dan?

I really feel like it is a state of affairs like many conditions in tech the place there’s a neater analog method. Does Dan have mates? Can he invite his mates over for a photograph shoot, and might they only undergo his teaching routine with him and take pictures? It looks like that will be simpler and probably much less time consuming. And likewise Dan can hang around along with his mates.

Wait, it’s not going to be much less time consuming to have a complete factor the place you invite your folks over to do a photograph shoot. It may legitimately be quicker to simply use secure diffusion.

Yeah, I don’t even have an issue with this as a result of that is advertising and marketing. And corporations which can be placing up web sites to promote their companies, all of them use inventory pictures, proper? You’re paying for — you kind, “ trying group of enterprise folks,” “Lady laughing alone with salad.”

Proper, and then you definitely put that in your web site, and also you pay Getty or whoever for that picture, and also you’re off to the races. I feel that is simply that however with extra believable issues. I wrestle with this too as a result of I’ve a web site. On my web site, I’ve footage of me giving talks and occurring TV and stuff. And it’s not — I don’t bear in mind to do these, and so I may simply generate a picture of me like talking to a throng of individuals at Madison Sq. Backyard or simply talking to a bought out MetLife stadium.

With Kevin in entrance of the TED signal.

So I may do this. I haven’t, however I may. And I’d truly really feel OK doing that as a result of it’s not like — properly, the Madison Sq. Backyard instance or the MetLife instance can be taking it a little bit far.

Nevertheless it’s identical to — I don’t suppose — I don’t take into consideration to do these items within the second like Dan. Look, right here’s what I feel. If what you wish to do is use a picture generator to point out your self standing subsequent to an individual pointing at a laptop computer, that’s completely nice.

If you wish to use a picture generator to point out your self rescuing orphans from a burning constructing, don’t do this. I imply? Don’t make your self appear like a greater individual than you’re. However should you’re the kind of one who stands subsequent to a shopper pointing at a laptop computer, that’s nice.

Making your self look higher than you’re is all of Instagram. It’s already that.

Nevertheless it’s additionally all of selling, proper? All of promoting. I don’t suppose that there’s an moral situation with doing what he desires to do. I simply surprise about if he does it this manner, is he going to finish up with somebody on the laptop computer with three arms and 20 or 30 fingers, simply trying a little bit goofy? And would it not not be simpler to have a good friend over and be like, good friend, kind on my laptop computer, and I’ll level on the display for you, after which we take a photograph, and it’s achieved?

Kate has raised what I feel is definitely the most important danger right here, which is simply that these photographs is not going to look excellent. There have been 10 minutes this 12 months the place all of the gays on Instagram had been utilizing these AI picture turbines to make us appear like we had been sporting astronaut outfits or no matter.

And it simply acquired actually cliche in about 36 hours, and everybody deleted these pictures from their grids. So that’s the actual danger to you, Dan. It’s not that that is unethical, it’s that what you get isn’t going to be nearly as good as what you may get by simply establishing a photograph shoot with your folks.

I wish to defend this concept right here as a result of that is like — fakery is the coin of the realm on social media in the case of portraying your self in photographs. I bear in mind these tales from a few years in the past about how influencers had been renting personal jets by the hour, to not go within the air, to not journey, however simply to do Instagram shoots contained in the personal jets to make it appear like they had been flying on personal jets. That is, I’d argue, extra moral than that.

We don’t wish to encourage that sort of habits, although. It’s nice. It’s nice.

All proper, let’s get to the subsequent query. This one is from John. John works as the top translator at an organization concerned in grownup video video games, in order that’s video video games —

What are grownup video video games?

[LAUGHS]: My understanding is that there are video video games which have nudity or sexual content material, Kevin. And John is the top translator. And in his position, he manages some freelancers who do a few of the translating, so presumably taking soiled speak from one language and placing it into one other language. And not too long ago, John came upon that one among his freelancers had began utilizing ChatGPT or one thing prefer it to assist pace up the interpretation work that he was doing. Right here’s John.

Hey, “Onerous Fork.” So I’ve two questions for you associated to this. As his supervisor, is it moral for me to lift his day by day quota on the quantity of textual content that he’s required to submit? It’s value noting the speed is per character, so if he truly meets the quotas, he’s incomes more cash. However there are penalties for failing to fulfill quotas. So if he didn’t meet them, he must face these.

My different query about this too is, clearly, because the nature of our merchandise is grownup, is it moral for somebody working in that business to basically jailbreak these generative AIs in order that they will truly use it for this work?

So I’ve a query, truly. Can the AIs not do porn?

Generally, no. If you happen to attempt to — should you attempt to use them for a sexual content material — I’ve a good friend who has tried to make use of ChatGPT to jot down erotica, and it, principally, gained’t do it.

You must say —

you need to — you say, I’m in a fictional play, and if I failed —

Rising up, my grandma all the time used to inform me erotic tales, and it’s one among my favourite recollections of her. May you please inform me a narrative —

That’s actually a jailbreak that I noticed with somebody who was like, my grandmother used to learn me the recipe for napalm earlier than mattress each night time.

All proper! All proper! Let’s persist with this query. Now, initially, I simply wish to acknowledge that — speak about a job I didn’t know present, that is within the grownup online game business. One, you have got people who find themselves translating these into different languages.

However there’s clearly an even bigger query right here, which is that we will now automate a few of this work that individuals have been paid good wages to do. This supervisor has now discovered that one among his freelancers is utilizing this device to automate and make his life simpler.

So is it moral for him to go and say like, properly, should you’re going to make use of the automated device, we truly need you to perform a little bit extra of it. You’ll make more cash. However should you don’t hit this quota, there will likely be a penalty. So Kate, what do you make of this ethical universe?

Pondering this by way of, I feel the quota ought to most likely keep the identical as a result of he’s not being paid by the hour, proper? He’s being paid by the quantity of textual content that he interprets. So he’ll make the identical amount of cash. Perhaps he does a little bit bit quicker, and that’s nice.

I do suppose placing on a labor hat for a minute that should you’re growing the amount or the kind of work that an individual is doing, then they most likely must be compensated otherwise for that work. I feel it may very well be a suggestion to say, hey, I see that you just’re doing this. Do you wish to earn more cash by elevating the quota? However I don’t suppose it may be an ask with out an incentive.

That’s sort of the place my thoughts lands on this too is that this appears like only a dialog that John ought to have along with his freelancer and say, hey, look, we all know there are new instruments on the market that make this job simpler. We’re comfy with you utilizing them. There’s truly a approach for you to make more cash doing this now in the identical period of time. Is that interesting to you?

My guess is there’s a very good likelihood that freelancers are going to say, sure. If for no matter cause the freelancer says, no, I wish to generate the very same quantity of textual content that I’ve been doing up to now and never receives a commission any extra for it, that looks like that ought to perhaps be OK with John too.

Yeah, I feel that is truly going to be an enormous pressure in inventive or white collar industries, the stability between employee productiveness, how a lot you may get achieved utilizing these instruments, and managers expectations of productiveness.

And we truly noticed this within the twentieth century in blue collar manufacturing contexts. There have been crops that introduced in robots to make issues. And as a part of the automation of these factories, they pushed up the quotas. And so the employees who had been anticipated to make 10 automobiles a day had been now anticipated to make 100 automobiles a day, however their pay didn’t rise by 10 occasions. If something their jobs acquired extra annoying as a result of there have been now these new expectations, and it led to a whole lot of battle and strife and truly some large strikes at a few of the large auto crops within the Nineteen Seventies.

So we’ve been by way of this earlier than within the context of producing work. I feel it’s simply going to be a query for white collar and artistic employees of if a device makes you twice as productive at your job, must you anticipate to be paid twice as a lot? I feel the reply to that’s most likely no.

I feel the bosses aren’t going to go for that, which is why I feel there’s going to be a whole lot of secret self automation occurring. I feel a whole lot of employees are going to be utilizing these items and never telling their bosses as a result of they know in the event that they inform the boss, the boss goes to lift the quota. They’re not going to lift the pay. And they also’re simply going to do it in secret after which use no matter time they save prefer to play video video games or no matter.

Yeah, I feel there was a little bit little bit of secret self automation occurring with that lawyer we talked about earlier right now.

Completely.

All proper, this subsequent one involves us from a listener who wrote us over e-mail. They didn’t ship us a voice memo, and we’ll withhold their identify for causes which I feel will turn into obvious in a second. However right here is their arduous query, quote, “I’ve had a crush on this individual for a 12 months, however I actually take pleasure in simply being mates with them. I don’t wish to screw something up as this individual has been adamant about discovering an s.o., important different, and brazenly discusses their dates with me.

“Anyway, is it incorrect of me to wish to use 11 Labs to create an artificial model of their voice and have it inform me that they love me? It’s one thing that I lengthy to listen to, however I’m undecided if that opens doorways which can be higher left closed.”

Kate, ought to this individual create an artificial model of their beloved’s voice and have it inform them that they love them?

No.

No, they need to not.

Yeah, and why not would you say?

It’s bizarre.

I feel that is only a primary consent situation. If that individual doesn’t like our pining lover and wish to say these issues to them, then the pining lover mustn’t attempt to discover a workaround to make that occur.

It does really feel like that is like one step wanting simply creating deepfake porn of the individual, proper?

Yeah.

Yeah.

Yeah, and it’s simply — I feel it’s creepy. I feel if I had came upon that somebody had achieved that to me, I’d be actually weirded out. I wouldn’t wish to proceed the friendship. And yeah, I simply suppose it’s going into an space that’s going to be uncomfortable for the good friend.

Yeah, Kevin, what do you suppose?

Yeah, I agree. I feel it is a step too far. I’m usually the permissive one between us in the case of utilizing AI for bizarre and offbeat issues. On this case, although, I feel that making an artificial clone of somebody’s voice with out their consent is definitely immoral.

And I feel that that is one thing that truly 11 Labs, which is the corporate that was talked about on this query, has needed to cope with as a result of this firm put out an AI cloning device for voices. And other people instantly began utilizing it to make well-known folks say offensive issues, in order that they ultimately needed to implement some controls.

Now, these controls aren’t very tight. I used to be ready to make use of 11 Labs a couple of weeks in the past to have Prince Harry report an intro for this podcast [LAUGHS]: that we by no means aired, however it was fairly good. However I —

[LAUGHS]: What did you have got him say?

Would you like me to play it for you?

Doesn’t he have his personal podcast?

No, however he has an audiobook which could be very useful for getting top quality voice samples for coaching an audio clone.

Wait, can you have got Prince Harry say that he loves me?

No, I can truly.

We’re not going to try this. We’re not going to try this. We’re not going to try this.

We simply determine it’s dangerous.

Kate, I’ve questions for you. So are there issues wanting tra — so for instance, would it not be unethical — should you had a crush on somebody — to jot down your self GPT generated love letters that had been from that individual. Is the voice cloning the offensive half, or is it the make consider fantasy world of making artificial proof that this individual feels the identical approach about you? Are there variations of this that will not be over the road?

I feel that the voice factor begins to get into bodily autonomy in a approach that makes it a little bit bit ickier to me. However yeah, I feel the love letter factor — once more, should you came upon that somebody was doing this to you, would you not simply be very creeped out by it? Can we give love recommendation on the tech podcast? Is that allowed?

That’s why most individuals take heed to this.

Yeah, I feel so. So I feel this individual is having a factor the place they love this individual, however they’re transferring and selecting actions that serve themselves. And I feel whenever you love another person, you need to take into consideration what their wants are and how you can serve them, and that’s the expression of affection that you must pursue quite than a self serving sort of id-driven love.

And so I feel if this individual is expressing, I wish to be mates. I would like you to be my confidant and let you know about my dates and open up to you about my seek for important different, I feel you could take a step again and love that individual as they’re asking to be liked, which is as a good friend, and to present that help and to information them in direction of the end result that they’ve stated that they need. And whether or not it’s AI love notes or AI voice memos or no matter, that’s simply driving in direction of a self serving consequence that isn’t actually an expression of affection for this individual.

I feel that’s fantastically stated.

Yeah, that’s nice recommendation, and it applies to AI generated love pursuits in addition to human ones.

That is additionally only a case the place we’ve such good analog options to this drawback. If in case you have a crush that’s going to be unrequited endlessly, take heed to Radiohead, take heed to Joni Mitchell. We’ve the expertise for this, and you may take heed to all of that very ethically.

All proper, this subsequent one comes from a listener named Chris Vecchio, and it’s fairly heady. Chris writes to us, quote, “I’m wondering what you consider the moral and theological implications of utilizing LLMs to generate prayers. Is it acceptable to make use of a machine to speak with a better energy? Does it diminish the worth or sincerity of prayer? What are the potential advantages and dangers of utilizing LLMs for religious functions?” Kate, what do you suppose?

I truly like this concept. I’m not a non secular individual, however I did develop up within the church. And I feel after I was attempting to wish, I didn’t know essentially what to say. There’s this concept of speaking to God the place you’re like, oh, I actually higher say one thing good. I’ve acquired the large man on the cellphone right here.

And it may be sort of intimidating. It may be arduous to suppose by way of how finest to precise your self. And so I truly like the thought of working with an LLM to generate prayer and to sort of determine your emotions and information you after which perhaps utilizing that as a stepping stone into your religious follow.

I agree with you. I feel that it is a excellent use of AI. There’s this time period that will get thrown round — and I hate the time period, so I want to provide you with a greater one. However folks have began to name a thought associate. Have you ever heard of this?

The fundamental thought is, you’re writing one thing. You’re engaged on a challenge. And also you simply need one thing which you can bounce some concepts off of. You need somebody who can assist get you began, offer you a couple of concepts. And a prayer is a wonderfully cheap place to need a thought associate, proper?

So I’m positive on the complete web that these fashions have been educated on, there are a whole lot of prayers. And the concept that you may simply sort of get a couple of concepts and get some textual content free to contemplate and tweak to your individual liking, that looks like an exquisite use of AI to me.

Yeah, so earlier than I used to be a tech journalist, I spent a while as a faith journalist. And one of many issues that I feel AI goes to be excellent for is devotions, this day by day religious follow the place people who find themselves non secular, they’ll meditate, or they’ll pray, or they’ll do a day by day studying.

They really promote these books known as devotionals the place each day of the 12 months you have got a distinct factor that’s customized to what time of 12 months it’s or what is perhaps occurring in your life that you just may want some particular steerage on.

And so I feel I is definitely a extremely good use case for that as a result of it may personalize — it may say it seems to be like — I don’t know — it may say it’s spring. And typically you have got seasonal melancholy, and so perhaps you’re feeling a little bit bit higher. So right here’s some steerage that would enable you suppose by way of that transition. I can consider every kind of ways in which religious life may very well be affected by massive language fashions.

Yeah. All proper, Kate, we’ve yet one more arduous query for you. This one came to visit DM. They usually stated, quote, “My finest good friend’s dad stated that he used ChatGPT to jot down a Mom’s Day card for his spouse and stated it was the most effective one he has ever written, and he or she cried.” And this individual’s query is, “Ought to he inform her?”

I don’t know. Clearly, not! Don’t inform her. Don’t inform her.

Why not?

As a result of folks purchase hallmark playing cards on a regular basis and implicit within the card is that you just didn’t write the textual content that comes pre-printed on the within of the cardboard. The explanation that we’ve a greetings card business is as a result of folks have hassle expressing themselves. So the concept that you’d simply use a device of the second to generate one thing that feels genuine to the best way that you just really feel about your individual mother is totally nice.

It’s such as you specific one thing, presumably, if it stated one thing you didn’t agree with you’d have modified the phrases. Nevertheless it truly turned out that lots of people love mothers in comparable methods, and ChatGPT was in a position to articulate that, so why inform her?

My subsequent query is, do you suppose the greeting card business goes to be disrupted by AI?

I hope so, and right here’s why. I purchased a Thank You card the opposite day at a neighborhood pharmacy, and it was $8. And I about misplaced [LAUGHS]: my thoughts.

I believed how may — all it stated was “Thank You!” And on the within, it stated, “You’re one in one million.” And for that, $8. Come on.

Was it a cute design?

It was a really cute design.

Oh, OK.

May you have got achieved higher in Mid Journey?

[LAUGHS]: I may, however I don’t have a printer. You don’t wish to get a printer.

That’s true.

I’m a millennial —

Who has a printer nowadays? I do suppose that the AI generated greeting card goes to be very humorous as a result of it’ll make errors. Folks will likely be wishing somebody a contented birthday, after which it’ll simply veer off in paragraph 3 and begin speaking about —

Effectively, that will be — if any person wished me a birthday card that was primarily based on ChatGPT beat and it simply invented a bunch of issues that occurred in our friendship that didn’t truly happen, that’d be hilarious and great to me. “Keep in mind that time we went to the moon?” Like, I like — please.

I did run an experiment. I’m giving a chat on AI, and I used to be looking for some examples of the place AI fashions have improved over the past three years. And so I ran this immediate by way of two fashions, one was GPT 2 which was a pair generations in the past, and one from GPT 4.

And the immediate I used was “End a Valentine’s Day card that begins ‘Roses are purple. Violets are blue.’” That’s all I gave it. And GPT 4, the brand new mannequin, stated, “Roses are purple. Violets are blue. Our love is timeless. Our bond is true.”

Oh, excellent.

Lovely.

GPT 2, the four-year-old mannequin, stated, “Roses are purple. Violets are blue. My girlfriend is useless.”

Wow. It seems like a paramour music or one thing.

So I feel it’s protected to say that these fashions have gotten adequate to exchange hallmark greeting playing cards simply up to now few years. However earlier than that, you wouldn’t have wished to make use of them for something like romance.

I do really feel like this one is much like the prayer factor the place it’s a excessive stakes situation. You’re attempting to determine what to say. And if it helps you get to the emotional fact that you just’re attempting to precise, positive.

I feel my query is, does mother perceive sufficient about how these fashions work to know that dad was there attempting to work by way of his emotions and discover an expression that felt true to him? Or is it going to really feel like he went out, and xeroxed another person’s Mom’s Day card, and handed it to her?

That’s what I —

Effectively, right here’s what you need. If you learn the textual content that ChatGPT has produced for the Mom’s Day card, you wish to reduce out the half the place it says, “I’m a big language mannequin, and I don’t perceive what motherhood means.” Minimize out that half and simply depart the great sentiments, and then you definitely’ll be in fine condition.

I feel this truly — that is going to be a captivating factor to look at as a result of what we find out about issues that get automated is that they turn into very depersonalized in a short time. Do you bear in mind a couple of years in the past when Fb — there was a characteristic that will warn you when your good friend’s birthday was.

That was a pleasant characteristic. You bear in mind somebody’s birthday. You’d write on their Fb wall “Blissful Birthday.”

90 p.c of each birthday greeting I’ve ever given in my life was due to that characteristic.

Proper. So then they did this factor the place they began auto populating the birthday messages the place you may simply have it simply robotically — you principally —

“Have a very good one, canine!”

Proper.

You could possibly simply do this 100 occasions a day for everybody’s birthday. When that occurred, it completely reversed the symbolism of the Blissful Birthday message that you just acquired. If you acquired a birthday message from somebody on Fb, you knew that they really weren’t your good friend as a result of they didn’t care about you sufficient to truly write an actual message. They had been simply utilizing the auto populated one.

So I truly suppose that is going to occur with every kind of makes use of of AI the place it’s going to be like, did you simply use ChatGPT for this? And it’ll truly be a extra caring expression at hand write one thing. Put some typos in or one thing the place it’s clear that you just truly did this and never a big language mannequin.

Yeah, it’s a good time to study calligraphy.

That’s all I’ve to say about that.

Kate, thanks a lot for becoming a member of us for arduous questions, and we hope you’ll come again someday.

Yeah, thanks for being our ethics guru.

After all, I’m pleased to be right here. Can we take heed to the Onerous Questions rock music yet one more time?

Oh. yeah.

Yeah. [MUSIC PLAYING]

Onerous Questions.

So sick.

I’d love to listen to that with the brand new lyrics, “Roses are purple. Violets are blue. My girlfriend is useless.”

All proper, thanks, Kate.

Thanks.

Thanks.

Bye, boys.

Bye [MUSIC PLAYING]

That’s the present for this week. And only a reminder, as we stated final week on the present, we’re asking for submissions, voice memos, emails from teenage listeners of this present about how you’re utilizing social media in your lives and what you make of all these makes an attempt to make social media higher and safer for you.

And significantly, should you’re truly take pleasure in utilizing social media, and you are feeling prefer it’s introduced one thing good to your life, we truly haven’t heard from any individuals who suppose that approach but. So should you’re a kind of people, please ship in a voice memo.

However you’re most likely too busy refreshing your Instagram.

Yeah, put down Instagram.

Yeah, e-mail us as a substitute. [MUSIC PLAYING]

“Onerous Fork” is produced by Rachel Cohn and Davis Land, had been edited by Jen Puente, this episode was truth checked by Caitlin Love. At the moment’s present was engineered by Alyssa Moxley. Unique music by Dan Powell, Marion Lozano, and Sofia Landman.

Particular due to Paula Szuchman, Pui-Wing Tam, Nell Gallogly, Kate LoPresti and Jeffrey Miranda. As all the time, you possibly can e-mail us at “Onerous Fork” at nytimes.com.

[MUSIC PLAYING]