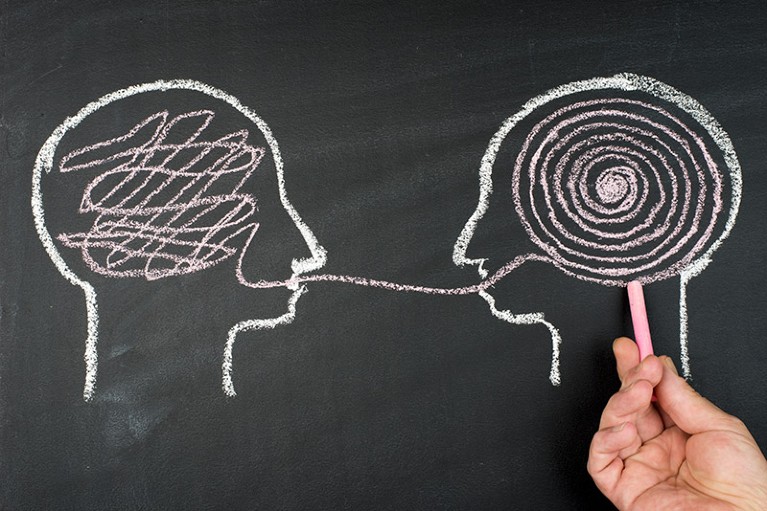

A neural network can now use new words in flexible, human-like ways. Credit: marrio31/Getty

Scientists have developed a neural network with the human-like ability to make generalizations about language¹. The artificial intelligence (AI) system performs about as well as humans at folding newly learned words into an existing vocabulary and using them in new contexts, a key aspect of human cognition known as systematic generalization.

The researchers also gave the task to the type of AI model that underlies the chatbot ChatGPT, and found that it performed worse on such tests than either the new neural network or people, even though the chatbot can converse like a human.

The work, published in Nature, could lead to machines that interact with people more naturally than even the best AI systems do today. Although language-based systems such as ChatGPT are adept at conversation in many contexts, they show glaring gaps and inconsistencies in others.

The neural network's human-like performance suggests progress in the ability to train networks "to be systematic", says Paul Smolensky, a cognitive scientist who studies language at Johns Hopkins University in Baltimore, Maryland.

Language learning

Systematic generalization is characterized by people's ability to use newly acquired words effortlessly in new settings. For example, once someone understands the meaning of the word 'photobomb', they will be able to use it in a variety of contexts, such as 'photobomb twice' or 'photobomb during a Zoom call'. Similarly, someone who understands the sentence 'the cat chases the dog' also understands 'the dog chases the cat' without much thought.

But neural networks, which dominate artificial-intelligence research, do not have this ability built in, says Brenden Lake, a computer scientist at New York University and co-author of the study. Unlike people, neural networks struggle to use a new word until they have been trained on many example texts that use it. Artificial-intelligence researchers have debated for nearly 40 years whether neural networks can be a plausible model of human cognition if they cannot demonstrate this kind of systematicity.

To settle this debate, the authors first tested 25 people on how well they applied newly learned words in different contexts. To make sure the participants were learning the words for the first time, the researchers tested them on a pseudo-language consisting of two groups of nonsense words. 'Primitive' words such as 'dax', 'wif' and 'lug' represented concrete actions such as 'skip' and 'jump'. More abstract 'function' words such as 'blicket', 'kiki' and 'fep' specified rules for using and combining the primitives, resulting in sequences such as 'jump three times' or 'skip backwards'.

Participants were taught to link each primitive word with a circle of a particular colour, so a red circle represented 'dax' and a blue circle represented 'lug'. The researchers then showed the participants combinations of primitive and function words alongside the patterns of circles that would result when the functions were applied to the primitives. For example, the phrase 'dax fep' was shown with three red circles, and 'lug fep' with three blue circles, indicating that 'fep' denotes an abstract rule to repeat a primitive three times.

Finally, the researchers tested the participants' ability to apply these abstract rules by giving them combinations of primitives and functions. The participants then had to select the correct colour and number of circles and place them in the appropriate order.

The hallmark of knowledge

As predicted, people performed very well on this task; on average, they selected the correct combination of coloured circles about 80% of the time. When they made errors, the researchers noticed that these followed a pattern reflecting well-known human biases.

Next, the researchers trained a neural network to perform a task similar to the one given to the participants, by programming it to learn from its mistakes. This approach allowed the AI to learn as it completed each task, rather than from a static data set, which is the standard way to train neural networks. To make the neural network more human-like, the authors trained it to reproduce the patterns of errors they observed in the human test results. When the network was then tested on new puzzles, its answers matched those of the volunteers almost exactly, and in some cases exceeded their performance.
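To make the pseudo-language task concrete, here is a minimal Python sketch of it. It is an illustration under stated assumptions, not the study's model or data: only 'dax' (red), 'lug' (blue) and 'fep' (repeat three times) come from the article; the green colour for 'wif', treating 'fep' as acting on the word just before it, and the hypothetical helpers `interpret` and `make_episode` are all invented here. The episode generator loosely mimics learning from a stream of fresh tasks rather than a static data set by reshuffling word meanings in every episode.

```python
import random

# From the article: 'dax' -> red, 'lug' -> blue.
# ASSUMPTION: 'wif' -> green is hypothetical; the article gives no colour for it.
PRIMITIVES = {"dax": "RED", "lug": "BLUE", "wif": "GREEN"}

# Only 'fep' ("repeat three times") is described in the article.
# ASSUMPTION: 'fep' applies to the primitive immediately before it.
REPEAT_COUNTS = {"fep": 3}


def interpret(phrase, primitives=PRIMITIVES):
    """Hypothetical helper: translate a phrase into a list of coloured circles."""
    output = []
    last = None  # circles produced by the most recent primitive
    for word in phrase.split():
        if word in primitives:
            last = [primitives[word]]
            output.extend(last)
        elif word in REPEAT_COUNTS:
            if last is None:
                raise ValueError(f"'{word}' must follow a primitive word")
            # One copy was already emitted; add the remaining copies.
            output.extend(last * (REPEAT_COUNTS[word] - 1))
            last = None
        else:
            raise ValueError(f"unknown word: {word!r}")
    return output


def make_episode(rng, colours=("RED", "BLUE", "GREEN", "YELLOW")):
    """Hypothetical helper: sample one episode with a fresh word-to-colour mapping.

    Because the mapping is re-drawn every episode, a learner cannot memorise
    fixed word meanings; it must infer them from the episode's study examples.
    """
    words = list(PRIMITIVES)
    mapping = dict(zip(words, rng.sample(colours, len(words))))
    # Study examples: each primitive alone, plus one 'fep' demonstration.
    study = [(w, interpret(w, mapping)) for w in words]
    demo = words[0]
    study.append((f"{demo} fep", interpret(f"{demo} fep", mapping)))
    # Query: apply 'fep' to a primitive whose repetition was not demonstrated.
    query = f"{rng.choice(words[1:])} fep"
    return study, (query, interpret(query, mapping))


if __name__ == "__main__":
    print(interpret("dax fep"))      # ['RED', 'RED', 'RED']
    print(interpret("lug fep dax"))  # ['BLUE', 'BLUE', 'BLUE', 'RED']
    study, (query, answer) = make_episode(random.Random(0))
    print(query, answer)
```

A correct answer to each episode's query requires combining two facts seen only in that episode's study examples (the new colour of a word and the meaning of 'fep'), which is the kind of composition the article calls systematic generalization.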

Testing artificial intelligence

By contrast, GPT-4 struggled with the same task, failing, on average, between 42% and 86% of the time, depending on how the researchers presented the task. "It's not magic, it's practice," says Lake. He compares the approach to the way a child learns their native language: the model improves its compositional skills through practice on a series of learning tasks.

Melanie Mitchell, a computer and cognitive scientist at the Santa Fe Institute in New Mexico, says the research is an interesting proof of principle, but it remains to be seen whether this training method can scale up to much larger data sets or to images. Lake hopes to tackle this problem by studying how people develop the ability to generalize systematically from an early age, and incorporating those findings to build a more robust neural network.

The approach could also make neural networks more efficient learners. This would reduce the amount of data needed to train systems such as ChatGPT and would minimize 'hallucination', which occurs when AI perceives patterns that do not exist and produces inaccurate output. "Infusing systematicity into neural networks is a big deal," says Bruni. "It could tackle both of these issues at the same time."