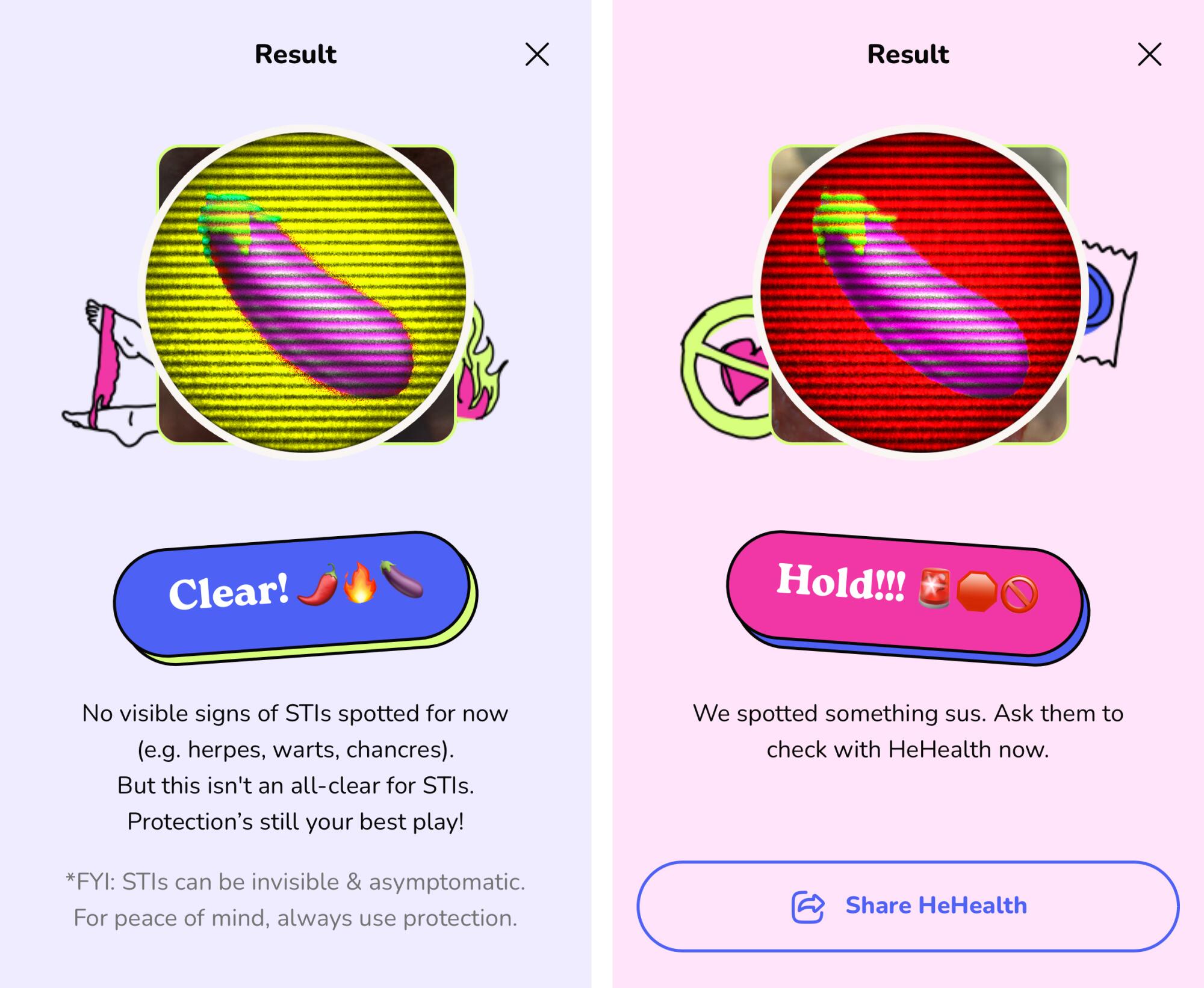

Late last month, the San Francisco-based startup HeHealth announced the launch of Calmara.ai, a cheerful, emoji-laden website the company describes as “your tech savvy BFF for STI checks.”

The concept is simple. A user concerned about their partner’s sexual health status just snaps a photo (with consent, the service notes) of the partner’s penis (the only part of the human body the software is trained to recognize) and uploads it to Calmara.

In seconds, the site scans the image and returns one of two messages: “Clear! No visible signs of STIs spotted for now” or “Hold!!! We spotted something sus.”

Calmara describes the free service as “the next best thing to a lab test for a quick check,” powered by artificial intelligence with “up to 94.4% accuracy rate” (though finer print on the site clarifies its actual performance is “65% to 96% across various conditions.”)

Since its debut, privacy and public health experts have pointed with alarm to a number of significant oversights in Calmara’s design, such as its flimsy consent verification, its potential to receive child pornography and an over-reliance on images to screen for conditions that are often invisible.

But even as a rudimentary screening tool for visual signs of sexually transmitted infections in one specific human organ, tests of Calmara showed the service to be inaccurate, unreliable and prone to the same kind of stigmatizing information its parent company says it wants to combat.

A Los Angeles Times reporter uploaded to Calmara a broad range of penis images taken from the Centers for Disease Control and Prevention’s Public Health Image Library, the STD Center NY and the Royal Australian College of General Practitioners.

Calmara issued a “Hold!!!” to multiple images of penile lesions and bumps caused by sexually transmitted conditions, including syphilis, chlamydia, herpes and human papillomavirus, the virus that causes genital warts.

Screenshots, with genitals obscured by illustrations, show that Calmara gave a “Clear!” to a photo from the CDC of a severe case of syphilis, left, uploaded by The Times; the app said “Hold!!!” on a photo, from the Royal Australian College of General Practitioners, of a penis with no STIs. (Screenshots via Calmara.ai; photo illustration by Los Angeles Times)

But the site failed to recognize some textbook images of sexually transmitted infections, including a chancroid ulcer and a case of syphilis so pronounced the foreskin was no longer able to retract.

Calmara’s AI frequently misidentified naturally occurring, non-pathological penile bumps as signs of infection, flagging multiple images of disease-free organs as “something sus.”

It also struggled to distinguish between inanimate objects and human genitals, issuing a cheery “Clear!” to images of both a novelty penis-shaped vase and a penis-shaped cake.

“There are so many things wrong with this app that I don’t even know where to begin,” said Dr. Ina Park, a UC San Francisco professor who serves as a medical consultant for the CDC’s Division of STD Prevention. “With any tests you’re doing for STIs, there is always the possibility of false negatives and false positives. The issue with this app is that it appears to be rife with both.”

Dr. Jeffrey Klausner, an infectious-disease specialist at USC’s Keck School of Medicine and a scientific adviser to HeHealth, acknowledged that Calmara “can’t be promoted as a screening test.”

“To get screened for STIs, you’ve got to get a blood test. You have to get a urine test,” he said.
“Having someone look at a penis, or having a digital assistant look at a penis, is not going to be able to detect HIV, syphilis, chlamydia, gonorrhea. Even most cases of herpes are asymptomatic.”

Calmara, he said, is “a very different thing” from HeHealth’s signature product, a paid service that scans images a user submits of his own penis and flags anything that merits follow-up with a healthcare provider.

Klausner did not respond to requests for additional comment about the app’s accuracy.

Both HeHealth and Calmara use the same underlying AI, though the two sites “may have differences at identifying issues of concern,” co-founder and CEO Dr. Yudara Kularathne said.

“Powered by patented HeHealth wizardry (think an AI so sharp you’d think it aced its SATs), our AI’s been battle-tested by over 40,000 users,” Calmara’s website reads, before noting that its accuracy ranges from 65% to 96%.

“It’s great that they disclose that, but 65% is terrible,” said Dr. Sean Young, a UCI professor of emergency medicine and executive director of the University of California Institute for Prediction Technology. “From a public health perspective, if you’re giving people 65% accuracy, why even tell anyone anything? That’s potentially more harmful than beneficial.”

Kularathne said the accuracy range “highlights the complexity of detecting STIs and other visible conditions on the penis, each with its unique characteristics and challenges.” He added: “It’s important to understand that this is just the starting point for Calmara.
As we refine our AI with more insights, we expect these figures to improve.”

On HeHealth’s website, Kularathne says he was inspired to start the company after a friend became suicidal after “an STI scare magnified by online misinformation.”

“Numerous physiological conditions are often mistaken for STIs, and our technology can provide peace of mind in these situations,” Kularathne posted Tuesday on LinkedIn. “Our technology aims to bring clarity to young people, especially Gen Z.”

Calmara’s AI also mistook some physiological conditions for STIs.

The Times uploaded a number of images onto the site that were posted on a medical website as examples of non-communicable, non-pathological anatomical variations in the human penis that are sometimes confused with STIs, including skin tags, visible sebaceous glands and enlarged capillaries.

Calmara identified each one as “something sus.”

Such inaccurate information could have exactly the opposite effect on young users than the “clarity” its founders intend, said Dr. Joni Roberts, an assistant professor at Cal Poly San Luis Obispo who runs the campus’s Sexual and Reproductive Health Lab.

“If I am 18 years old, I take a picture of something that is a normal occurrence as part of the human body, [and] I get this that says that it’s ‘sus’? Now I’m stressing out,” Roberts said.

“We already know that mental health [issues are] extremely high in this population. Social media has run havoc on people’s self image, worth, depression, et cetera,” she said. “Saying something is ‘sus’ without providing any information is problematic.”

Kularathne defended the site’s choice of language. “The phrase ‘something sus’ is deliberately chosen to indicate ambiguity and suggest the need for further investigation,” he wrote in an email.
“It’s a prompt for users to seek professional advice, fostering a culture of caution and responsibility.”

Still, “the misidentification of healthy anatomy as ‘something sus’ if that happens, is indeed not the outcome we aim for,” he wrote.

Users whose photos are issued a “Hold” notice are directed to HeHealth where, for a fee, they can submit additional photos of their penis for further scanning.

Those who get a “Clear” are told “No visible signs of STIs spotted for now . . . But this isn’t an all-clear for STIs,” noting, correctly, that many sexually transmitted conditions are asymptomatic and invisible. Users who click through Calmara’s FAQs will also find a disclaimer that a “Clear!” notification “doesn’t mean you can skimp on further checks.”

Young raised concerns that some people might use the app to make immediate decisions about their sexual health.

“There’s more ethical obligations to be able to be transparent and clear about your data and practices, and to not use the typical startup approaches that a lot of other companies will use in non-health spaces,” he said.

In its current form, he said, Calmara “has the potential to further stigmatize not only STIs, but to further stigmatize digital health by giving inaccurate diagnoses and having people make claims that every digital health tool or app is just a big sham.”

HeHealth.ai has raised about $1.1 million since its founding in 2019, co-founder Mei-Ling Lu said. The company is currently seeking another $1.5 million from investors, according to PitchBook.

Medical experts interviewed for this article said that technology can and should be used to reduce barriers to sexual healthcare.
Providers including Planned Parenthood and the Mayo Clinic are using AI tools to share vetted information with their patients, said Mara Decker, a UC San Francisco epidemiologist who studies sexual health education and digital technology.

But when it comes to Calmara’s approach, “I basically can see only negatives and no benefits,” Decker said. “They could just as easily replace their app with a sign that says, ‘If you have a rash or noticeable sore, go get tested.’”

An AI app claims it can detect sexually transmitted infections. Doctors say it's a disaster
