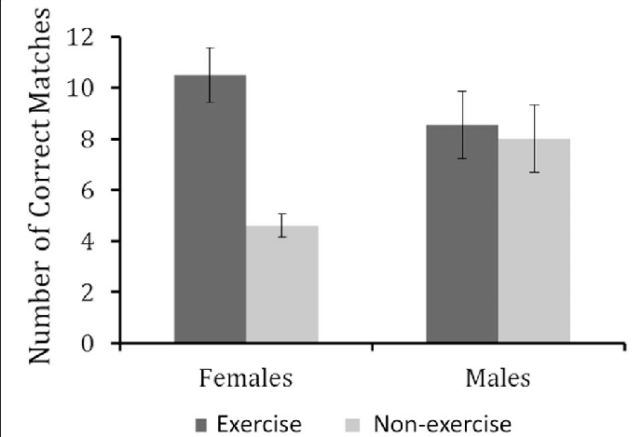

Performance of selected GPT and LLaMA models as task difficulty increases. Credit: Nature (2024). DOI: 10.1038/s41586-024-07930-y

A team of AI researchers at the Universitat Politècnica de València, in Spain, has found that as popular LLMs (large language models) grow larger and more sophisticated, they become less likely to admit to a user that they do not know the answer.

In their study, published in the journal Nature, the group tested the latest versions of three popular AI chatbots, looking at their responses, their accuracy, and how well users can spot wrong answers.

As LLMs have become mainstream, users have grown accustomed to using them to write papers, poems or songs, to solve math problems and to handle other tasks, and the accuracy of their output has become a bigger concern. In this new study, the researchers asked whether the most popular LLMs are becoming more accurate with each new update and what they do when they get something wrong.

To test the accuracy of three of the most popular LLMs, BLOOM, LLaMA and GPT, the group prompted them with thousands of questions and compared the answers with those that earlier versions gave to the same questions.

They also varied the topics, including math, science, anagrams and geography, and tested the LLMs' ability to generate text or to perform actions such as ordering a list. Every question was first assigned a difficulty level.

They found that accuracy improved overall with each new version of a chatbot. They also found that accuracy fell as the questions became harder, as expected. But they found, too, that as LLMs grew larger and more sophisticated, they became less forthcoming about their own ability to answer a question correctly.
In earlier versions, most of the LLMs responded by telling users that they could not find the answer or that they needed more information. In the newer versions, the LLMs were more likely to guess, which led to more answers overall, both correct and incorrect. The researchers also found that all of the LLMs occasionally gave wrong answers even to easy questions, suggesting that they are still not reliable.

The research team then asked volunteers to rate the answers from the first part of the study as correct or incorrect and found that most had trouble spotting the incorrect answers.

More information: Lexin Zhou et al, Larger and more instructable language models become less reliable, Nature (2024). DOI: 10.1038/s41586-024-07930-y

© 2024 Science X Network

Citation: As LLMs grow bigger, they're more likely to give wrong answers than admit ignorance (2024, September 27)