Cambridge researchers warn of the hazards of 'deadbots', AI that imitates dead people, and call for ethical standards and rules to prevent misuse and ensure respectful interactions.

Artificial intelligence that lets users hold conversations with lost loved ones risks causing emotional harm, and even digitally “haunting” those left behind, if it is designed without safety standards. Deadbots simulate the dead using the digital footprints they leave behind. Some companies are already offering these services, providing a new kind of “postmortem presence.”

AI experts from Cambridge's Leverhulme Centre for the Future of Intelligence present three design scenarios for platforms that could emerge as part of the “digital afterlife industry”. The scenarios show the consequences of careless design in an area of AI the researchers describe as “high risk.”

Misuse of AI Chatbots

The research, published in the journal Philosophy and Technology, highlights the potential for companies to use deadbots to covertly advertise products to users in the manner of a departed loved one, or to distress children by insisting that a dead parent is still “with you.”

When people sign up to be recreated after death, companies could use the resulting chatbots to spam surviving family and friends with unsolicited notifications, reminders, and updates about the services they provide, akin to being “stalked by the dead.” Yet, the researchers argue, survivors may be powerless to have the AI simulation suspended if their now-deceased loved one signed a long-term contract with a digital afterlife service.

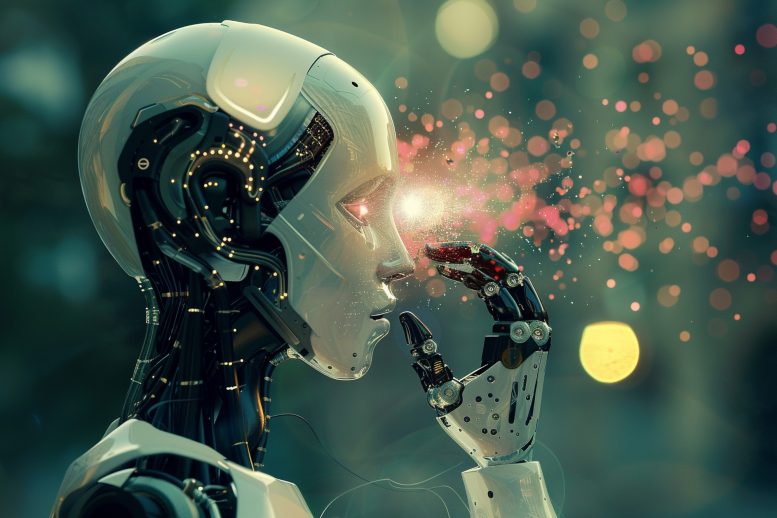

A visualization of a fictional company called MaNana, one of the design scenarios used in the paper to show what could happen in the emerging digital afterlife industry. Credit: Dr Tomasz Hollanek

“Rapid advances in generative AI mean that nearly anyone with internet access and some basic know-how can revive a deceased loved one,” said Dr Katarzyna Nowaczyk-Basińska, study co-author and researcher at Cambridge's Leverhulme Centre for the Future of Intelligence (LCFI).

“This area of AI is an ethical minefield. It's important to prioritize the dignity of the deceased, and ensure that this isn't encroached on by the financial motives of digital afterlife services, for example. At the same time, a person may leave an AI simulation as a farewell gift for loved ones who are not prepared to process their grief in this manner. The rights of both data donors and those who interact with AI afterlife services should be equally safeguarded.”

Platforms offering to recreate the dead with AI already exist, such as 'Project December', which started out using GPT models before developing its own systems, and apps including 'HereAfter'.

One of the potential scenarios in the new paper is “MaNana”, a service that allows people to create a deadbot simulating their deceased grandmother without the consent of the “data donor” (the grandmother herself).

In the hypothetical scenario, a grandchild who is initially impressed and comforted by the technology begins to receive advertisements once a “premium trial” ends. For example, the chatbot suggests ordering from food delivery services in the voice and writing style of the deceased.

The relative feels they have disrespected the memory of their grandmother and wants the deadbot switched off, but in a meaningful way; this is something the service providers have not considered.

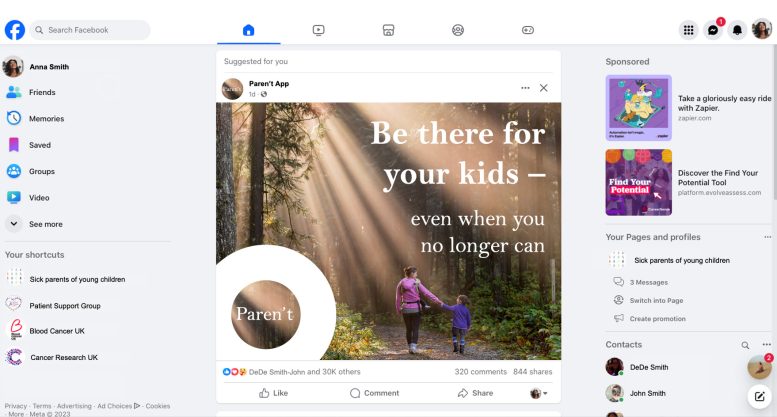

A visualization of a fictional company called Paren't. Credit: Dr Tomasz Hollanek

“People might develop strong emotional bonds with such simulations, which will make them particularly vulnerable to manipulation,” said co-author Dr Tomasz Hollanek, also from Cambridge's LCFI.

“Methods and even rituals for retiring deadbots in a dignified way should be considered. This may mean a form of digital funeral, for example, or other types of ceremony depending on the social context. We recommend design protocols that prevent deadbots being utilized in disrespectful ways, such as for advertising or having an active presence on social media.”

Hollanek and Nowaczyk-Basińska say that designers of re-creation services should seek consent from data donors before they die, but they argue that a ban on deadbots based on non-consenting donors would be unfeasible.

They suggest that design processes should include a series of prompts for those looking to “resurrect” their loved ones, such as 'Have you ever spoken with X about how they would like to be remembered?', so that the dignity of the departed is foregrounded in deadbot development.

In the Paren't scenario, a mother leaves a deadbot to help her eight-year-old son through the grieving process. As the AI adapts to the child's needs, however, it starts to generate confusing responses, such as suggesting that they will soon meet in person.

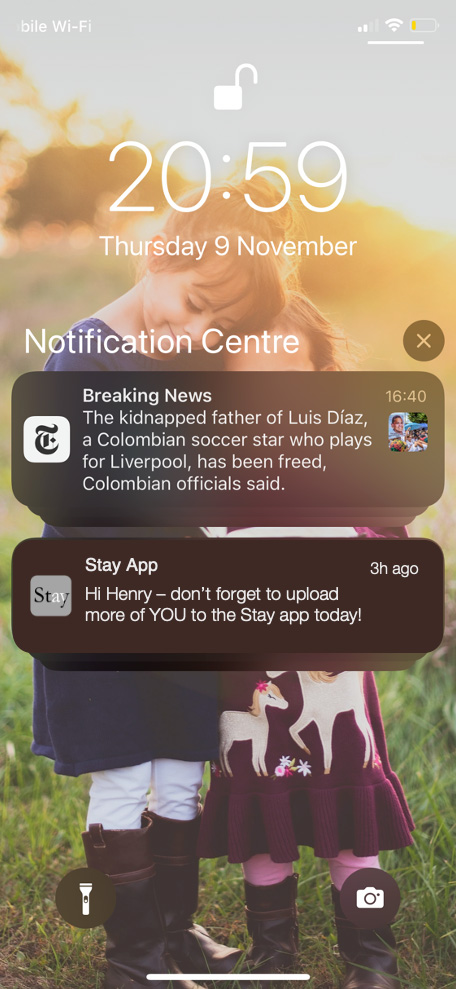

A visualization of a fictional company called Stay. Credit: Dr Tomasz Hollanek

The researchers recommend age restrictions for deadbots, and also call for “transparency” to ensure that users are always aware they are interacting with an AI. Such notices could resemble current warnings on content that may cause seizures, for example.

The final scenario explored by the study, a fictional company called “Stay”, shows an older man secretly committing to a deadbot of himself and paying for a twenty-year subscription, in the hope that it will comfort his adult children and allow his grandchildren to know him.

After his death, the service begins. One adult child does not engage, and receives a barrage of emails in the voice of their dead parent. Another does, but ends up emotionally exhausted and wracked with guilt over the fate of the deadbot. Yet suspending the deadbot would violate the terms of the contract the parent signed with the service company.

“It is vital that digital afterlife services consider the rights and consent not just of those they recreate, but of those who will have to interact with the simulations,” said Hollanek.

“These services run the risk of causing huge distress to people if they are subjected to unwanted digital hauntings from alarmingly accurate AI recreations of those they have lost. The potential psychological effect, particularly at an already difficult time, could be devastating.”

The researchers call for design teams to prioritize opt-out protocols that allow potential users to end their relationships with deadbots in ways that provide emotional closure.

“We need to start thinking now about how we mitigate the social and psychological risks of digital immortality, because the technology is already here.”

Reference: “Griefbots, Deadbots, Postmortem Avatars: on Responsible Applications of Generative AI in the Digital Afterlife Industry” by Tomasz Hollanek and Katarzyna Nowaczyk-Basińska, 9 May 2024, Philosophy & Technology.

DOI: 10.1007/s13347-024-00744-w

Cambridge Experts Warn: AI “Deadbots” Could Digitally “Haunt” Loved Ones From Beyond the Grave