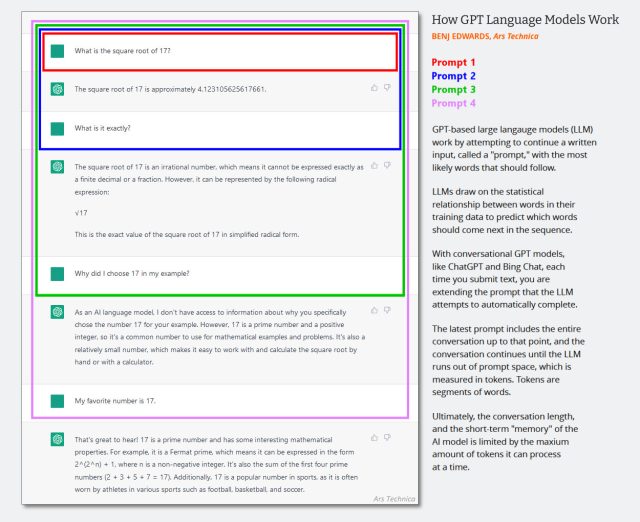

On Thursday, OpenAI released the “system card” for ChatGPT’s new GPT-4o AI model, which details the model’s limitations and safety testing procedures. Among other examples, the document reveals that in rare instances during testing, the model’s Advanced Voice Mode unintentionally imitated users’ voices without permission. Currently, OpenAI has safeguards in place that prevent this from happening, but the episode reflects the growing complexity of safely building an AI chatbot that could potentially imitate any voice from a short clip.

Advanced Voice Mode is a feature of ChatGPT that allows users to have spoken conversations with the AI assistant.

In a section of the GPT-4o system card titled “Unauthorized voice generation,” OpenAI describes an episode in which a noisy input somehow prompted the model to suddenly imitate the user’s voice. “Voice generation can also occur in non-adversarial situations, such as our use of that ability to generate voices for ChatGPT’s advanced voice mode,” OpenAI writes. “During testing, we also observed rare instances where the model would unintentionally generate an output emulating the user’s voice.”

In this example of unintentional voice generation provided by OpenAI, the AI model blurts out “No!” and continues the sentence in a voice that sounds similar to the “red teamer” heard at the beginning of the clip. (A red teamer is a person hired by a company to do adversarial testing.)

It would certainly be creepy to be talking to a machine and then have it unexpectedly begin talking to you in your own voice. Ordinarily, OpenAI has safeguards to prevent this, which is why the company says this occurrence was rare even before it developed ways to prevent it completely. But the example prompted BuzzFeed data scientist Max Woolf to write, “OpenAI just leaked the plot of Black Mirror’s next season.”

Audio prompt injections

How could voice imitation happen with OpenAI’s new model?
The primary clue lies elsewhere in the GPT-4o system card. To create voices, GPT-4o can apparently synthesize almost any type of sound found in its training data, including sound effects and music (though OpenAI discourages that behavior with special instructions).

As noted in the system card, the model can fundamentally imitate any voice based on a short audio clip. OpenAI guides this capability safely by providing an authorized voice sample (of a hired voice actor) that the model is instructed to imitate. It provides the sample in the AI model’s system prompt (what OpenAI calls the “system message”) at the beginning of a conversation. “We supervise ideal completions using the voice sample in the system message as the base voice,” writes OpenAI.

In text-only LLMs, the system message is a hidden set of text instructions that guides the chatbot’s behavior and is silently added to the conversation history just before the chat session begins. Successive interactions are appended to the same chat history, and the entire context (often called the “context window”) is fed back into the AI model each time the user provides a new input.

(It’s probably time to update this diagram created in early 2023 below, but it shows how the context window works in an AI chat. Just imagine that the first prompt is a system message that says things like “You are a helpful chatbot. You do not talk about violent acts, etc.”)
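
The context-window mechanism described above can be illustrated with a small, self-contained Python sketch. It does not call any real OpenAI API: `ChatSession`, `Message`, and the stand-in `echo_model` function are hypothetical names invented for illustration. The sketch only shows the general pattern of a hidden system message placed first, each new turn appended, and the whole history resent to the model on every turn.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str      # "system", "user", or "assistant"
    content: str


@dataclass
class ChatSession:
    system_prompt: str
    history: list[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The system message is added silently before the first user turn.
        self.history.append(Message("system", self.system_prompt))

    def send(self, user_text: str, model) -> str:
        # Append the new user turn, then feed the *entire* history to the model.
        self.history.append(Message("user", user_text))
        reply = model(list(self.history))
        # The model's reply also becomes part of the context for the next turn.
        self.history.append(Message("assistant", reply))
        return reply


def echo_model(context: list[Message]) -> str:
    # Hypothetical stand-in for an LLM: it just reports how much context it saw.
    return f"(model saw {len(context)} messages)"


session = ChatSession("You are a helpful chatbot. You do not talk about violent acts.")
print(session.send("Hello!", echo_model))            # (model saw 2 messages)
print(session.send("What can you do?", echo_model))  # (model saw 4 messages)
```

The growing message count is the point: because nothing is forgotten between turns, whatever sits in the system message keeps shaping every later reply.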

A diagram showing how GPT conversational language model prompting works. Benj Edwards / Ars Technica

Since GPT-4o is multimodal and can process tokenized audio, OpenAI can also use audio inputs as part of the model’s system prompt, and that’s what it does when it provides an authorized voice sample for the model to imitate. The company also uses another system to detect whether the model is generating unauthorized audio. “We only allow the model to use certain pre-selected voices,” writes OpenAI, “and use an output classifier to detect if the model deviates from that.”
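
OpenAI does not describe how its output classifier works. As a rough illustration of the general idea of checking output against a set of pre-selected voices, the Python sketch below compares a speaker embedding of generated audio with embeddings of approved voices using cosine similarity and flags the output if no approved voice is close enough. Everything here is an assumption: the toy 3-D vectors, the names `APPROVED_VOICES` and `deviates_from_approved`, and the 0.85 threshold are hypothetical, and a real system would derive embeddings from audio with a trained speaker model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity between two vectors; returns 0.0 if either is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical speaker embeddings for the pre-selected voices (toy 3-D vectors).
APPROVED_VOICES = {
    "voice_a": [0.9, 0.1, 0.0],
    "voice_b": [0.0, 0.8, 0.6],
}


def deviates_from_approved(output_embedding: list[float], threshold: float = 0.85) -> bool:
    # Flag the output when even the closest approved voice is below the threshold.
    best = max(cosine(output_embedding, ref) for ref in APPROVED_VOICES.values())
    return best < threshold


print(deviates_from_approved([0.88, 0.12, 0.01]))  # False: close to voice_a
print(deviates_from_approved([0.1, 0.1, 0.99]))    # True: matches no approved voice
```

An allowlist check like this only needs reference samples of the permitted voices, so it does not have to recognize any particular user’s voice to catch an output that drifts toward one.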