Key Takeaways

Specialised LLMs like StarCoder2 offer strong, specialised performance without needing a lot of hardware.

Smaller models, such as Vicuna-7B, are becoming more popular because they're easier to deploy and use fewer resources.

The future of AI leans toward specific, specialised LLMs, such as those that focus on coding.

LLMs are powerful tools, and ChatGPT, Microsoft Copilot, and Google Gemini never fail to blow me away. Their capabilities are many, but they aren't without their faults. Hallucinations are a big problem with LLMs like these, even though the companies behind them are aware of the issue and try to eliminate it as much as possible. However, I don't think these models are the future of LLMs. I believe the future of AI lies in small, specialised models, rather than the tools we have today.

Specialised LLMs have minimal hardware requirements


Imagine you're a business, and you want to deploy an internal LLM that can help your developers write code. You could pay for the full-size GPT-4 Turbo, with a fee on every request... or you could use StarCoder2, the LLM from Nvidia, Hugging Face, and ServiceNow. It's very small at 15 billion parameters, it's free to use (apart from your own running costs), and it works really well for code. Going further, there are other coding-focused LLMs that you can use too. They can't do everything that GPT-4 can do right now, but work in this area is constantly growing, and because these models are small, there's a lot of good that can be done with them.

When it comes to even smaller models with 7 billion parameters (or fewer), there's even more on offer. For example, although it isn't a specialised model, Vicuna-7B is a model that you can run on an Android smartphone if it has enough RAM. Smaller models are easier to deploy, and if they focus on one subject, they can be trained to outperform large, general-purpose LLMs such as ChatGPT, Microsoft Copilot, or Google's Gemini in that area.

Cheaper to train than a large model

Easy for companies to build themselves

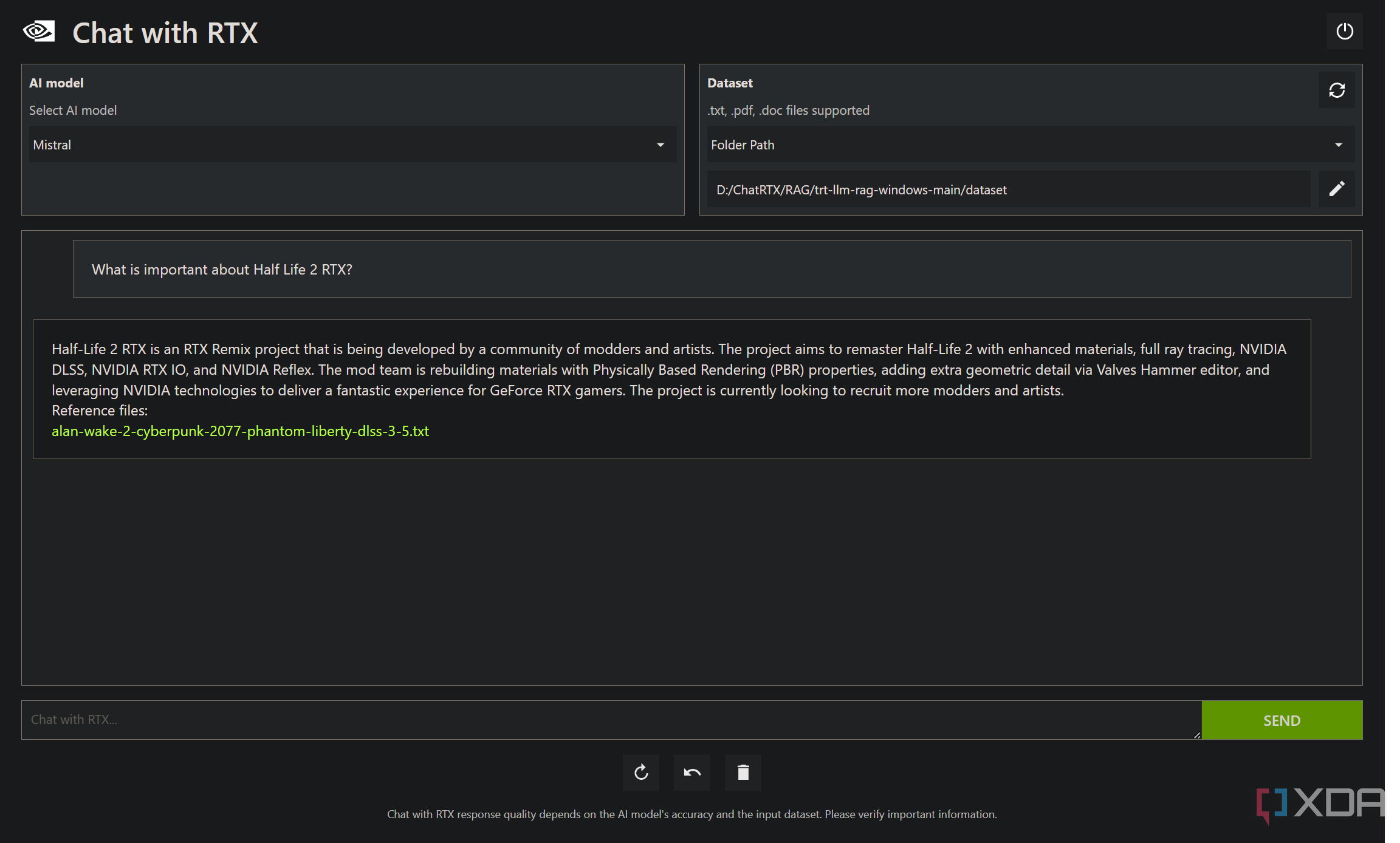

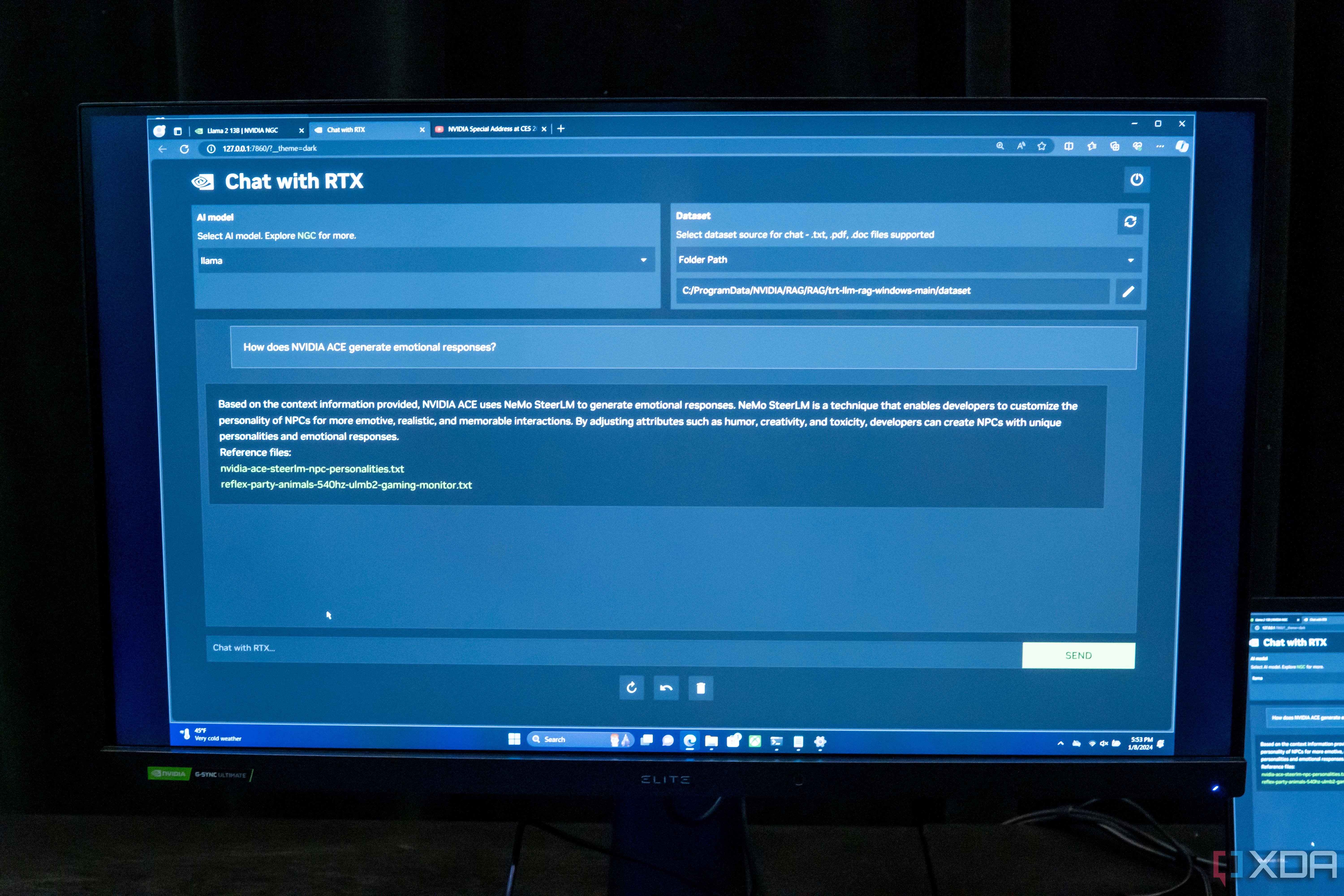

Another benefit of small models is that there are fewer requirements and financial restrictions for big companies that want to develop their own language model. With a small team focused on a few topics, there's a very low barrier to entry. Going further, Retrieval-Augmented Generation (RAG), as used by Nvidia's Chat with RTX, makes it possible to deploy a small language model that doesn't need to be trained on your data at all. Instead, it can simply pull answers from your text and tell the user the exact file where the answer was found, so that the user can check that the answer is correct.

So, while the likes of ChatGPT have their place, it's doubtful that those models are the future of where AI will take us. It's all well and good, but if we want to use LLMs as tools, then those tools should be experts in what they were trained to do. GPT-4 isn't an expert in anything; it's a language model designed to generate text. On top of that, you don't need something as powerful as GPT-4 for most applications, and it's cheaper to use something simpler.

For example, consider an LLM that manages a smart building. Why does that model need sections full of programming knowledge? Something like that, if placed in a person's home, can be trained on a small dataset with the right parameters. It can be an expert in smart home management, without wasting valuable resources on parts of the network it doesn't need.
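To make the retrieval half of RAG more concrete, here is a minimal Python sketch. It is not how Chat with RTX works internally: real RAG pipelines typically use neural embeddings and a vector index, then hand the retrieved text to the LLM to phrase an answer. This sketch swaps in simple bag-of-words cosine similarity and skips the generation step, but it shows the key property described above: the answer comes from a specific passage, and the source file travels with it so the user can check it. The function names, file names, and file contents are hypothetical, made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: dict[str, str]) -> tuple[str, str, float]:
    """Return (filename, passage, score) for the passage most similar to the question.

    Each document is split into sentence-sized passages, and the filename is kept
    with every passage so the answer can be traced back to its source.
    If nothing overlaps with the question, the result is ("", "", 0.0).
    """
    query = Counter(tokenize(question))
    best = ("", "", 0.0)
    for filename, text in documents.items():
        # Split after sentence-ending punctuation to get small, citable passages.
        for passage in re.split(r"(?<=[.!?])\s+", text):
            score = cosine(query, Counter(tokenize(passage)))
            if score > best[2]:
                best = (filename, passage, score)
    return best


# Hypothetical local files, standing in for a user's own documents.
docs = {
    "thermostat_manual.txt": (
        "Hold the mode button for five seconds to reset the thermostat. "
        "The display shows the current temperature."
    ),
    "lighting_guide.txt": (
        "Pair a new bulb by switching it on and off three times. "
        "Bulbs support dimming from the app."
    ),
}

filename, passage, score = retrieve("How do I reset the thermostat?", docs)
print(f"{passage} (source: {filename})")
# prints: Hold the mode button for five seconds to reset the thermostat. (source: thermostat_manual.txt)
```

Because the filename is returned alongside the passage, the model only has to rephrase text it was handed rather than recall facts from training, which is part of why a small model can do this job well.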

The future of AI is specialised

General-purpose LLMs still have their place


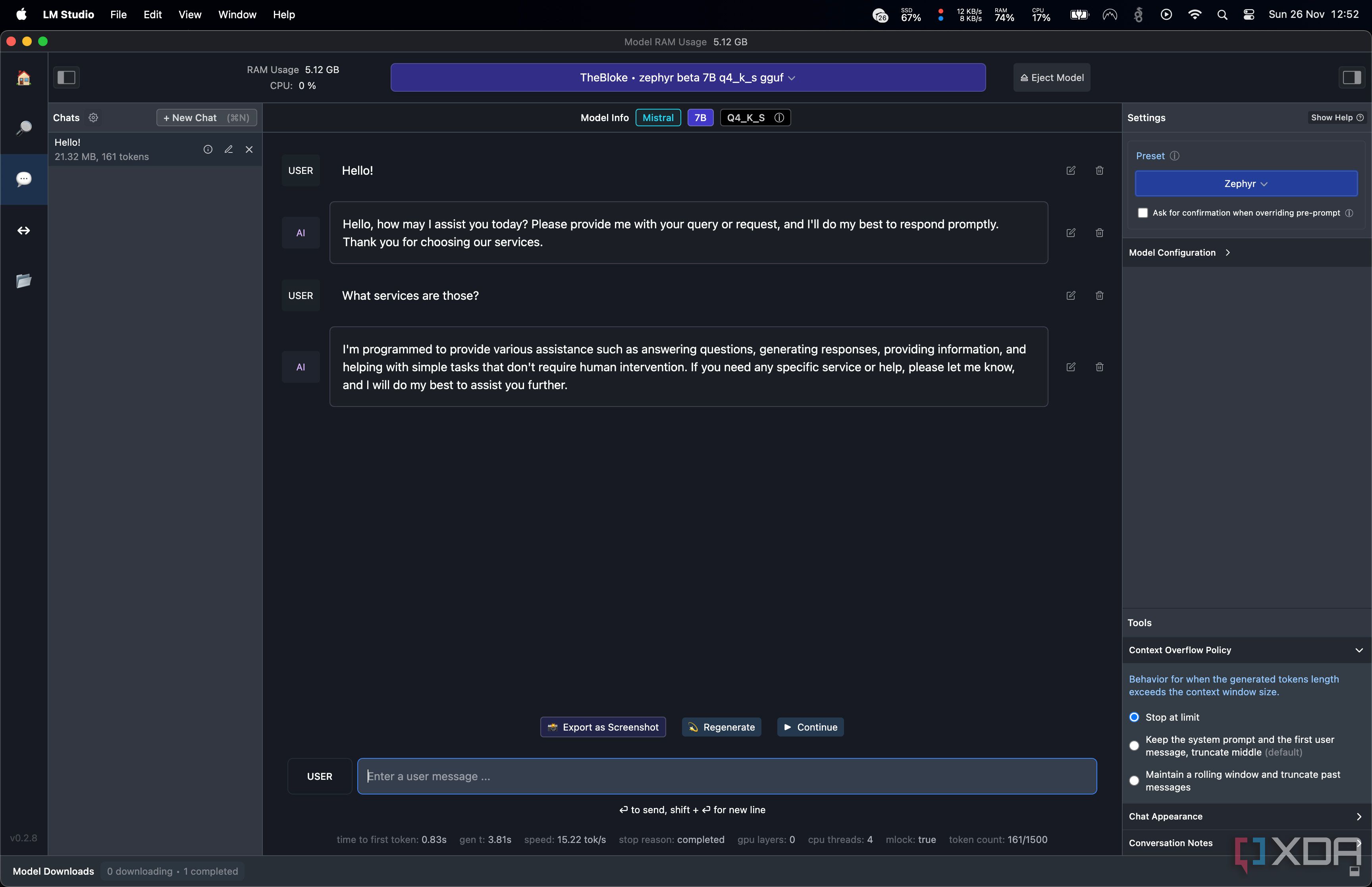

All in all, general-purpose LLMs will have their place, but the future of powerful AI lies in small, specialised models. We already have small models like Vicuna-7B that can run on devices that fit in our pockets. A 7-billion-parameter model can do a lot when it's used for a specific purpose, and that's where I believe the industry is headed. StarCoder2 is an example of that, and with RAG starting to take off as well, I expect we'll see fewer heavyweight models and more small, accurate models instead.

If you want to try some small LLMs yourself, you can use tools like LM Studio with a powerful GPU. It's not difficult as long as you have plenty of VRAM, and there are many unique and varied models to try. There's something for everyone, and once you try it, you'll understand why the future of AI will be these models that anyone can run, anywhere, at any time.
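How much VRAM counts as "plenty"? A common rule of thumb is that the weights alone take roughly parameters × bits per parameter ÷ 8 bytes. The minimal Python sketch below applies that to the two models named in this article, using their nominal 7-billion and 15-billion parameter counts. It's an estimate under stated assumptions: it ignores the KV cache, activations, and runtime overhead, so actual usage will be higher, and the precisions listed are just common choices rather than what any particular tool uses by default.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Rough memory needed just to store a model's weights, in GB (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the same precision. The KV cache,
    activations, and runtime overhead are ignored, so real usage is higher.
    """
    return num_params * bits_per_param / 8 / 1e9


# Nominal parameter counts as quoted in the article (actual counts differ slightly).
MODELS = {"Vicuna-7B": 7e9, "StarCoder2-15B": 15e9}
# Common storage precisions: full 16-bit weights and two quantised variants.
PRECISIONS = {"16-bit": 16, "8-bit": 8, "4-bit": 4}

for name, params in MODELS.items():
    sizes = ", ".join(
        f"{label}: {weight_memory_gb(params, bits):.1f} GB" for label, bits in PRECISIONS.items()
    )
    print(f"{name} -> {sizes}")
# prints:
# Vicuna-7B -> 16-bit: 14.0 GB, 8-bit: 7.0 GB, 4-bit: 3.5 GB
# StarCoder2-15B -> 16-bit: 30.0 GB, 8-bit: 15.0 GB, 4-bit: 7.5 GB
```

At 4-bit precision, a 7-billion-parameter model needs roughly 3.5 GB for its weights, which helps explain how a model like Vicuna-7B can fit on a phone with enough RAM, while StarCoder2 at 16-bit would need around 30 GB before any overhead.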

