There has been a lot of AI news this week, and covering it can sometimes feel like running through a hall full of creepy CRTs, as this Getty Images photo shows.

It's been a busy week in AI news thanks to OpenAI, including controversial blog posts from CEO Sam Altman, the rollout of Advanced Voice Mode, rumors of a 5GW data center, major staff shake-ups, and unexpected restructuring plans. But the rest of the AI world doesn't march to the same beat; it has been just as busy, releasing new AI models and research by the minute. Here are some of the hottest AI stories from the past week.

Google Gemini updates

On Tuesday, Google announced updates to its Gemini model lineup, including the release of two new production-ready models that iterate on earlier releases: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The company also reported improvements across the board, with gains in math, long-context handling, and vision tasks. Google claims a 7 percent increase in performance on the MMLU-Pro benchmark and a 20 percent improvement in math-related tasks. But as you know if you've been reading Ars Technica for a while, AI benchmarks are not as useful as we would like them to be. Along with the model upgrades, Google introduced a price drop for Gemini 1.5 Pro, cutting the cost of input tokens by 64 percent and the cost of output tokens by 52 percent for prompts under 128,000 tokens. As AI researcher Simon Willison noted on his blog, “For comparison, GPT-4o is currently $5/[million tokens] input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the cheapest of the frontier models and now it's even cheaper.” Google has also raised rate limits: Gemini 1.5 Flash now supports 2,000 requests per minute and Gemini 1.5 Pro handles 1,000 requests per minute. Google says the latest models offer twice the output speed and three times lower latency than the previous versions.
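To make the per-million-token pricing in Willison's quote concrete, here is a minimal Python sketch of the arithmetic. The two price entries come from the quote; the request size and the helper names `request_cost` and `apply_cut` are illustrative assumptions, and Gemini 1.5 Pro is left out of the table because its absolute prices are not stated here.

```python
# Prices in USD per million tokens, taken from the Simon Willison quote.
PRICES_PER_MILLION = {
    "GPT-4o": {"input": 5.00, "output": 15.00},
    "Claude 3.5 Sonnet": {"input": 3.00, "output": 15.00},
}


def request_cost(model, input_tokens, output_tokens):
    """Return the USD cost of one request at the quoted per-million prices."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


def apply_cut(old_price, percent_cut):
    """Return a price after a percentage reduction (e.g. 64 for a 64% cut)."""
    return old_price * (1 - percent_cut / 100)


if __name__ == "__main__":
    # Hypothetical request: 10,000 input tokens and 1,000 output tokens.
    for model in PRICES_PER_MILLION:
        print(f"{model}: ${request_cost(model, 10_000, 1_000):.3f}")
```

For a price cut like the one Google describes, `apply_cut(old_price, 64)` would give the new input price for any known starting price; a 64 percent cut leaves 36 percent of the original cost.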

On Wednesday, Meta announced the release of Llama 3.2, a significant update to its open-weights AI model family that we have covered extensively in the past. The new release includes vision-capable large language models (LLMs) in 11B and 90B parameter sizes, as well as lightweight text-only models of 1B and 3B parameters designed for mobile devices. Meta says the vision models are competitive with leading closed models on image recognition and visual understanding tasks, while the smaller models are said to outperform similarly sized competitors on a range of text-based tasks. Willison experimented with the smaller 3.2 models and reported impressive results for their size. AI researcher Ethan Mollick showed Llama 3.2 running on his iPhone using an app called PocketPal. Meta also introduced the first official “Llama Stack” distributions, which are designed to simplify development and deployment across different environments. As with previous releases, Meta is making the models available for free download, with license restrictions. The new models support long context windows of up to 128,000 tokens.

Google’s AlphaChip AI helps chip design

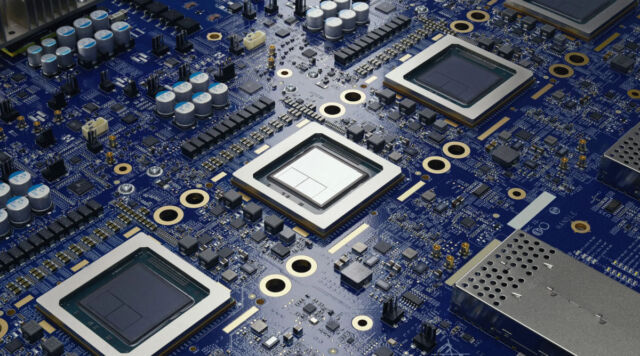

On Thursday, Google DeepMind announced what appears to be a significant advance in AI-driven chip design, AlphaChip. It began as a research project in 2020 and is now a reinforcement learning method for designing chip layouts. Google says it has used AlphaChip to create “superhuman chip layouts” in the past three generations of its Tensor Processing Units (TPUs), which are GPU-like chips designed to speed up AI tasks. Google claims that AlphaChip can generate high-quality chip layouts in hours, compared to weeks or months of human effort. (Reportedly, Nvidia has also been using AI to help design its own chips.) Notably, Google also released a pre-trained AlphaChip checkpoint on GitHub, sharing the model weights with the public. The company said that AlphaChip's impact has already spread beyond Google, with chip companies such as MediaTek adopting and building on the technology for their own chips. According to Google, AlphaChip has sparked a new line of research in AI for chip design, which could improve every stage of the chip design cycle, from computer architecture to manufacturing.

That wasn't everything that happened, but those are some of the big ones. With the AI industry showing no signs of slowing down yet, we'll see how next week unfolds.