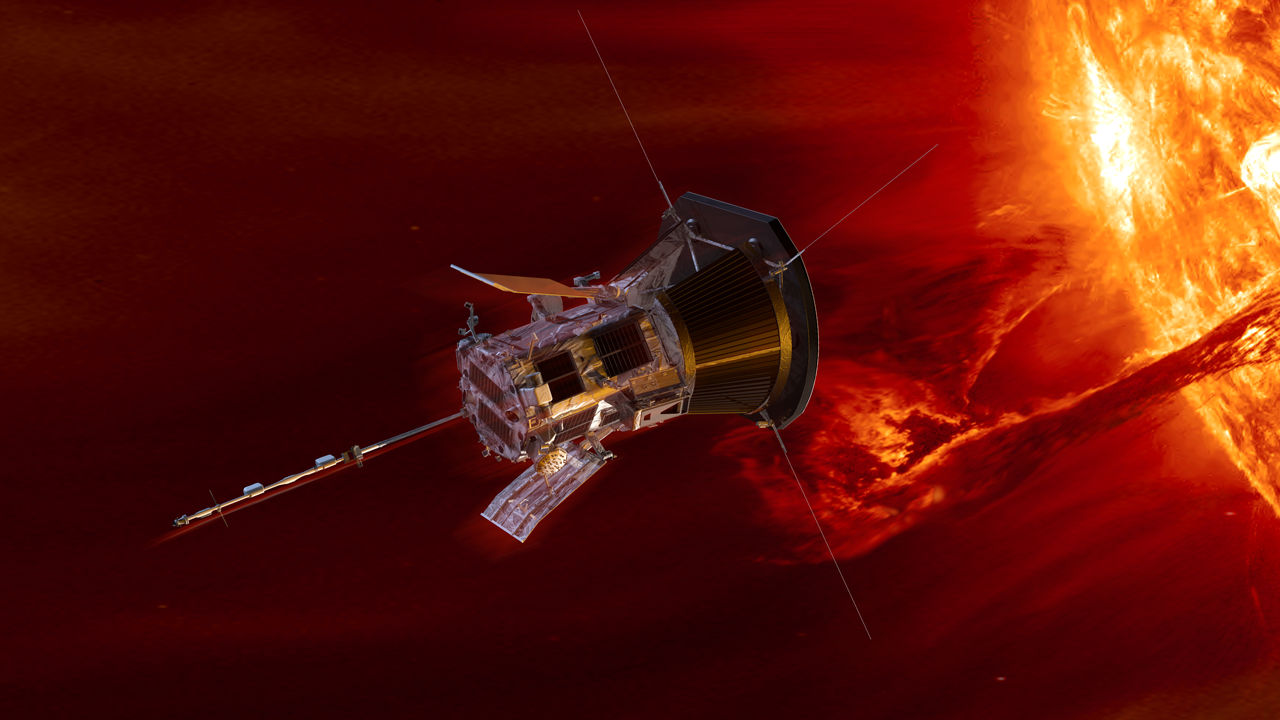

In an open-plan office in Mountain View, California, a tall, slender robot has been busy playing tour guide and informal office helper, thanks to a large language model upgrade, Google DeepMind revealed today. The robot uses the latest version of Gemini, Google's flagship large language model, both to parse commands and to find its way around.

When a human tells it "Find me somewhere to write," for instance, the robot dutifully trundles off, leading the person to a whiteboard located somewhere in the building.

Gemini's ability to handle video and text, combined with its capacity to ingest large amounts of information such as previously recorded video tours of the office, allows the "Google helper" robot to make sense of its environment and move in the right direction when given commands that require commonsense reasoning. The robot combines Gemini with an algorithm that generates specific actions for it to take, such as turning, in response to commands and to what it sees in front of it.

When Gemini was introduced in December, Demis Hassabis, CEO of Google DeepMind, told WIRED that its multimodal capabilities could open up new possibilities for robotics. He added that the company's researchers were hard at work testing its robotic potential.

In a new paper describing the project, the researchers say their robot proved to be 90 percent reliable at navigating, even when given tricky commands such as "Where did I leave my coaster?" The team writes that DeepMind's system has made human-robot interaction far more natural and greatly increased the robot's usability.

Courtesy of Google DeepMind
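To make the two-stage design described above more concrete, here is a minimal Python sketch built entirely on assumptions. The article says only that Gemini is paired with an algorithm that turns commands and camera input into motions such as turns. Everything below is hypothetical: the tour frames and captions, the `LINKS` graph, the `ASSOCIATIONS` table, and the functions `select_goal` and `plan_actions`. The high-level step is a toy keyword lookup standing in for Gemini, and the low-level step is ordinary breadth-first search, a swapped-in technique that is not attributed to DeepMind.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical tour frames: IDs for stops recorded during a guided video tour,
# with text captions standing in for what a vision model would see there.
TOUR_FRAMES: Dict[int, str] = {
    0: "lobby with reception desk",
    1: "hallway outside the kitchen",
    2: "kitchen with coffee machine",
    3: "meeting room with whiteboard",
}

# Hypothetical links between neighboring frames, each labeled with the motion
# that takes the robot from one frame to the next.
LINKS: Dict[int, Dict[int, str]] = {
    0: {1: "forward"},
    1: {0: "turn_around", 2: "turn_left", 3: "turn_right"},
    2: {1: "turn_around"},
    3: {1: "turn_around"},
}

# Toy substitute for commonsense reasoning: maps a need mentioned in a command
# to a landmark that satisfies it. In the real robot, Gemini makes this call.
ASSOCIATIONS: Dict[str, str] = {"write": "whiteboard", "coffee": "coffee machine"}


def select_goal(command: str) -> Optional[int]:
    """High-level step (stubbed): return the tour frame that fits the command,
    or None if no known need is mentioned."""
    lowered = command.lower()
    for need, landmark in ASSOCIATIONS.items():
        if need in lowered:
            for frame_id, caption in TOUR_FRAMES.items():
                if landmark in caption:
                    return frame_id
    return None


def plan_actions(start: int, goal: int) -> List[str]:
    """Low-level step: breadth-first search over the tour graph, returning the
    shortest list of motions from start to goal (empty if start == goal or
    the goal is unreachable)."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        frame, actions = queue.popleft()
        if frame == goal:
            return actions
        for neighbor, motion in LINKS[frame].items():
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, actions + [motion]))
    return []


if __name__ == "__main__":
    goal = select_goal("Find me somewhere to write")
    if goal is not None:
        print(goal, plan_actions(0, goal))  # 3 ['forward', 'turn_right']
```

Splitting the work this way leaves the language model responsible only for deciding where to go, while a conventional planner works out how to get there. The article does not say whether DeepMind's system divides the labor exactly like this.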

Photograph: Muinat Abdul; Google DeepMind

The demo neatly illustrates the potential for large language models to reach into the physical world and do useful work. Gemini and other chatbots mostly operate within the confines of a web browser or app, although they can also handle visual and audio input, as both Google and OpenAI have recently demonstrated. In May, Hassabis showed off an upgraded version of Gemini that can make sense of an office as seen through a smartphone camera.

Academic and industry research labs are racing to see how language models might be used to enhance robots' abilities. The May program for the International Conference on Robotics and Automation, a popular event for robotics researchers, lists about two dozen papers that involve the use of vision language models. Several researchers who worked at Google have left the company to found a startup called Physical Intelligence, which received an initial $70 million in funding; it is working to combine large language models with real-world training to give robots problem-solving abilities. Skild AI, founded by roboticists at Carnegie Mellon University, has a similar goal. This month it announced $300 million in funding.

Just a few years ago, a robot would have needed a map of its environment and carefully chosen commands to navigate. Large language models contain useful information about the physical world, and newer versions trained on images and video as well as text, known as vision language models, can answer questions that require perception. Gemini allows Google's robot to parse visual instructions as well as spoken ones, following a sketch on a whiteboard that shows a route to a new destination. In their paper, the researchers say they plan to test the system on different kinds of robots. They add that Gemini should be able to make sense of more complex questions, such as "Do they have my favorite drink today?" from a user with lots of empty Coke cans on their desk.

Google DeepMind's Chatbot-Powered Robot Is Part of a Bigger Revolution