Summary: A new study reveals how our brain distinguishes between music and speech using simple acoustic parameters. Researchers found that slower, steady sounds are perceived as music, while faster, irregular sounds are perceived as speech. These insights could help optimize therapeutic programs for language disorders like aphasia. The research provides a deeper understanding of auditory processing.

Key Facts:

- Simple Parameters: The brain uses basic acoustic parameters to differentiate music from speech.
- Therapeutic Potential: The findings could improve therapies for language disorders like aphasia.
- Research Details: The study involved over 300 participants listening to synthesized audio clips.

Source: NYU

Music and speech are among the most common types of sounds we hear. But how do we identify what we think are differences between the two? An international team of researchers mapped out this process through a series of experiments, yielding insights that offer a potential means to optimize therapeutic programs that use music to help people with aphasia regain the ability to speak.
This language disorder afflicts more than 1 in 300 Americans each year, including Wendy Williams and Bruce Willis.

“Although music and speech are different in many ways, ranging from pitch to timbre to sound texture, our results show that the auditory system uses strikingly simple acoustic parameters to distinguish music and speech,” explains Andrew Chang, a postdoctoral fellow in New York University’s Department of Psychology and the lead author of the paper, which appears in the journal PLOS Biology.

“Overall, slower and steady sound clips of mere noise sound more like music while the faster and irregular clips sound more like speech.”

Scientists measure the rate of a signal in hertz (Hz), the number of occurrences (or cycles) per second; a higher number of Hz means more cycles per second. For instance, people typically walk at a pace of 1.5 to 2 steps per second, which is 1.5-2 Hz.

The beat of Stevie Wonder’s 1972 hit “Superstition” is approximately 1.6 Hz, while Anna Karina’s 1967 smash “Roller Girl” clocks in at 2 Hz. Speech, by contrast, is typically two to three times faster than that, at 4-5 Hz.

It has been well documented that a song’s volume, or loudness, over time (what’s known as “amplitude modulation”) is relatively steady at 1-2 Hz. In contrast, the amplitude modulation of speech is typically 4-5 Hz, meaning its volume changes frequently.

Despite the ubiquity and familiarity of music and speech, scientists previously lacked a clear understanding of how we effortlessly and automatically identify a sound as music or speech.
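The rates above are simple arithmetic: hertz is events per second, so a tempo quoted in beats per minute is divided by 60 (96 beats per minute is 1.6 Hz). Amplitude modulation can be made similarly concrete by multiplying white noise by a slowly rising and falling loudness envelope, then reading off the strongest envelope fluctuation, in the spirit of the “peak AM frequency” named in the paper’s abstract. What follows is a minimal Python/NumPy sketch; the 16 kHz sample rate, the sinusoidal envelope, the FFT-based Hilbert envelope, and all function names are illustrative assumptions, not the study’s stimulus or analysis code.

```python
import numpy as np

def rate_hz(events: float, seconds: float) -> float:
    """Rate in hertz: events (cycles) per second."""
    return events / seconds

def bpm_to_hz(bpm: float) -> float:
    """Convert a tempo in beats per minute to beats per second (Hz)."""
    return bpm / 60.0

def am_noise(am_rate_hz: float, duration_s: float = 4.0, fs: int = 16000,
             depth: float = 1.0, seed: int = 0) -> np.ndarray:
    """White noise whose loudness rises and falls sinusoidally at am_rate_hz (assumed design)."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(duration_s * fs)) / fs
    carrier = rng.standard_normal(t.size)
    # Envelope stays non-negative for depth <= 1 (it ranges 0..2 when depth == 1).
    envelope = 1.0 + depth * np.sin(2 * np.pi * am_rate_hz * t)
    return carrier * envelope

def amplitude_envelope(x: np.ndarray) -> np.ndarray:
    """Envelope as the magnitude of the analytic signal (FFT-based Hilbert transform)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))

def peak_am_frequency(x: np.ndarray, fs: int = 16000, fmax: float = 20.0) -> float:
    """Frequency (Hz) of the strongest envelope fluctuation above 0.25 Hz and up to fmax."""
    env = amplitude_envelope(x)
    env = env - env.mean()                       # drop the DC term so it cannot win
    power = np.abs(np.fft.rfft(env)) ** 2
    freqs = np.fft.rfftfreq(env.size, d=1.0 / fs)
    band = (freqs > 0.25) & (freqs <= fmax)      # skip very slow drift
    return float(freqs[band][np.argmax(power[band])])

if __name__ == "__main__":
    print(rate_hz(3, 2.0))                           # 3 steps in 2 seconds
    print(bpm_to_hz(96))                             # a 96 BPM beat
    print(peak_am_frequency(am_noise(1.5)))          # music-like modulation rate
    print(peak_am_frequency(am_noise(4.5, seed=1)))  # speech-like modulation rate
```

With a 4-second clip the spectrum has 0.25 Hz resolution, so the recovered peak should sit near the 1.5 Hz or 4.5 Hz rate that was imposed; shorter clips would blur that estimate.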
To better understand this process in their PLOS Biology study, Chang and colleagues conducted a series of four experiments in which more than 300 participants listened to audio segments of synthesized music-like and speech-like noise with various amplitude modulation speeds and degrees of regularity. The noise clips allowed listeners to detect only volume and speed. Participants were asked to judge whether these ambiguous clips, which they were told were noise-masked music or speech, sounded like music or speech.

Observing the pattern in how participants sorted hundreds of noise clips as either music or speech revealed how much each speed and/or regularity feature affected their judgment. It’s the auditory version of “seeing faces in the clouds,” the scientists conclude: if there’s a certain feature in the soundwave that matches listeners’ idea of how music or speech should sound, even a white noise clip can sound like music or speech.

The results showed that our auditory system uses surprisingly simple and basic acoustic parameters to distinguish music and speech: to participants, clips with slower rates (<2 Hz) and more regular amplitude modulation sounded more like music, while clips with higher rates (~4 Hz) and more irregular amplitude modulation sounded more like speech.

Understanding how the human brain differentiates between music and speech can potentially benefit people with auditory or language disorders such as aphasia, the authors note. Melodic intonation therapy, for instance, is a promising approach to train people with aphasia to sing what they want to say, using their intact “musical mechanisms” to bypass damaged speech mechanisms.
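The article describes the stimuli only as noise with various amplitude modulation speeds and regularity. One way to picture the regularity dimension is to build a loudness envelope from individual cycles whose durations are jittered around 1/rate, and to summarize irregularity as the coefficient of variation of those durations. The Python/NumPy sketch below does this and adds a deliberately crude logistic “listener” that encodes only the direction of the reported effects (faster and more irregular means more speech-like). The jitter scheme, the coefficient-of-variation measure, and every weight and midpoint in `toy_p_speech` are hypothetical choices, not parameters from the study; multiplying such an envelope by a noise carrier would turn it into a clip.

```python
import numpy as np

def jittered_am_envelope(rate_hz: float, jitter: float, duration_s: float = 4.0,
                         fs: int = 1000, seed: int = 0):
    """Envelope built from raised-cosine cycles whose lengths vary around 1/rate_hz.

    jitter is the coefficient of variation of cycle durations (hypothetical knob):
    0.0 gives a perfectly regular envelope, larger values give irregular ones.
    Returns (envelope, cycle_durations_in_seconds).
    """
    rng = np.random.default_rng(seed)
    n_total = int(duration_s * fs)
    cycles, durations, n = [], [], 0
    while n < n_total:
        # Floor at 50 ms so a large negative draw cannot produce a degenerate cycle.
        d = max(0.05, rng.normal(1.0 / rate_hz, jitter / rate_hz))
        m = max(2, int(round(d * fs)))
        # One loudness "bump": rises from 0 to 1 and falls back toward 0.
        cycles.append(0.5 * (1 - np.cos(2 * np.pi * np.arange(m) / m)))
        durations.append(m / fs)
        n += m
    return np.concatenate(cycles)[:n_total], np.array(durations)

def regularity_cv(durations: np.ndarray) -> float:
    """Coefficient of variation of cycle durations (lower means more regular)."""
    return float(np.std(durations) / np.mean(durations))

def toy_p_speech(rate_hz: float, cv: float, w_rate: float = 1.5, w_cv: float = 4.0,
                 rate_mid: float = 3.0, cv_mid: float = 0.2) -> float:
    """Hypothetical logistic listener: higher rate and more irregularity push toward 'speech'."""
    score = w_rate * (rate_hz - rate_mid) + w_cv * (cv - cv_mid)
    return float(1.0 / (1.0 + np.exp(-score)))

if __name__ == "__main__":
    _, d_music = jittered_am_envelope(1.5, jitter=0.0)          # slow, regular
    _, d_speech = jittered_am_envelope(4.5, jitter=0.4, seed=1)  # fast, irregular
    print(toy_p_speech(1.5, regularity_cv(d_music)))
    print(toy_p_speech(4.5, regularity_cv(d_speech)))
```

A logistic curve is used here only because it turns the two features into a probability of answering “speech”; the study reports the direction of each effect, not these particular numbers.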
Therefore, understanding what makes music and speech similar or distinct in the brain can help design more effective rehabilitation programs.

The paper’s other authors were Xiangbin Teng of the Chinese University of Hong Kong, M. Florencia Assaneo of the National Autonomous University of Mexico (UNAM), and David Poeppel, a professor in NYU’s Department of Psychology and managing director of the Ernst Strüngmann Institute for Neuroscience in Frankfurt, Germany.

Funding: The research was supported by a grant from the National Institute on Deafness and Other Communication Disorders, part of the National Institutes of Health (F32DC018205), and by Leon Levy Scholarships in Neuroscience.

About this auditory neuroscience research news

Author: James Devitt

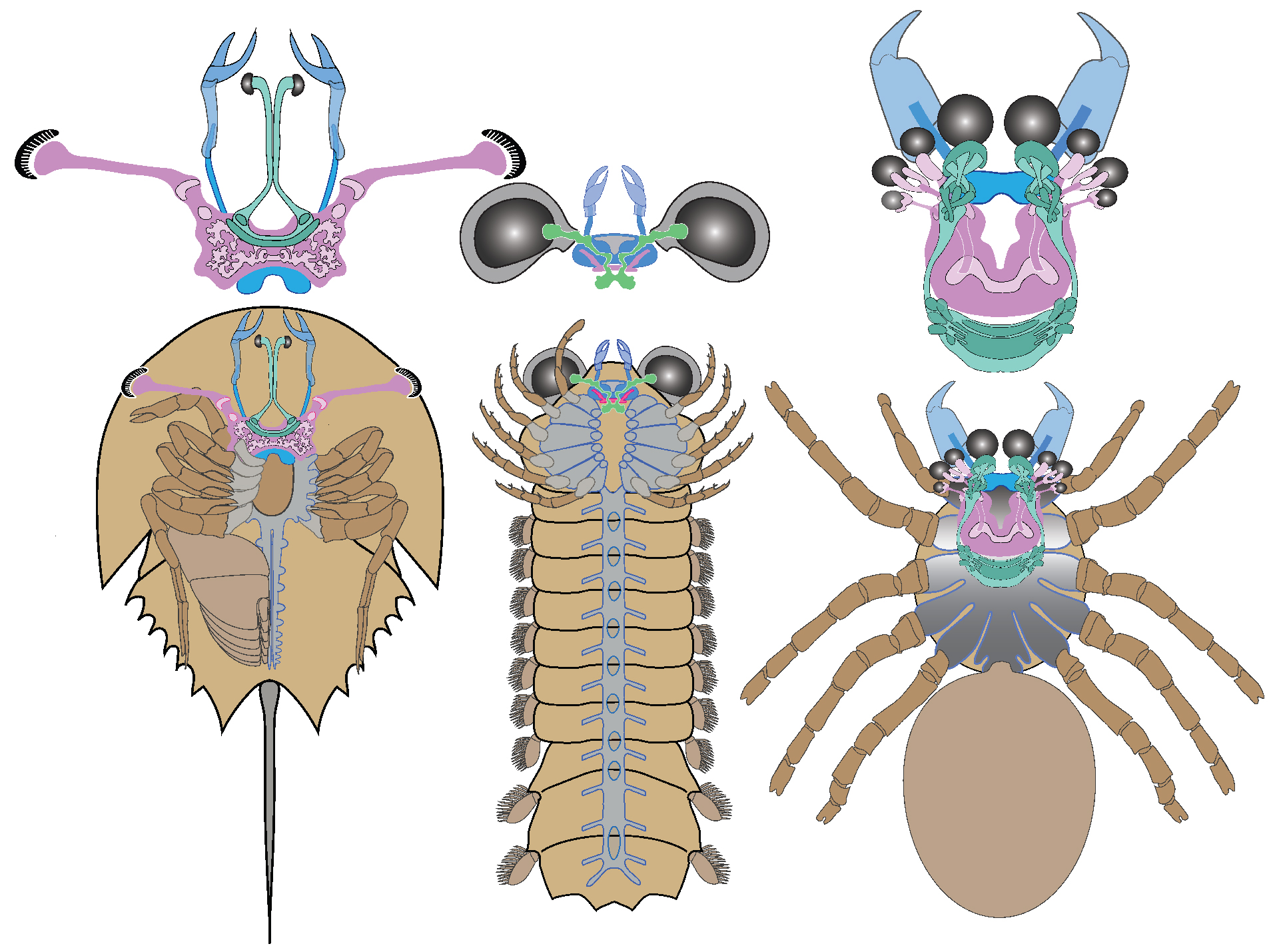

Understanding how the human brain differentiates between music and speech can potentially benefit people with auditory or language disorders such as aphasia, the authors note. Credit: Neuroscience News

Source: NYU

Contact: James Devitt – NYU

Image: The image is credited to Neuroscience News

Original Research: Open access.

“The human auditory system uses amplitude modulation to distinguish music from speech” by Andrew Chang et al. PLOS Biology

Abstract

The human auditory system uses amplitude modulation to distinguish music from speech

Music and speech are complex and distinct auditory signals that are both foundational to the human experience. The mechanisms underpinning each domain are widely investigated. However, what perceptual mechanism transforms a sound into music or speech and how basic acoustic information is required to distinguish between them remain open questions.

Here, we hypothesized that a sound’s amplitude modulation (AM), an essential temporal acoustic feature driving the auditory system across processing levels, is critical for distinguishing music and speech.

Specifically, in contrast to paradigms using naturalistic acoustic signals (that can be challenging to interpret), we used a noise-probing approach to untangle the auditory mechanism: If AM rate and regularity are critical for perceptually distinguishing music and speech, judging artificially noise-synthesized ambiguous audio signals should align with their AM parameters.

Across 4 experiments (N = 335), signals with a higher peak AM frequency tend to be judged as speech, lower as music. Interestingly, this principle is consistently used by all listeners for speech judgments, but only by musically sophisticated listeners for music. In addition, signals with more regular AM are judged as music over speech, and this feature is more critical for music judgment, regardless of musical sophistication.

The data suggest that the auditory system can rely on a low-level acoustic property as basic as AM to distinguish music from speech, a simple principle that provokes both neurophysiological and evolutionary experiments and speculations.
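The noise-probing logic in the abstract (if AM rate and regularity matter, listeners’ judgments of noise clips should line up with those AM parameters) can be illustrated by regressing binary “speech” versus “music” responses on each clip’s features and checking which weights come out large. The Python/NumPy sketch below does this with logistic regression fitted by Newton-Raphson. It runs on simulated responses: the trial count, feature ranges, and generating weights are invented for illustration and are not the study’s data, and logistic regression is a stand-in, not necessarily the authors’ analysis.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def simulate_judgments(n_trials: int = 2000, seed: int = 0):
    """Simulated responses (1 = 'speech', 0 = 'music') from a hypothetical listener.

    The generating weights below are invented for illustration only.
    """
    rng = np.random.default_rng(seed)
    rate = rng.uniform(1.0, 6.0, n_trials)   # peak AM frequency of each clip, Hz
    cv = rng.uniform(0.0, 0.6, n_trials)     # AM irregularity of each clip
    p_speech = sigmoid(-6.0 + 1.5 * rate + 4.0 * cv)
    said_speech = (rng.random(n_trials) < p_speech).astype(float)
    return rate, cv, said_speech

def fit_logistic(features: np.ndarray, y: np.ndarray, n_iter: int = 25) -> np.ndarray:
    """Logistic regression by Newton-Raphson (IRLS); returns [intercept, weights...]."""
    X = np.column_stack([np.ones(len(y)), features])
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ beta)
        grad = X.T @ (y - p)                        # gradient of the log-likelihood
        info = X.T @ (X * (p * (1 - p))[:, None])   # X^T W X, the negative Hessian
        beta += np.linalg.solve(info, grad)         # Newton step
    return beta

if __name__ == "__main__":
    rate, cv, y = simulate_judgments()
    intercept, w_rate, w_cv = fit_logistic(np.column_stack([rate, cv]), y)
    print(f"rate weight {w_rate:.2f}, irregularity weight {w_cv:.2f}")
```

Because the simulated listener was built with positive weights on both features, the fit should recover positive weights; with real responses, the sign and size of each fitted weight would indicate how strongly that AM feature pushed judgments toward speech.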

How the Brain Distinguishes Music from Speech – Neuroscience News