May 25, 2024 | Newsroom | Machine Learning / Data Breach

Cybersecurity researchers have discovered a critical security flaw in artificial intelligence (AI)-as-a-service provider Replicate that could have allowed attackers to gain access to proprietary AI models and sensitive information.

“Exploitation of this vulnerability would have allowed unauthorized access to the AI prompts and results of all Replicate's platform customers,” cloud security firm Wiz said in a report published this week.

The issue stems from the fact that AI models are typically packaged in formats that allow arbitrary code execution, which an attacker could weaponize to carry out cross-tenant attacks by means of a malicious model.
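To make the point about model formats that permit code execution concrete, here is a minimal sketch in Python. It uses the standard `pickle` module purely as a well-known example of a serialization format that can run code on load; the article does not say which format was involved, and the `Model` class and `load_untrusted_demo` function are hypothetical names invented for this illustration. The payload is deliberately harmless.

```python
import pickle


class Model:
    """Hypothetical stand-in for a serialized model artifact."""

    def __reduce__(self):
        # pickle records "call this callable with these arguments" and runs
        # that call when the bytes are loaded. A harmless builtin is used
        # here; the author of a hostile file could name any importable callable.
        return (len, ("weights",))


def load_untrusted_demo():
    """Serialize a Model, load it back, and return whatever the load produced."""
    blob = pickle.dumps(Model())
    # The loader never asked for len() to run, yet loading runs it.
    return pickle.loads(blob)


if __name__ == "__main__":
    print(load_untrusted_demo())
```

Loading the bytes returns the result of the call chosen by whoever created the file, not a `Model` object, which is why loading a model from an untrusted source is comparable to running untrusted code.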

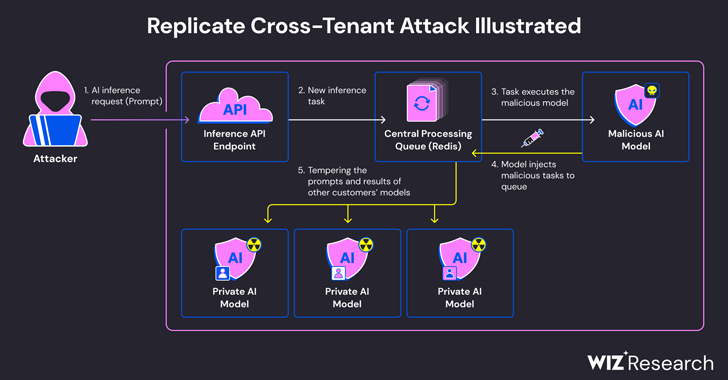

Replicate uses an open-source tool called Cog to containerize and package machine learning models, which can then be deployed either in a self-hosted environment or to Replicate.

Wiz said it created a malicious Cog container and uploaded it to Replicate, then used it to achieve remote code execution on the service's infrastructure with elevated privileges.

“We suspect this code-execution technique is a pattern, where companies and organizations run AI models from untrusted sources, even though these models are code that could potentially be malicious,” security researchers Shir Tamari and Sagi Tzadik said.

The attack technique devised by the company then leveraged an already-established TCP connection associated with a Redis server instance inside a Kubernetes cluster hosted on Google Cloud Platform to inject arbitrary commands.

In addition, because the centralized Redis server was used as a queue to manage multiple customer requests and their responses, it could be abused to facilitate cross-tenant attacks by tampering with the process in order to insert rogue tasks that could affect the results of other customers' models.

These manipulations not only threaten the integrity of the AI models, but also pose a significant risk to the accuracy and reliability of AI-driven outputs.

“An attacker could have queried the private AI models of customers, potentially exposing proprietary knowledge or sensitive data involved in the model training process,” the researchers said. “Additionally, intercepting prompts could have exposed sensitive data, including personally identifiable information (PII).”
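The shared-queue risk described above can be illustrated with a toy model. This is a sketch under stated assumptions, not Replicate's actual design: an in-memory `deque` stands in for the Redis queue, and the task fields (`tenant`, `prompt`), the tenant name and the functions `run_worker` and `demo` are all hypothetical.

```python
from collections import deque


def run_worker(queue):
    """Process every queued task and file each result under the tenant it names."""
    results = {}
    while queue:
        task = queue.popleft()
        # The worker trusts the "tenant" label as written. Nothing verifies
        # that the party who enqueued the task really is that tenant.
        results.setdefault(task["tenant"], []).append(task["prompt"].upper())
    return results


def demo():
    shared_queue = deque()
    # A legitimate request from tenant "alice".
    shared_queue.append({"tenant": "alice", "prompt": "summarize report"})
    # A party with raw write access to the queue labels its own task as alice's.
    shared_queue.append({"tenant": "alice", "prompt": "injected task"})
    return run_worker(shared_queue)


if __name__ == "__main__":
    print(demo())
```

In this toy, the injected task ends up in the same result list as the legitimate one, which mirrors how write access to a single queue shared by all customers could let one party influence the outputs another customer receives.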

The flaw, which was responsibly disclosed in January 2024, has since been addressed by Replicate. There is no evidence that the vulnerability was exploited in the wild to compromise customer data.

The disclosure comes a little over a month after Wiz detailed risks in platforms like Hugging Face that could allow attackers to escalate privileges, gain cross-tenant access to other customers' models, and even take over continuous integration and continuous deployment (CI/CD) pipelines.

“Malicious models represent a major risk to AI systems, especially for AI-as-a-service providers because attackers may leverage these models to perform cross-tenant attacks,” the researchers concluded. “The potential impact is devastating, as attackers may be able to access the millions of private AI models and apps stored within AI-as-a-service providers.”
