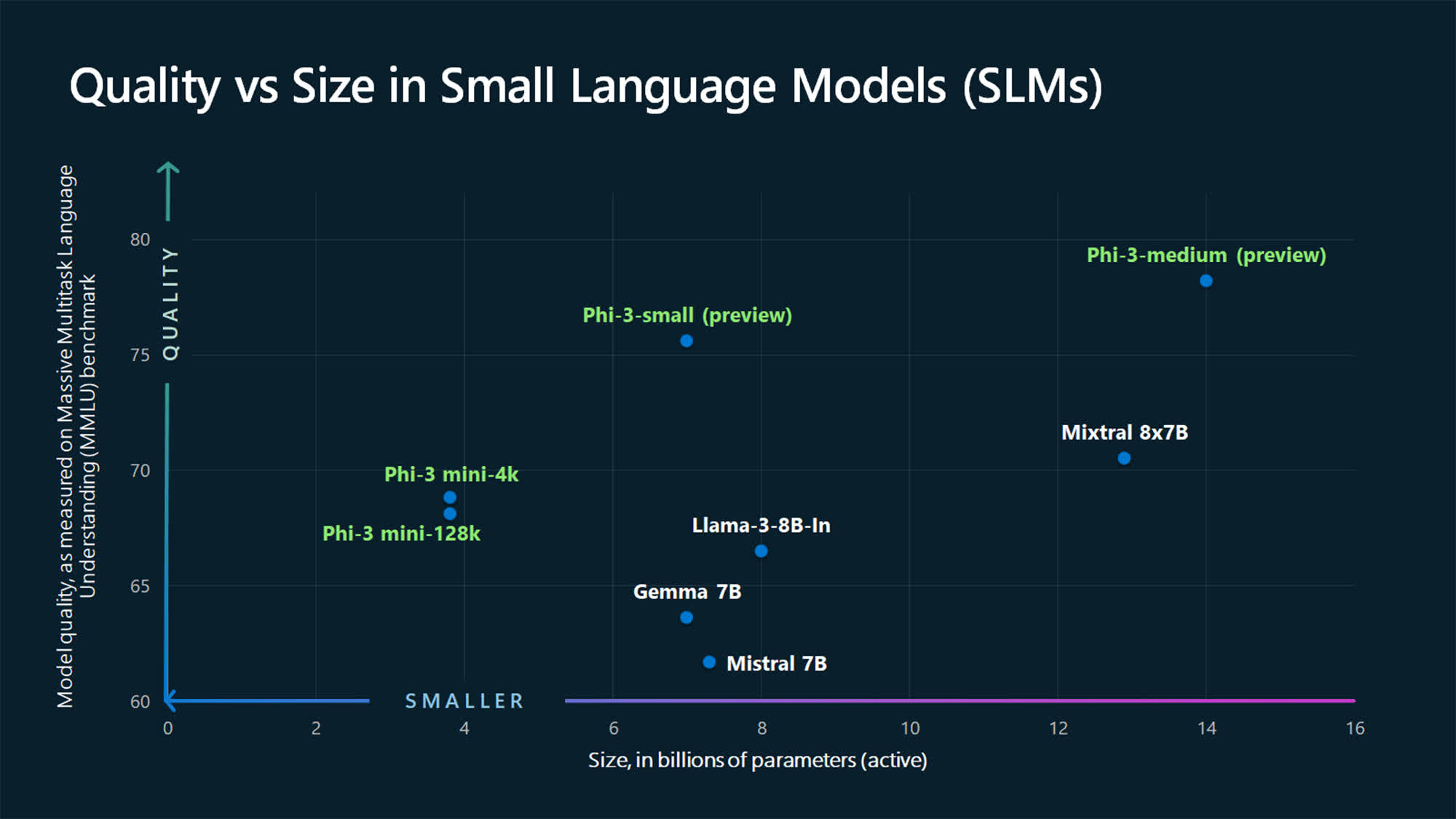

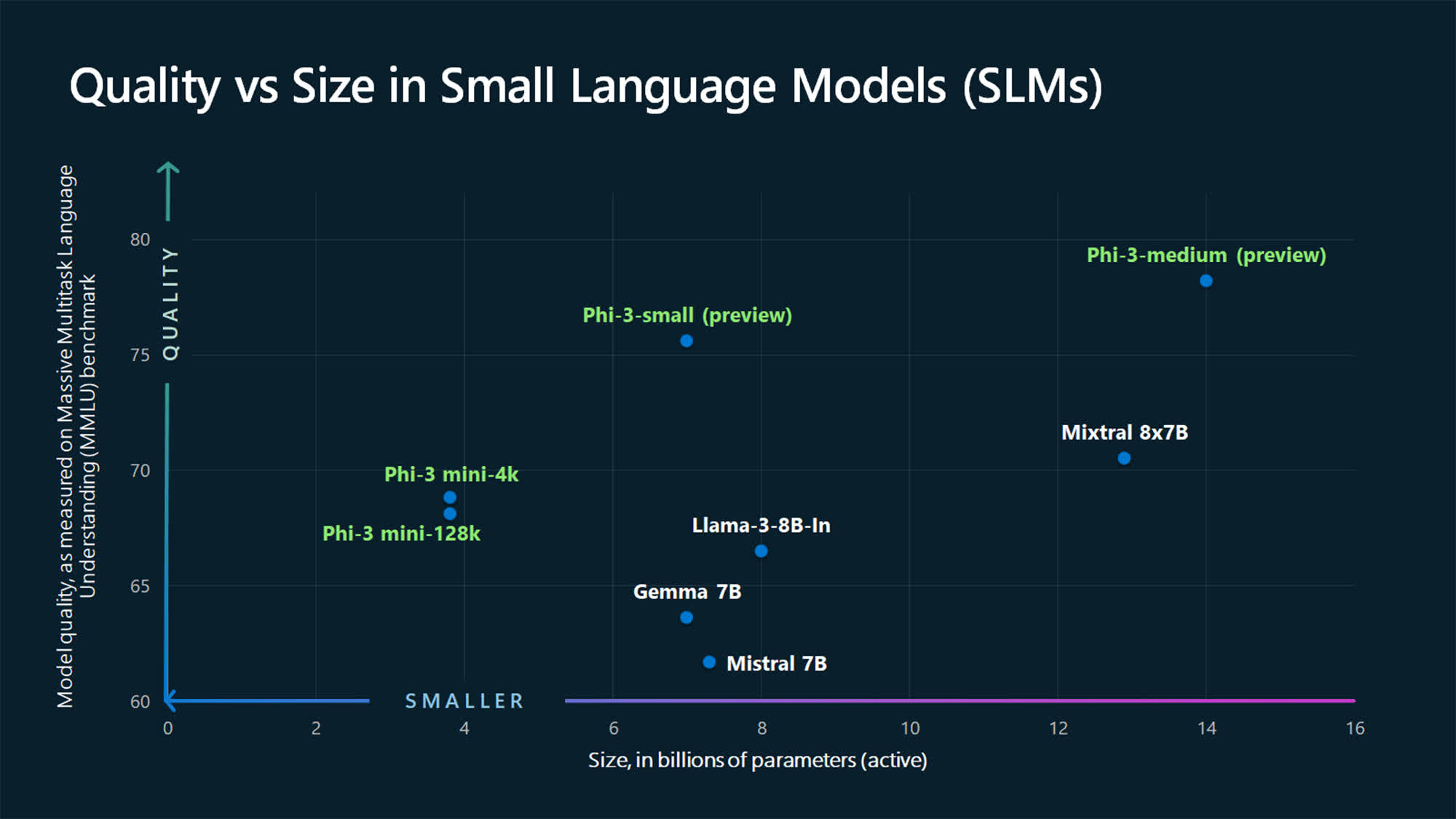

Why it matters: Advanced AI capabilities usually require massive, cloud-based models with billions or even trillions of parameters. But Microsoft claims its Phi-3 Mini is a pint-sized AI powerhouse that can run on your phone or laptop while delivering performance that rivals some of the largest language models on the market. Weighing in at just 3.8 billion parameters, Phi-3 Mini is the first of three compact AI models Microsoft has in the works. It may be small, but Microsoft says this little overachiever can punch above its weight class, producing responses close to what you'd get from models 10 times its size. The tech giant plans to follow the Mini with Phi-3 Small (7 billion parameters) and Phi-3 Medium (14 billion parameters) later. Even the 3.8 billion-parameter Mini is shaping up to be a major player, according to Microsoft's numbers. Those numbers show Phi-3 Mini holding its own against heavyweights like the 175+ billion-parameter GPT-3.5 that powers the free version of ChatGPT, as well as French AI company Mistral's Mixtral 8x7B model. Not bad at all for a model compact enough to run locally without connecting to the cloud. So how is size measured when it comes to AI language models? It all comes down to parameters: the numerical values in a neural network that determine how it processes and generates text. More parameters generally mean a better understanding of your queries, but they also increase computational demands. However, that's not always the case, as OpenAI CEO Sam Altman has explained.
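To make the link between parameter count and hardware demands concrete, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would occupy. It assumes 2 bytes per parameter at 16-bit precision and 1 or 0.5 bytes at 8-bit or 4-bit quantization; it ignores activations, the context cache, and runtime overhead. The precision levels are illustrative assumptions, not something Microsoft has said about how Phi-3 Mini is deployed, and the GPT-3.5 figure is simply the 175 billion cited above.

```python
# Back-of-the-envelope weight-memory estimate for the model sizes mentioned above.
# Assumption: weights only; activations, context (KV) cache, and runtime overhead are ignored.
# The precision options are illustrative, not Microsoft's stated deployment settings.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {
    "Phi-3 Mini": 3.8e9,
    "Phi-3 Small": 7e9,
    "Phi-3 Medium": 14e9,
    "GPT-3.5 (175B, as cited)": 175e9,
}


def weight_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n in MODELS.items():
        sizes = ", ".join(f"{p}: {weight_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM)
        print(f"{name:<26} {sizes}")
```

Under these assumptions, Phi-3 Mini's weights come to roughly 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, while a 175 billion-parameter model needs around 350 GB at 16-bit, which is why the smaller model is a plausible fit for consumer devices.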

While behemoth models like OpenAI's GPT-4 and Anthropic's Claude 3 Opus are said to pack hundreds of billions of parameters, Phi-3 Mini tops out at just 3.8 billion. Even so, Microsoft researchers were able to achieve surprising results through a new approach to curating the training data. By training the 3.8 billion-parameter model on carefully filtered, high-quality web content and material generated by earlier Phi models, they gave Phi-3 Mini strength well beyond its slim size. It can handle up to 4,000 tokens of context at a time, with a special 128k-token version also available. Microsoft's explanation is that learning from material like books and other high-quality texts that explain things clearly makes the language model's job of reading and understanding much easier. The implications could be huge. If tiny AI models like Phi-3 Mini can deliver capabilities that rival today's multi-billion-parameter behemoths, we may be able to leave AI cloud server farms behind for everyday tasks. Microsoft has already made the model available on its Azure cloud, as well as through the open model platforms Hugging Face and Ollama.

Microsoft's Phi-3 Mini boasts ChatGPT-level performance in an ultralight 3.8B-parameter package