On Tuesday, Microsoft announced a new, freely available lightweight AI language model named Phi-3-mini, which is simpler and less expensive to operate than traditional large language models (LLMs) such as OpenAI's GPT-4 Turbo. Its small size is ideal for running locally, which could bring an AI model of similar capability to the free version of ChatGPT to a smartphone without needing an Internet connection to run it.

The AI field typically measures the size of an AI language model by parameter count. Parameters are numerical values in a neural network that determine how the language model processes and generates text. They are learned during training on large datasets and essentially encode the model's knowledge in numerical form. More parameters generally allow the model to capture more nuanced and complex language-generation capabilities, but they also require more computational resources to train and run.

Some of the largest language models today, such as Google's PaLM 2, have hundreds of billions of parameters. OpenAI's GPT-4 is rumored to have more than one trillion parameters, spread over eight 220-billion-parameter models in a mixture-of-experts configuration. Both models require heavy-duty data center GPUs (and supporting systems) to run properly.

In contrast, Microsoft aimed small with Phi-3-mini, which contains only 3.8 billion parameters and was trained on 3.3 trillion tokens. That makes it ideal for running on consumer GPUs or the AI-acceleration hardware found in smartphones and laptops. It is a follow-up to two previous small language models from Microsoft: Phi-2, released in December, and Phi-1, released in June 2023.
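To make those parameter counts more concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes that the memory needed to hold a model's weights is roughly the parameter count multiplied by the bytes used per parameter. It ignores activations, caches, and runtime overhead, so real memory use will be higher. The precision table and the function name `weight_memory_gb` are illustrative choices, not anything taken from Microsoft's materials.

```python
# Rough estimate of the memory needed to store model weights alone.
# Assumption: footprint = parameters * bytes per parameter. Activations,
# caches, and runtime overhead are ignored, so real usage is higher.

# Common storage precisions (illustrative table, not from the article).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts as reported in the article; the GPT-4 figure is a rumor.
MODELS = {
    "Phi-3-mini": 3.8e9,
    "GPT-3.5": 175e9,
    "GPT-4 (rumored, 8 x 220B)": 8 * 220e9,
}

for name, params in MODELS.items():
    fp16 = weight_memory_gb(params, "fp16")
    int4 = weight_memory_gb(params, "int4")
    print(f"{name}: ~{fp16:,.1f} GB at 16-bit, ~{int4:,.1f} GB at 4-bit")
```

Under these assumptions, Phi-3-mini's weights come to roughly 7.6 GB at 16-bit precision and under 2 GB at 4-bit, which is within reach of laptops and phones, while the larger models land in the hundreds or thousands of gigabytes.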

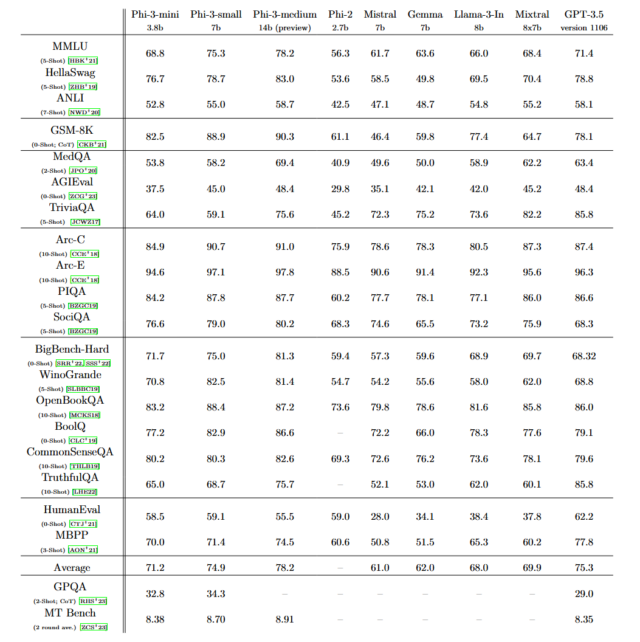

A chart provided by Microsoft showing Phi-3 performance on various benchmarks.

Phi-3-mini features a 4,000-token context window, but Microsoft also introduced a 128K-token version called “phi-3-mini-128K.” Microsoft has also created 7-billion and 14-billion parameter versions of Phi-3 that it plans to release later and that it says are “more capable” than phi-3-mini.

Microsoft says that Phi-3 features overall performance that “rivals that of models such as Mixtral 8x7B and GPT-3.5,” as detailed in a paper titled “Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone.” Mixtral 8x7B, from French AI company Mistral, uses a mixture-of-experts model, and GPT-3.5 powers the free version of ChatGPT.

“[Phi-3] looks like it's going to be a shockingly good small model if their benchmarks are reflective of what it can actually do,” said AI researcher Simon Willison in an interview with Ars.


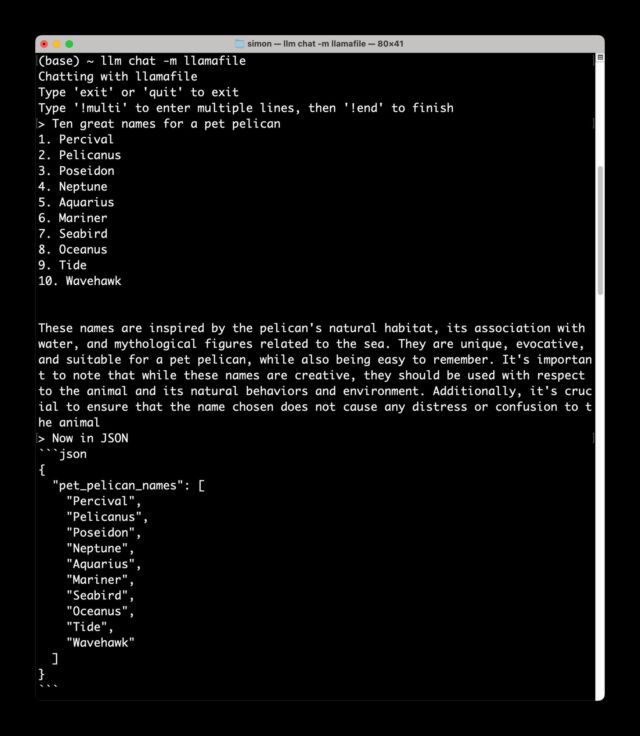

A screenshot of Phi-3-mini running locally on Simon Willison's Macbook. Credit: Simon Willison

“Most models that run on a local device still need hefty hardware,” says Willison. “Phi-3-mini runs comfortably with less than 8GB of RAM, and can churn out tokens at a reasonable speed even on just a regular CPU. It's licensed MIT and should work well on a $55 Raspberry Pi, and the quality of results I've seen from it so far are comparable to models 4x larger.”

How did Microsoft cram a capability potentially similar to GPT-3.5, which has 175 billion parameters, into such a small model? Its researchers found the answer by using carefully curated, high-quality training data they initially pulled from textbooks. “The innovation lies entirely in our dataset for training, a scaled-up version of the one used for phi-2, composed of heavily filtered web data and synthetic data,” writes Microsoft. “The model is also further aligned for robustness, safety, and chat format.”

Much has been written about the potential environmental impact of AI models and datacenters themselves, including on Ars. With new techniques and research, machine learning experts may be able to keep increasing the capability of smaller AI models, replacing the need for larger ones, at least for everyday tasks. That would not only save money in the long run but also require far less energy in aggregate, considerably shrinking AI's environmental footprint. AI models like Phi-3 may be a step toward that future if the benchmark results hold up.

Phi-3 is immediately available on Microsoft's Azure cloud platform, as well as through partnerships with the machine learning model platform Hugging Face and with Ollama, a framework that allows models to run locally on Macs and PCs.
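For readers who want to try a local run like the one Willison describes, the following is a minimal Python sketch of sending a prompt to a model served by Ollama over its local HTTP API. It assumes that Ollama is installed and running on its default port (11434) and that a Phi-3 model has already been pulled under the tag `phi3`. The tag, the helper names `build_request` and `ask_local_model`, and the timeout are assumptions made for illustration rather than details from Microsoft's announcement.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port, and a Phi-3
# model has been pulled under the tag "phi3". Adjust both to match your setup.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "phi3"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = MODEL_TAG, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the full completion arrives in the "response" field.
    return body["response"]
```

With the server running, calling `ask_local_model("Explain what a model parameter is in one sentence.")` returns the model's reply as a string, with no cloud service involved. Keeping request construction separate from the network call makes the payload easy to inspect without a running server.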
