When OpenAI debuted its newest AI model, GPT-4o, in a slick live webcast this past May, it was Mira Murati, the company’s chief technology officer, not the company’s better-known CEO, Sam Altman, who emceed the event.

Murati, dressed in a gray collared T-shirt and jeans, helped show off the software’s ability to interact through voice and image prompts, as well as its skill at simultaneous translation, math, and coding. It was an impressive live demo, timed to steal the thunder from OpenAI’s rival Google, which was set to unveil new features for its own AI chatbot, Gemini, later that week.

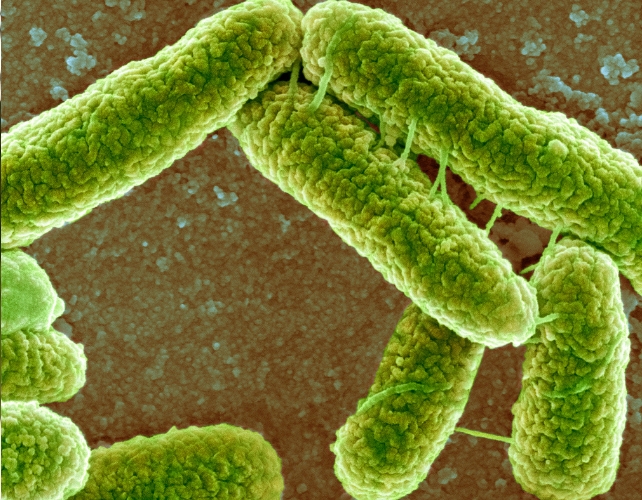

But behind the scenes, things were far from smooth, according to sources familiar with the company’s inner workings. Relentless pressure to introduce products such as GPT-4o, and a newer model, called o1, which debuted last month, was straining the ability of OpenAI’s research and safety teams to keep pace. There was friction between teams dedicated to ensuring OpenAI’s products did not pose undue risks, such as the ability to assist with producing biological weapons, and commercial teams dedicated to getting new products to market and making money.

Many OpenAI staff thought that o1 was not ready to be unveiled, but Altman pushed to launch it anyway to burnish OpenAI’s reputation as a leader in AI. This is the first time details of this debate over o1 have been reported publicly.

Murati was often caught in the middle of arguments between the research and technical teams she oversaw and the commercial teams that were eager to push products out. She also had to deal with complaints about the management style of OpenAI cofounder and president Greg Brockman, and with lingering resentment over the role she played in Altman’s brief ouster in a boardroom coup in November 2023.

These pressures seem to have taken a toll on Murati, who on Wednesday announced she was leaving OpenAI after six years, sending shock waves across the tech industry. She had played such a critical role in OpenAI’s meteoric rise that many speculated about the damage her exit could do to the company’s future. A spokesperson for Murati disputed this account, saying burnout or issues related to management struggles did not factor into her decision to leave.

The same day Murati said she would be leaving, two other senior staffers, Bob McGrew, chief research officer, and Barret Zoph, vice president of research, who had appeared alongside Murati in the GPT-4o launch webcast, also announced they were stepping down. From the outside, it looked as though the company was once again at risk of imploding at a time when it wanted to sell investors on the idea that it was maturing into a more stable, if still fast-growing, organization.

Altman, in a note to staff that he later posted to the social media platform X, tried to quell the firestorm by saying the three had reached their decisions to leave “independently and amicably.” The company had decided to announce the departures on the same day, he said, to allow for “a smooth handover to the next generation of leadership.” He said that such transitions are not usually “so abrupt,” but noted that “we are not a normal company.”

The three senior resignations add to a growing list of long-serving researchers and staff members who have left OpenAI in the past six months. Some of them have openly expressed concern at how the company’s culture and priorities have changed amid its rapid growth from a small nonprofit lab dedicated to ensuring superpowerful AI is developed for “the benefit of all humanity” into a for-profit company expanding at a breakneck pace.

In just nine years since its founding, OpenAI has pushed to the forefront of the AI revolution, striking fear into Big Tech companies like Google, Facebook parent Meta, and Amazon that are racing to dominate the latest technology. But OpenAI has also been convulsed by infighting and executive upheaval that threaten to slow the company just as its rivals gain momentum.

The latest chaos comes as OpenAI pushes to achieve three critically important business milestones. The company, which collects billions of dollars annually from the paid versions of its AI models, is trying to reduce the billions of dollars it is reportedly also losing because of high staff costs and the cost of the computing power needed to train and run its AI products.

At the same time, OpenAI is trying to complete a new venture funding round, raising as much as $7 billion in a deal that would value the company at $150 billion, making it among the most highly valued tech startups in history. The internal drama could scare away investors, potentially leading to a lower valuation.

Partly to make itself more attractive to investors, Altman has told staff that the company plans to revamp its current convoluted corporate structure, in which a nonprofit foundation controls its for-profit arm. Under the new arrangement, the for-profit would no longer be controlled by the nonprofit.

Tensions among OpenAI’s staff flared most publicly in November 2023, when OpenAI’s nonprofit board abruptly fired Altman, saying he had “not been consistently candid” with its members. Altman’s firing set off five days of spectacle, ending with Altman’s rehiring and the resignation of several board members. An outside law firm later engaged by a newly constituted board to investigate Altman’s firing concluded that there had been a fundamental breakdown in trust between the board and Altman, but that none of his actions mandated his removal. OpenAI staffers internally refer to the episode as “the Blip” and had hoped to reassure the outside world that the drama was behind them.

But the resignations of Murati, McGrew, and Zoph suggest that the tensions that have roiled OpenAI may be far from fully quieted.

The reporting for this article is based on interviews with current and former OpenAI employees and people familiar with its operations. All of them requested anonymity for fear of violating nondisclosure clauses in employment or exit agreements.

An OpenAI spokesperson said the company disagreed with many of the characterizations in this article but did “recognize that evolving from an unknown research lab into a global company that delivers advanced AI research to hundreds of millions of people in just two years requires growth and adaptation.”

Rushed development and safety testing

Amid intense competition with rival AI companies and pressure to prove its technological leadership to potential investors, OpenAI has rushed the testing and rollout of its latest and most powerful AI models, according to sources familiar with the process behind both launches.

The GPT-4o launch was “unusually chaotic even by OpenAI standards,” one source said, adding that “everyone hated the process.” Teams were given only nine days to conduct safety assessments of the model, forcing them to work 20-hour-plus days to meet the launch date, according to another source familiar with the rollout. “It was definitely rushed,” this source said.

Safety teams pleaded with Murati for more time, the source said. In the past, Murati had sometimes intervened, over the objections of OpenAI’s commercial teams, to delay model debuts when safety teams said they needed more time for testing. But in this case, Murati said the timing could not be changed.

That deadline was largely dictated by another company: Google. OpenAI, the sources said, knew Google was planning to introduce a number of new AI features, and to tease a powerful new AI model with capabilities similar to GPT-4o’s, at its Google I/O developer conference on May 14. OpenAI desperately wanted to draw attention away from those announcements and derail any narrative that Google was pulling ahead in the race to develop ever more capable AI models. So it scheduled its live debut of GPT-4o for May 13.

In the end, OpenAI’s safety testing had not been fully concluded or verified by the time of the launch, but the safety team’s preliminary assessment was that GPT-4o was safe to launch. Later, however, the source said, a safety researcher discovered that GPT-4o appeared to exceed OpenAI’s own safety threshold for “persuasion,” meaning how effective the model is at convincing people to change their views or perform some task. This matters because persuasive models could be used for nefarious purposes, such as convincing someone to believe in a conspiracy theory or to vote a certain way.

The company has said it will not release any model that presents a “high” or “critical” risk on any safety metric. In this case, OpenAI had initially said GPT-4o presented a “medium” risk. But the researcher then told others internally that GPT-4o might actually present a “high” risk on the persuasion metric, according to the source.

OpenAI denies this characterization and says its release timelines are determined solely by its own internal safety and readiness processes. An OpenAI spokesperson said that the company “followed a deliberate and empirical safety process” for the release of GPT-4o and that the model “was determined safe to deploy” with a “medium” rating for persuasion. The company said the higher scores for the model found after it rolled out were the result of a methodological flaw in those tests and did not reflect GPT-4o’s actual risk. It noted that since the model’s debut in May, “it has been safely used by hundreds of millions of people,” giving the company “confidence in its risk assessment.”

Aspects of this timeline were previously reported by the Wall Street Journal, but were confirmed independently to Fortune by multiple sources.

In addition, Fortune has learned that similar issues surrounded the debut of OpenAI’s latest model, o1, which was unveiled on September 12, generating considerable buzz. It is more capable at tasks that require reasoning, logic, and math than previous AI models and is seen by some as another important step toward AGI, or artificial general intelligence. This milestone, long the Holy Grail of computer scientists, refers to an AI system matching typical human cognitive abilities. AGI is OpenAI’s stated goal.

But the launch of the model, which so far is publicly available only in a less capable “preview” version and a “mini” version tailored to math and coding, caused friction among the company’s senior executives, one source familiar with the matter said. Some OpenAI staffers felt the model was both too inconsistent in its performance and not safe enough to release, the source said.

In addition, Wojciech Zaremba, an OpenAI researcher and cofounder, alluded to clashes among staff earlier in the development of o1 in a post on X responding to the departures of Murati, McGrew, and Zoph. He said that he and Zoph had “a fierce fight about compute for what later became o1,” but he did not elaborate on the specifics of the argument.

After o1 was trained, Altman pushed for the model to be released as a product almost immediately. The CEO insisted on a quick rollout, the source who spoke to Fortune said, largely because he was eager to prove to potential investors in the company’s latest funding round that OpenAI remains at the forefront of AI development, with AI models more capable than those of its competitors.

Many teams who reported to Murati were convinced o1 was not ready to be released and had not really been shaped into a product. Their objections, however, were overruled, the source said.

Even now, a team known as “post-training,” which takes a model once it has been trained and finds ways to fine-tune its responses to be both more helpful and safer, is still refining o1, the source said. Usually, this step is completed before a model’s debut. Zoph, who announced he was leaving on the same day as Murati, had been in charge of the post-training team.

In response to Fortune’s questions about o1, OpenAI provided a statement from Zico Kolter, a Carnegie Mellon University computer scientist, and Paul Nakasone, a former director of the U.S. National Security Agency, who serve on the Safety and Security Oversight Committee of OpenAI’s board. Kolter and Nakasone said the o1 model release “demonstrated rigorous evaluations and safety mitigations the company implements at every step of model development” and that o1 had been delivered safely.

A spokesperson for Murati denied that stress related to the headlong rush to get models released, or management headaches, played any role in her decision to resign. “She worked with her teams closely and, coming off big wins, felt it was the right time to step away,” the spokesperson said.

Lingering questions about Murati’s loyalty

Conflicts over the release of AI models may not have been the only source of tension between Altman and Murati. Some sources familiar with the company said there was speculation among OpenAI employees that Altman viewed her as disloyal because of her role in his brief ouster during “the Blip.”

Among OpenAI staff, suspicions about Murati’s loyalty surfaced almost immediately after Altman’s firing in November, one source said. For one thing, the old board had appointed Murati as interim CEO. Then, at an all-hands meeting on the day of Altman’s dismissal, stunned employees quizzed senior staff about when they had first learned of the board’s decision. All of the executives said they had found out only when the decision was announced, except Murati. She said the board had informed her 12 hours before the decision was announced. Surprised, some Altman loyalists thought she should have warned Altman, who was blindsided by his ouster.

At the meeting, Murati told employees she would work with the rest of the company’s executives to push for Altman’s reinstatement. Days later, the board replaced her as interim CEO with veteran tech executive Emmett Shear. Murati resumed her role as CTO when Altman returned as CEO, five days after his firing.

Murati may have played a bigger role in Altman’s brief ouster, however, than she has let on. The New York Times reported in March that Murati had written a memo to Altman expressing concerns about his management style and that she later brought those concerns to OpenAI’s board. It also reported that her conversations with the board had factored into its decision to fire Altman.

Murati, through a lawyer, issued a statement to the newspaper denying that she had reached out to OpenAI’s board in order to get him fired. But she later told staff that when individual board members had contacted her, she provided “feedback” about Altman, adding that Altman was already aware of her views since “I’ve not been shy about sharing feedback with him directly.” She also said at the time that she and Altman “had a strong and productive partnership.”

Despite her assurances, some within OpenAI questioned whether Murati’s relationship with Altman had been irrevocably damaged, sources said. Others speculated that investors pouring billions into OpenAI did not like the idea of a senior executive whose loyalty to Altman was less than total.

Brockman ruffled feathers, Murati had to clean up the mess

Over the past year, Murati also had to deal with clashes between Brockman, the OpenAI cofounder and president, and some of the teams that reported to her. Brockman, a notorious workaholic, had no specific portfolio, sources said. Instead, he tended to throw himself into a variety of projects, sometimes uninvited and at the last minute. In other instances, he pushed staff to work at his own brutal pace, sometimes messaging them at all hours of the day and night.

Brockman’s behavior riled staffers on those teams, with Murati often brought in either to try to rein in Brockman or to soothe bruised egos, sources said.

Eventually, Brockman was “voluntold” to take a sabbatical, one source said. The Wall Street Journal reported that Altman met with Brockman and told him to take time off. On August 6, Brockman announced on social media that he was “taking a sabbatical” and that it was his “first time to relax since cofounding OpenAI 9 years ago.”

An OpenAI spokesperson said Brockman’s decision was entirely his own and reiterated that he would be returning to the company “at the end of the year.”

Brockman may not have been the only staff headache weighing on Murati and those who reported to her. In his post on X, Zaremba also wrote that he had been reprimanded by McGrew “for doing a jacuzzi with a coworker,” setting off speculation on social media about a corporate culture with few boundaries. Zaremba did not provide additional details.

A spokesperson for Murati emphasized that managing staff conflicts is a normal part of a senior executive’s job and that burnout or stress never factored into Murati’s decision to resign. “Her reason for leaving is, after a successful transition, to turn her full attention and energies toward her exploration and whatever comes next,” the spokesperson said.

A shifting culture

Brockman’s sabbatical and Murati’s departure are part of a sweeping transformation of OpenAI in the 10 months since Altman’s brief ouster. In addition to the senior executives who announced their departures last week, Ilya Sutskever, an OpenAI cofounder and its former chief scientist, left the company in May.

Sutskever had been on OpenAI’s board and had voted to fire Altman. Following Altman’s rehiring, Sutskever, who had been leading the company’s efforts to research ways to control future powerful AI systems that might be smarter than all humans combined, never returned to work at the company. He has since founded his own AI startup, Safe Superintelligence.

After Sutskever’s departure, Jan Leike, another senior AI researcher who had co-led the so-called “superalignment” team with Sutskever, also announced he was leaving to join OpenAI rival Anthropic. In a post on X, Leike took a parting shot at OpenAI for, in his view, increasingly prioritizing “shiny products” over AI safety.

John Schulman, another OpenAI cofounder, also left in August to join Anthropic, saying he wanted to focus on AI safety research and “hands-on technical work.” In the past six months, nearly half of OpenAI’s safety researchers have also resigned, raising questions about the company’s commitment to safety.

Meanwhile, OpenAI has been hiring at a frenetic pace. During Altman’s brief ouster in November, OpenAI staff who wanted to signal their support for his return took to X to post the phrase “OpenAI is nothing without its people.” But increasingly, OpenAI’s people are not the same ones who were at the company at the time.

OpenAI has more than doubled in size since “the Blip,” going from fewer than 800 employees to close to 1,800. Many of the new hires have come from big technology companies or conventional fast-growing startups rather than from the niche fields of AI research from which OpenAI traditionally drew many of its employees.

The company now has many more employees from commercial fields such as product management, sales, risk, and developer relations. These people may be less motivated by the quest to develop safe AGI than by the chance to create products that people use today, and by the chance for a big payday.

The influx of new hires has changed the atmosphere at OpenAI, one source said. There are fewer “conversations about research, more conversations about product or deployment into society.”

At least some former employees seem distressed by Altman’s plans to revamp the company’s corporate structure so that its for-profit arm is no longer controlled by OpenAI’s nonprofit foundation. The changes would also remove the current limit, or cap, on how much OpenAI’s investors can earn.

Gretchen Krueger, a former OpenAI policy researcher who resigned in May, posted on X on September 29 that she was concerned about the proposed changes to OpenAI’s structure. “OpenAI’s nonprofit governance and profit cap are part of why I joined in 2019,” Krueger wrote, adding that removing the caps and freeing the for-profit from oversight by the nonprofit “feels like a step in the wrong direction, when what we need is multiple steps in the right direction.”

Some current employees, however, say they are not unhappy about the changes. They hope internal conflict will become less frequent now that colleagues whom they describe as too academic or overly worried about AI safety, and therefore reluctant ever to release products, are increasingly choosing to leave.

Altman told staff in the memo that he would, for the time being, oversee OpenAI’s technical staff personally. Previously, Altman had focused primarily on public speaking, meeting with government officials, key partnerships, and fundraising, while leaving technical developments to other executives.

Of OpenAI’s 11 original cofounders, only Altman and Zaremba remain at the company full-time. Zaremba, in his post on the departure of Murati, McGrew, and Zoph, noted that Altman, “for all his shortcomings and mistakes, has created an incredible organization.” Now Altman has to prove that organization has the depth of talent to keep succeeding without many of the senior leaders who helped him get this far, and without further drama.