Plasticity loss in Continual ImageNet. Credit: Nature (2024). DOI: 10.1038/s41586-024-07711-7

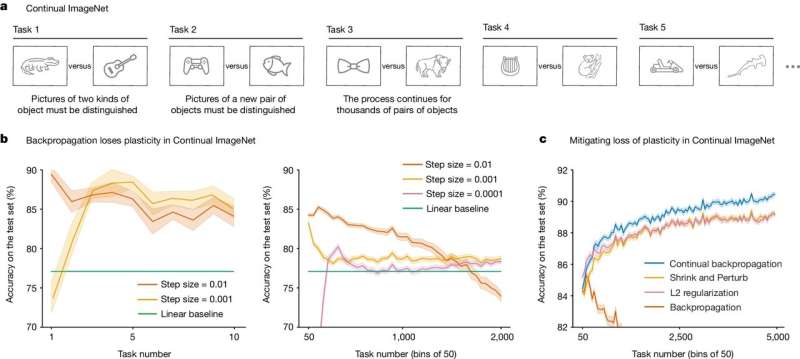

A team of AI researchers and computer scientists at the University of Alberta has found that current artificial neural networks used with deep-learning systems lose their ability to learn during extended training on new data. In their study, reported in the journal Nature, the group found a way to overcome these problems with plasticity in both supervised and reinforcement learning AI systems, allowing them to continue to learn.

Over the past few years, AI systems have become mainstream. Among them are large language models (LLMs), which produce seemingly intelligent responses from chatbots. But one thing they all lack is the ability to keep learning while they are in use, a drawback that prevents them from growing more accurate as they are used more. They are also unable to grow any more intelligent by training on new datasets.

The researchers tested the ability of conventional neural networks to keep learning after training on their original datasets and found what they describe as catastrophic forgetting, in which a system that is trained on new material loses the ability to carry out a task it could previously perform.

They note that this outcome is logical, considering that LLMs were designed to be sequential learning systems and learn by training on fixed datasets. During testing, the research team found that the systems also lose their ability to learn altogether if trained sequentially on multiple tasks, a feature they describe as loss of plasticity. But they also found a way to fix the problem: resetting the weights that have previously been associated with nodes in the network.

In artificial neural networks, weights are used by nodes as a measure of their strength. Weights can gain or lose strength via the signals sent between nodes, which in turn are affected by the results of mathematical calculations. As a weight increases, the importance of the information it conveys increases.
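As a rough illustration of how a weight scales the importance of a signal, the sketch below computes the output of a single node as a weighted sum of its inputs. The function name `node_output`, the ReLU activation and the example numbers are assumptions chosen for illustration; they do not come from the study.

```python
def node_output(inputs, weights, bias=0.0):
    """Return the weighted sum of the inputs, passed through a ReLU activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return max(0.0, total)


# Same inputs, but the first connection carries a small or a large weight.
inputs = [1.0, 1.0]
small = node_output(inputs, [0.1, 0.5])  # first input contributes little
large = node_output(inputs, [0.9, 0.5])  # first input contributes more
```

With identical inputs, the node's output grows as the first weight grows, which is the sense in which a larger weight makes the information it carries count for more.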

The researchers suggest that reinitializing the weights between training sessions, using the same methods that were used to initialize the system, should allow the system to maintain plasticity and keep learning on additional training datasets.
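The idea of reinitializing weights between training sessions can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the helper names `init_weights` and `reinitialize_fraction`, the uniform initializer, the random choice of which units to reset and the 20% fraction are all hypothetical. The only point taken from the article is that the reset reuses the same initializer that created the weights.

```python
import random


def init_weights(n_in, n_out, rng):
    """Draw an n_out x n_in weight matrix from a uniform range scaled by fan-in."""
    bound = (1.0 / n_in) ** 0.5
    return [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]


def reinitialize_fraction(weights, fraction, rng):
    """Redraw the incoming weights of a random fraction of units, in place.

    The fresh weights come from init_weights, the same initializer used at
    the start. Returns the sorted indices of the units that were reset.
    Choosing units at random is an assumption made for this sketch.
    """
    n_out = len(weights)
    n_in = len(weights[0])
    n_reset = int(round(fraction * n_out))
    chosen = sorted(rng.sample(range(n_out), n_reset))
    fresh = init_weights(n_in, n_reset, rng)
    for unit, row in zip(chosen, fresh):
        weights[unit] = row
    return chosen


rng = random.Random(0)
layer = init_weights(n_in=4, n_out=10, rng=rng)
before = [row[:] for row in layer]  # copy, to compare after the reset

# Hypothetical schedule: reset 20% of the units between two training sessions.
reset_units = reinitialize_fraction(layer, fraction=0.2, rng=rng)
```

Only the selected units receive fresh weights; the remaining units keep whatever they learned, so the network retains most of its prior training while regaining some capacity to adapt.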

More information:

Shibhansh Dohare et al., Loss of plasticity in deep continual learning, Nature (2024). DOI: 10.1038/s41586-024-07711-7. www.nature.com/articles/s41586-024-07711-7

© 2024 Science X Network

Citation: New method allows AI to learn indefinitely (2024, August 22), retrieved 22 August 2024
