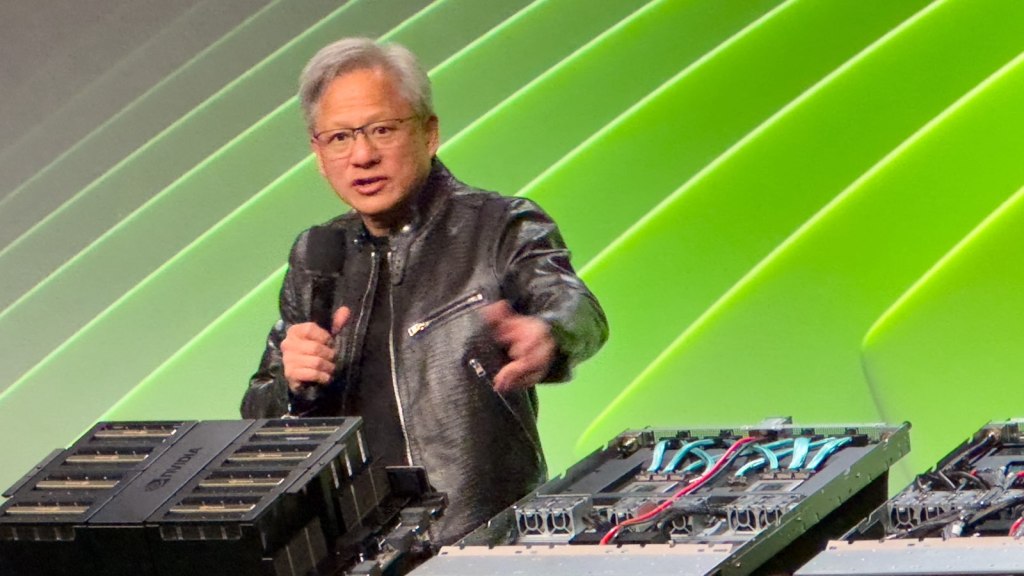

Photo Credit: Haje Jan Kamps

Artificial general intelligence (AGI), often referred to as “strong AI,” “full AI,” “human-level AI” or “general intelligence,” represents a significant future leap in the field of artificial intelligence. Unlike narrow AI, which is tailored to specific tasks such as detecting product flaws, summarizing the news, or building you a website, AGI will be able to perform a broad range of cognitive tasks at or above human level. Addressing the press this week at Nvidia's annual GTC conference, CEO Jensen Huang appeared to be getting bored of discussing the subject, not least because he finds himself misquoted a lot, he says.

The frequency of the question makes sense: The concept raises existential questions about humanity's role in, and control of, a future in which machines can outthink, outlearn and outperform humans in virtually every domain. The core of this concern lies in the unpredictability of AGI's decision-making processes and objectives, which might not align with human values or priorities (a concept explored in depth in science fiction since at least the 1940s). There is concern that once AGI reaches a certain level of autonomy and capability, it might become impossible to contain or control, leading to scenarios where its actions cannot be predicted or reversed.

When the sensationalist press asks for a timeframe, it is often baiting AI professionals into putting a timeline on the end of humanity, or at least of the current status quo. Needless to say, AI CEOs aren't always eager to tackle the subject.

Huang, however, spent some time telling the press what he does think about the topic.
Predicting when we will see a passable AGI depends on how you define AGI, Huang argues, and he draws a couple of parallels: Even with the complications of time zones, you know when the New Year happens and 2025 rolls around. If you're driving to the San Jose Convention Center (where this year's GTC conference is being held), you generally know you've arrived when you can see the enormous GTC banners. The crucial point is that we can agree on how to measure that you've arrived, whether temporally or geospatially, where you were hoping to go.

“If we specified AGI to be something very specific, a set of tests where a software program can do very well, or maybe 8% better than most people, I believe we will get there within 5 years,” Huang explains. He suggests that the tests could be a legal bar exam, logic tests, economic tests or perhaps the ability to pass a pre-med exam. Unless the questioner can be very specific about what AGI means in the context of the question, he's not willing to make a prediction. Fair enough.

AI hallucination is solvable

In Tuesday's Q&A session, Huang was asked what to do about AI hallucinations, the tendency of some AIs to make up answers that sound plausible but aren't based in fact. He appeared visibly frustrated by the question, and suggested that hallucinations are easily solvable by making sure that answers are well researched.

“Add a rule: For every single answer, you have to look up the answer,” Huang says, referring to the practice as “retrieval-augmented generation” and describing an approach very similar to basic media literacy: Examine the source and the context. Compare the facts contained in the source to known truths, and if the answer is factually inaccurate, even partially, discard the whole source and move on to the next one.
“The AI shouldn't just answer; it should do research first to determine which of the answers are the best.”

For mission-critical answers, such as health advice or similar, Nvidia's CEO suggests that checking multiple resources and known sources of truth is perhaps the way forward. Of course, this means that the generator creating an answer needs to have the option to say, “I don't know the answer to your question,” or “I can't get to a consensus on what the right answer to this question is,” or even something like “Hey, the Super Bowl hasn't happened yet, so I don't know who won.”
