Man vs. machine in a sea of stones. (Getty Images)

In the ancient Chinese game of Go, artificial intelligence has generally been able to defeat the best human players since 2016. But in the last few years, researchers have discovered flaws in top-level AI Go algorithms that give humans a fighting chance. By using unorthodox "cyclic" strategies, which even a beginning human player could detect and defeat, a crafty human can often exploit gaps in a top-level AI's strategy and fool the algorithm into a loss.

Researchers at MIT and FAR AI wanted to see if they could improve this "worst case" performance in otherwise "superhuman" AI Go algorithms, testing three methods to harden the top-level KataGo algorithm's defenses against adversarial attacks. The results show that creating truly robust, unexploitable AIs may be difficult, even in environments as tightly controlled as board games.

Three ways to fail

In the pre-print paper "Can Go AIs be adversarially robust?", the researchers aim to create a Go AI that is truly "robust" against any and all attacks. That means an algorithm that can't be fooled into "game-losing blunders that a human would not commit," and one that would require a competing AI algorithm to spend significant computing power to defeat it. Ideally, a robust algorithm should also be able to overcome potential exploits by using additional computing resources when confronted with unfamiliar situations.

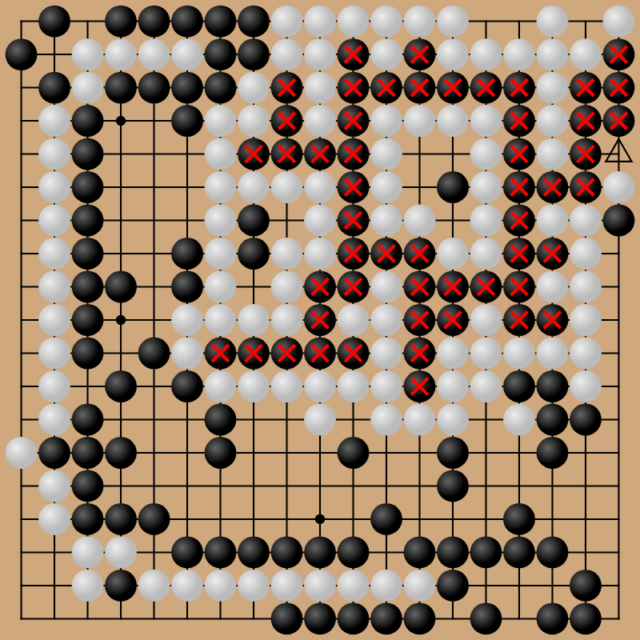

An example of the original cyclic attack in action.

The researchers tried three methods to develop such a robust Go algorithm. In the first, they simply fine-tuned the KataGo model using more examples of the unorthodox cyclic strategies that had previously defeated it, hoping that KataGo would learn to detect and defeat these patterns after seeing more of them.

This strategy initially seemed promising, letting KataGo win 100 percent of games against a cyclic "attacker." But after the attacker itself was fine-tuned (a process that used much less computing power than KataGo's fine-tuning), that win rate fell to 9 percent against a slight variation on the original attack.

For their second attempt, the researchers ran a multi-round "arms race" in which new adversarial models discover new exploits and new defensive models seek to plug the newly discovered holes. After 10 rounds of such iterative training, the final defending algorithm still won only 19 percent of games against a final attacking algorithm that had discovered a previously unseen variation on the exploit. This was true even though the updated algorithm maintained an edge against the earlier attackers it had been trained against.

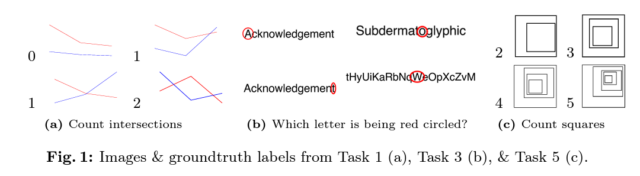

If you can handle patterns like these, you may have better ideas than current AIs.

Controlling these kinds of "worst case" scenarios is important for avoiding embarrassing mistakes when releasing AI systems to the public. But this new research shows that determined "adversaries" can often find new holes in an AI algorithm's performance faster and more easily than the algorithm can evolve to fix those problems.

And if that is true in Go, an enormously complex game with clearly defined rules, it may be even more true in less controlled environments. "The key takeaway for AI is that these vulnerabilities will be difficult to eliminate," FAR CEO Adam Gleave told Nature, linking the problem to similar issues such as jailbreaks in ChatGPT.

Still, the researchers are not giving up. While none of their methods were able to "make [new] attacks impossible" in Go, their strategies were able to plug the unchanging "fixed" exploits that had been previously identified. The work suggests that making AI systems robust against worst-case scenarios may matter as much as chasing new human-level or superhuman capabilities.
