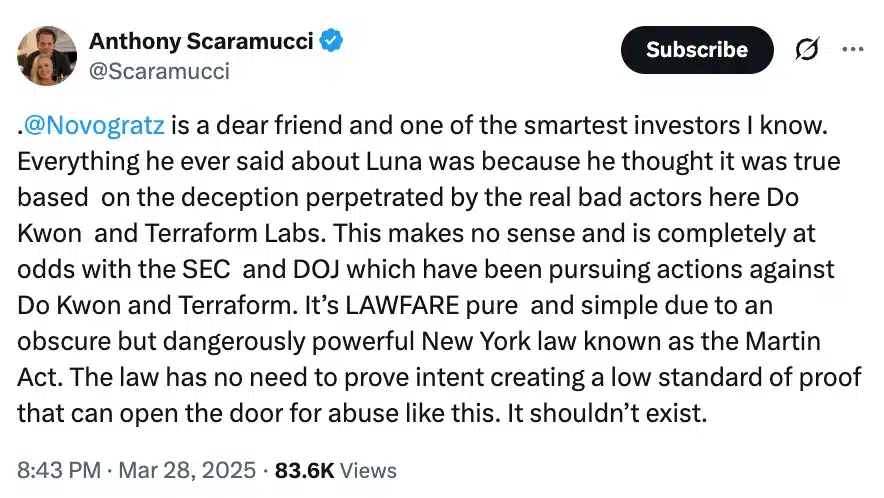

Yoshua Bengio (L) and Max Tegmark (R) speak about the development of artificial general intelligence during a live podcast recording of CNBC’s “Beyond The Valley” in Davos, Switzerland in January 2025.

CNBC

Artificial general intelligence built like “agents” could prove dangerous, as its creators might lose control of the system, two of the world’s most prominent AI scientists told CNBC.

In the latest episode of CNBC’s “Beyond The Valley” podcast, released on Tuesday, Max Tegmark, a professor at the Massachusetts Institute of Technology and the President of the Future of Life Institute, and Yoshua Bengio, dubbed one of the “godfathers of AI” and a professor at the Université de Montréal, spoke about their concerns about artificial general intelligence, or AGI. The term broadly refers to AI systems that are smarter than humans.

Their fears stem from the world’s biggest firms now talking about “AI agents” or “agentic AI,” which companies claim will allow AI chatbots to act like assistants or agents and assist in work and everyday life. Industry estimates vary on when AGI will come into existence.

With that concept comes the idea that AI systems could have some “agency” and thoughts of their own, according to Bengio.

“Researchers in AI have been inspired by human intelligence to build machine intelligence, and, in humans, there’s a mix of both the ability to understand the world like pure intelligence and the agentic behavior, meaning … to use your knowledge to achieve goals,” Bengio told CNBC’s “Beyond The Valley.”

“Right now, this is how we’re building AGI: we are trying to make them agents that understand a lot about the world, and then can act accordingly. But this is actually a very dangerous proposition.”

Bengio added that pursuing this approach would be like “creating a new species or a new intelligent entity on this planet” and “not knowing if they’re going to behave in ways that agree with our needs.”

“So instead, we can consider, what are the scenarios in which things go badly and they all rely on agency? In other words, it is because the AI has its own goals that we could be in trouble.”

The idea of self-preservation could also kick in as AI gets even smarter, Bengio said.

“Do we want to be in competition with entities that are smarter than us? It’s not a very reassuring gamble, right? So we have to understand how self-preservation can emerge as a goal in AI.”

AI tools the key

For MIT’s Tegmark, the key lies in so-called “tool AI”: systems that are created for a specific, narrowly defined purpose, but that don’t have to be agents.

Tegmark said a tool AI could be a system that tells you how to cure cancer, or something that possesses “some agency,” like a self-driving car, “where you can prove or get some really high, really reliable guarantees that you’re still going to be able to control it.”

“I think, on an optimistic note here, we can have almost everything that we’re excited about with AI … if we simply insist on having some basic safety standards before people can sell powerful AI systems,” Tegmark said.

“They have to demonstrate that we can keep them under control. Then the industry will innovate rapidly to figure out how to do that better.”

Tegmark’s Future of Life Institute in 2023 called for a pause to the development of AI systems that can compete with human-level intelligence.
While that has not happened, Tegmark said people are talking about the topic, and now it is time to take action to figure out how to put guardrails in place to control AGI.

“So at least now a lot of people are talking the talk. We have to see if we can get them to walk the walk,” Tegmark told CNBC’s “Beyond The Valley.”

“It’s clearly insane for us humans to build something way smarter than us before we figured out how to control it.”

There are several views on when AGI will arrive, partly driven by varying definitions.

OpenAI CEO Sam Altman said his company knows how to build AGI and that it will arrive sooner than people think, though he downplayed the impact of the technology.

“My guess is we will hit AGI sooner than most people in the world think and it will matter much less,” Altman said in December.

‘Dangerous proposition’: Top scientists warn of out-of-control AI