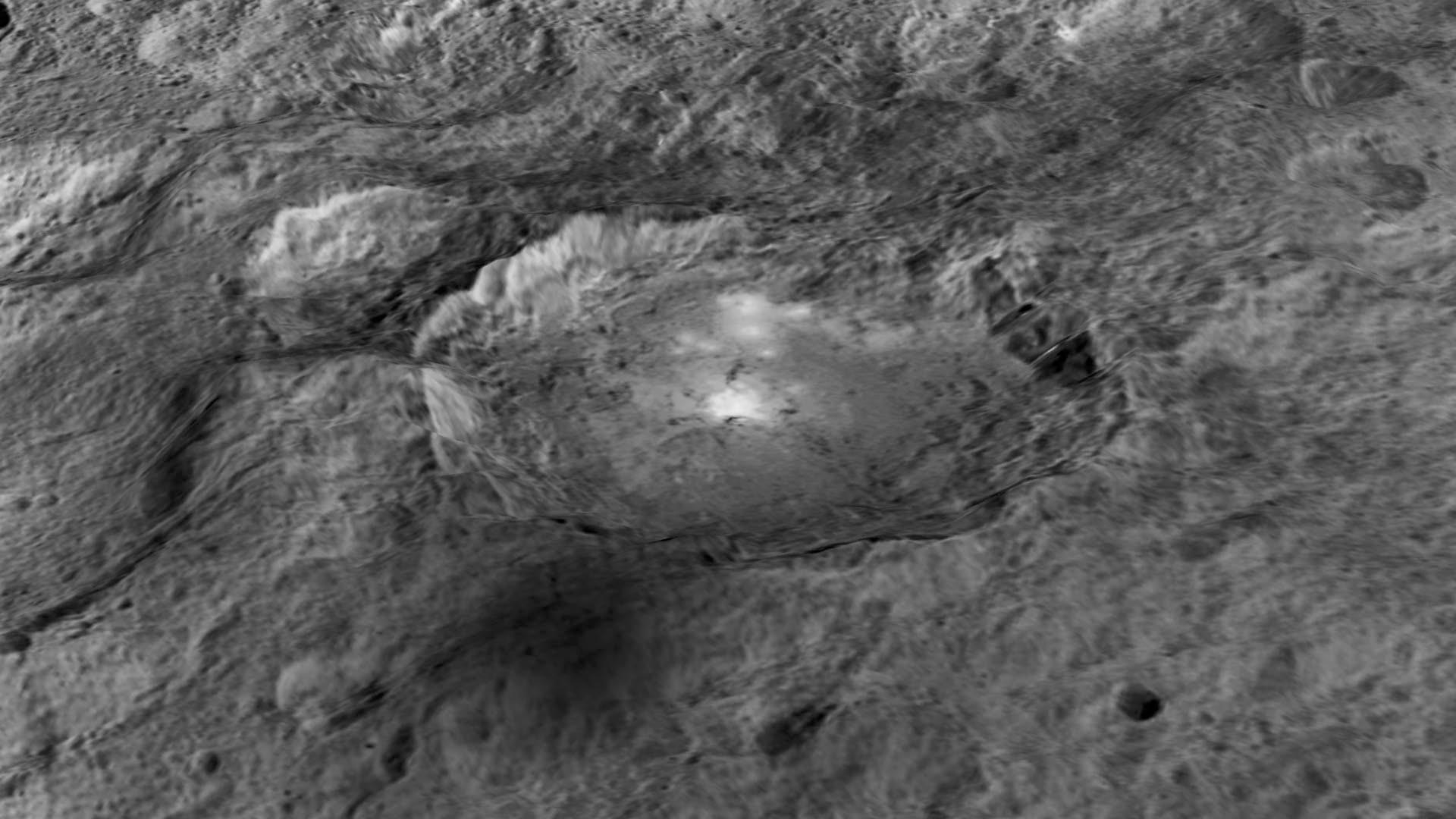

Summary: A recent study delves into pareidolia, the human tendency to perceive faces in inanimate objects, revealing how humans and AI detect illusory faces. Researchers found that AI models become much better at detecting pareidolic faces after being trained to recognize animal faces, hinting at an evolutionary link.

They also discovered a “Goldilocks Zone” of image complexity where pareidolia is most likely to occur. The team built an extensive dataset of 5,000 images to study these effects, offering insights that could improve face detection systems.

Key Facts:

- AI models detect pareidolic faces better after being trained on animal faces.
- A “Goldilocks Zone” of image complexity increases the likelihood of pareidolia.
- This research helps improve face detection systems, with broad applications.

Source: MIT

In 1994, Florida jewelry designer Diana Duyser discovered what she believed to be the Virgin Mary’s image in a grilled cheese sandwich, which she preserved and later auctioned for $28,000. But how much do we really understand about pareidolia, the phenomenon of seeing faces and patterns in objects when they aren’t really there?

A new study from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) delves into this phenomenon, introducing an extensive, human-labeled dataset of 5,000 pareidolic images, far surpassing previous collections.

This predicted “Goldilocks zone” was then validated in tests with both real human subjects and AI face detection systems. Credit: Neuroscience News

Using this dataset, the team discovered several surprising results about the differences between human and machine perception, and how the ability to see faces in a slice of toast might have saved your distant relatives’ lives.

“Face pareidolia has long fascinated psychologists, but it’s been largely unexplored in the computer vision community,” says Mark Hamilton, MIT PhD student in electrical engineering and computer science, CSAIL affiliate, and lead researcher on the work.

“We wanted to create a resource that could help us understand how both humans and AI systems process these illusory faces.”

So what did all of these fake faces reveal? For one, AI models don’t seem to recognize pareidolic faces like we do. Surprisingly, the team found that it wasn’t until they trained algorithms to recognize animal faces that they became significantly better at detecting pareidolic faces.

This unexpected connection hints at a possible evolutionary link between our ability to spot animal faces, crucial for survival, and our tendency to see faces in inanimate objects.

“A result like this seems to suggest that pareidolia might not arise from human social behavior, but from something deeper: like quickly spotting a lurking tiger, or identifying which way a deer is looking so our primordial ancestors could hunt,” says Hamilton.

Another intriguing discovery is what the researchers call the “Goldilocks Zone of Pareidolia,” a class of images where pareidolia is most likely to occur.

“There’s a specific range of visual complexity where both humans and machines are most likely to perceive faces in non-face objects,” says William T. Freeman, MIT professor of electrical engineering and computer science and principal investigator of the project. “Too simple, and there’s not enough detail to form a face. Too complex, and it becomes visual noise.”

To explore this, the team developed an equation that models how people and algorithms detect illusory faces. When analyzing this equation, they found a clear “pareidolic peak” where the likelihood of seeing faces is highest, corresponding to images that have “just the right amount” of complexity.

This predicted “Goldilocks zone” was then validated in tests with both real human subjects and AI face detection systems.

This new dataset, “Faces in Things,” dwarfs those of previous studies, which typically used only 20-30 stimuli.

This scale allowed the researchers to explore how state-of-the-art face detection algorithms behaved after fine-tuning on pareidolic faces, showing that not only could these algorithms be edited to detect these faces, but that they could also act as a silicon stand-in for our own brain, allowing the team to ask and answer questions about the origins of pareidolic face detection that are impossible to ask in humans.

To build this dataset, the team curated approximately 20,000 candidate images from the LAION-5B dataset, which were then meticulously labeled and judged by human annotators.

This process involved drawing bounding boxes around perceived faces and answering detailed questions about each face, such as the perceived emotion, age, and whether the face was accidental or intentional.

“Gathering and annotating thousands of images was a monumental task,” says Hamilton. “Much of the dataset owes its existence to my mom,” a retired banker, “who spent countless hours lovingly labeling images for our analysis.”

The study also has potential applications in improving face detection systems by reducing false positives, which could have implications for fields like self-driving cars, human-computer interaction, and robotics. The dataset and models could also help areas like product design, where understanding and controlling pareidolia could create better products.

“Imagine being able to automatically tweak the design of a car or a child’s toy so it looks friendlier, or ensuring a medical device doesn’t inadvertently appear threatening,” says Hamilton.

“It’s fascinating how humans instinctively interpret inanimate objects with human-like traits. For instance, when you glance at an electrical socket, you might immediately envision it singing, and you can even imagine how it would ‘move its lips.’ Algorithms, however, don’t naturally recognize these cartoonish faces in the same way we do,” says Hamilton.

“This raises intriguing questions: What accounts for this difference between human perception and algorithmic interpretation? Is pareidolia beneficial or detrimental? Why don’t algorithms experience this effect as we do?

“These questions sparked our investigation, as this classic psychological phenomenon in humans had not been thoroughly explored in algorithms.”

As the researchers prepare to share their dataset with the scientific community, they’re already looking ahead. Future work may involve training vision-language models to understand and describe pareidolic faces, potentially leading to AI systems that can engage with visual stimuli in more human-like ways.

“This is a delightful paper! It’s fun to read and it makes me think. Hamilton et al. propose a tantalizing question: Why do we see faces in things?” says Pietro Perona, the Allen E. Puckett Professor of Electrical Engineering at Caltech, who was not involved in the work.

“As they point out, learning from examples, including animal faces, goes only half-way to explaining the phenomenon. I bet that thinking about this question will teach us something important about how our visual system generalizes beyond the training it receives through life.”

Hamilton and Freeman’s co-authors include Simon Stent, staff research scientist at the Toyota Research Institute; Ruth Rosenholtz, principal research scientist in the Department of Brain and Cognitive Sciences, NVIDIA research scientist, and former CSAIL member; and CSAIL affiliates postdoc Vasha DuTell, Anne Harrington MEng ’23, and Research Scientist Jennifer Corbett.

Funding: Their work was supported, in part, by the National Science Foundation and the CSAIL MEnTorEd Opportunities in Research (METEOR) Fellowship, while being sponsored by the United States Air Force Research Laboratory and the United States Air Force Artificial Intelligence Accelerator. The MIT SuperCloud and Lincoln Laboratory Supercomputing Center provided HPC resources for the researchers’ results.

About this pareidolia and AI research news

Author: Rachel Gordon

Source: MIT

Contact: Rachel Gordon – MIT

Image: The image is credited to Neuroscience News

Original Research: The findings were presented at the European Conference on Computer Vision.