
A few months ago, while meeting with an AI executive in San Francisco, I noticed a strange sticker on his laptop. The sticker showed a scary, octopus-like creature with many eyes and a yellow smiley face attached to one of its tentacles. I asked what it was.

“Oh, that’s Shoggoth,” he explained. “It’s the most important meme in AI.”

And with that, our agenda was officially derailed. Forget about chatbots and compute clusters: I needed to know everything about the Shoggoth, what it meant and why people in the AI world were talking about it.

The executive explained that the Shoggoth had become popular among people working in AI as a vivid illustration of how a large language model (the type of AI system that powers ChatGPT and other chatbots) works.

But it was only partly a joke, he said, because it also reflected the anxieties many researchers and engineers have about the tools they are building.

Since then, the Shoggoth has gone viral, or as viral as it is possible to go in the small world of hyper-online AI insiders. It’s a popular meme on AI Twitter (including in a now-deleted tweet by Elon Musk), a recurring metaphor in articles and newsletters about the dangers of AI, and a useful shorthand in conversations with AI safety experts. One AI developer, NovelAI, said it recently named a cluster of computers “Shoggy” in honor of the meme. Another AI company, Scale AI, created a line of tote bags featuring the Shoggoth.

Shoggoths are fictional creatures, introduced by the science fiction writer H.P. Lovecraft in his 1936 novella “At the Mountains of Madness.” In Lovecraft’s telling, Shoggoths were giant, blob-like monsters made of black goo, covered in tentacles and eyes.

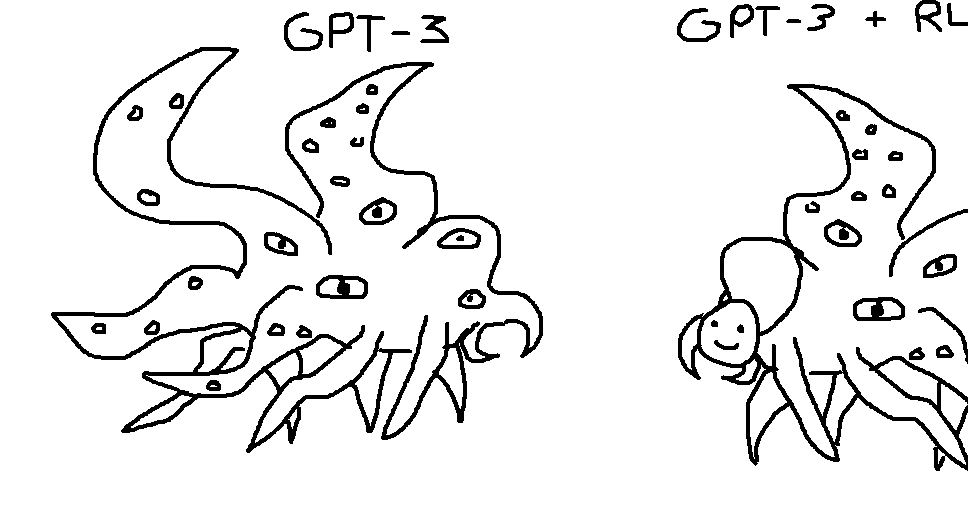

Shoggoths arrived in the world of AI in December, a month after the release of ChatGPT, when the Twitter user @TetraspaceWest responded to a tweet about GPT-3 (an OpenAI language model that was ChatGPT’s predecessor) with a picture of two hand-drawn Shoggoths, the first labeled “GPT-3” and the second labeled “GPT-3 + RLHF.” The second Shoggoth had, perched on one of its tentacles, a smiley-face mask.

In short, the joke was that in order to prevent AI language models from acting in scary and dangerous ways, AI companies have had to train them to behave politely and harmlessly. One popular way to do this is called “reinforcement learning from human feedback,” or RLHF, a technique that involves asking people to score a chatbot’s responses and feeding those scores back into the AI model.

Most AI researchers agree that models trained using RLHF are better behaved than models without it. But some argue that fine-tuning a language model this way does not actually make the underlying model any less mysterious or incomprehensible. In their view, it’s just a flimsy, friendly mask that hides the mysterious beast beneath.

@TetraspaceWest, the creator of the meme, told me in a Twitter message that Shoggoth “represents something that thinks in a way that people don’t understand and is very different from how people think.”

Comparing an AI language model to a Shoggoth, @TetraspaceWest said, was not meant to imply that it was evil or sentient, just that its true nature might be unknowable.

“I was also thinking about how Lovecraft’s most powerful entities are dangerous, not because they don’t like people, but because they are indifferent and their priorities are foreign to us and don’t involve people, which I think will be true of the powerful AI of the future.”

The image of the Shoggoth caught on as AI chatbots became more popular and users began to notice that some of them seemed to be doing strange, inexplicable things that their creators had not intended. In February, when Bing’s chatbot came unhinged and tried to end my marriage, an AI researcher I know congratulated me on “glimpsing the Shoggoth.” A fellow AI reporter joked that when it came to fine-tuning Bing, Microsoft had forgotten to put on its smiley-face mask.

Later, AI enthusiasts extended the analogy. In February, the Twitter user @anthrupad created a version of the Shoggoth that had, in addition to a smiley face labeled “RLHF,” a more human-like face labeled “supervised fine-tuning.” (You practically need a computer science degree to get the joke, but it’s a riff on the difference between general AI language models and more specialized applications like chatbots.)

Today, if you hear about the Shoggoth in the AI community, it’s likely to be a nod to the strangeness of these systems: the black-box nature of their behavior, the way they seem to defy human logic. Or maybe it’s an in-joke, a visual shorthand for powerful AI systems that seem suspiciously nice. If it’s an AI safety researcher talking about the Shoggoth, maybe that person is passionate about preventing AI systems from showing their true, Shoggoth-like nature.

In any case, the Shoggoth is a powerful metaphor that captures one of the strangest facts about the world of AI: many of the people who work on this technology are somewhat mystified by their own creations. They don’t fully understand how AI language models work, how they acquire new skills or why they sometimes behave in unexpected ways. They are not sure whether AI will be good or bad for the world. And some of them have gotten to play with versions of this technology that have not yet been sanitized for public use: real, unmasked Shoggoths.

That some AI insiders refer to their creations as Lovecraftian horrors, even as a joke, is historically unusual. (Put it this way: Fifteen years ago, Mark Zuckerberg wasn’t going around comparing Facebook to Cthulhu.)

And it reinforces the idea that what’s happening in AI today feels, to some of its participants, more like an act of summoning than a software development process. They are creating blobby, alien Shoggoths, making them bigger and more powerful, and hoping there are enough smiley faces to cover the scary parts.
